I have several files of measured data that I want to open automatically, extract some values from, and combine into one data frame.

First I search for the file names, then open the files one by one in a `for` loop and combine the values. The code works, but because there are a lot of files it takes far too long, and at the moment I can't think of another way to do it. Is there a way to speed up the process, maybe without using loops? Avoiding the second (inner) loop in particular would improve performance.
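One possible direction, sketched under assumptions and not tested on the real files: replace the outer loop and `assign()` with `vapply()`, so each file is read once and its values go straight into a single data frame. The sketch assumes every file is whitespace-separated text without a header and that the wanted values are rows 25 to 264 of column 3, which is what the inner loop of the minimal example reads. `read_values()` and `collect_values()` are hypothetical helper names, and the result has one row per file rather than the diagonal layout of `df`.

```r
# Hypothetical helper: read one file and return the 240 wanted values.
# Assumption: no header, values in rows 25..264 of column 3.
read_values <- function(path, first_row = 25, n_values = 240, col = 3) {
  Data <- read.table(path, header = FALSE)
  as.numeric(Data[first_row + seq_len(n_values) - 1, col])
}

# Hypothetical helper: read every file below `dir` into one data frame.
collect_values <- function(dir = ".", n_values = 240) {
  files <- list.files(dir, recursive = TRUE, full.names = TRUE)
  # vapply() checks that each file yields exactly n_values numbers and
  # returns an n_values x length(files) matrix, one column per file.
  m <- vapply(files, read_values, numeric(n_values), n_values = n_values)
  # Transpose so there is one row per file and one column per value.
  out <- as.data.frame(t(m))
  out$file <- files
  rownames(out) <- NULL
  out
}

# Usage with two small generated files in a temporary directory:
demo_dir <- file.path(tempdir(), "measurements")
dir.create(demo_dir, showWarnings = FALSE)
for (j in 1:2) {
  d <- data.frame(a = 1:264, b = "x", c = (1:264) * j)
  write.table(d, file.path(demo_dir, paste0("run", j, ".txt")),
              col.names = FALSE, row.names = FALSE)
}
res <- collect_values(demo_dir)
dim(res)  # 2 rows (one per file) and 241 columns (240 values + file)
```

`vapply()` is used instead of `sapply()` because it stops with an error if one file yields a different number of values. Neither is inherently much faster than a plain `for` loop; the saving comes from not assigning into a data frame cell by cell. If reading the files dominates the run time, the `nrows` and `colClasses` arguments of `read.table()` can reduce the parsing work.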

I've made a minimal example of the code. Some lines (such as `data_s`) don't make much sense in this example, but they do in the real code ;-)

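```r
all.files <- list.files(recursive = TRUE)

for (i in seq_along(all.files)) {
  # Fresh empty result for every file (df is removed at the end of each pass)
  df <- data.frame(matrix(NA, nrow = 1000, ncol = 242))

  Data <- read.table(all.files[i], header = FALSE)

  name <- as.character(Data[i, 2])

  data_s <- i + 6

  # Copy 240 values from column 3 of Data into a diagonal band of df
  for (k in 1:240) {
    df[data_s + k, k + 2] <- Data[24 + k, 3]
  }

  # Store the result under the name taken from the file
  assign(name, df)

  rm(name, df)
}
```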
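For the inner loop specifically, a minimal sketch: R can assign many single cells at once when the index is a two-column (row, column) matrix, so all 240 writes `df[data_s + k, k + 2] <- Data[24 + k, 3]` become one statement. The sketch fills a plain numeric matrix, which is much cheaper to index than a data frame, and converts it with `data.frame()` once at the end. `fill_diagonal()` is a hypothetical name; the sizes 240, 1000 and 242 are copied from the example, and the comparison at the end uses made-up data.

```r
# Hypothetical helper: vectorized version of
#   for (k in 1:240) df[data_s + k, k + 2] <- Data[24 + k, 3]
fill_diagonal <- function(Data, data_s, n_values = 240,
                          nrow = 1000, ncol = 242) {
  k <- seq_len(n_values)
  m <- matrix(NA_real_, nrow = nrow, ncol = ncol)
  # One (row, column) pair per k, all assigned in a single step
  m[cbind(data_s + k, k + 2)] <- Data[24 + k, 3]
  data.frame(m)  # same X1..X242 column names as data.frame(matrix(...))
}

# Made-up input with the layout the example assumes
Data <- data.frame(V1 = 1:300, V2 = "a", V3 = (1:300) / 10)
data_s <- 7
fast <- fill_diagonal(Data, data_s)

# Same result as the original element-by-element loop:
slow <- data.frame(matrix(NA_real_, nrow = 1000, ncol = 242))
for (k in 1:240) slow[data_s + k, k + 2] <- Data[24 + k, 3]
all.equal(fast, slow)  # TRUE
```

Because `data_s + 240` must stay within the 1000 rows, `data_s` can be at most 760 here; larger values make the matrix assignment stop with a "subscript out of bounds" error instead of silently adding rows.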

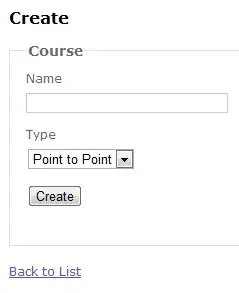

That's the structure of `Data`:

That's how my final data frame (`df`) should look:

Thanks a lot for your help!