I had seen this great answer on image conversions using __m128i, and thought I'd try and use AVX2 to see if I could get it any faster. The task is taking an input RGB image and converting it to RGBA (note the other question is BGRA, but that's not really a big difference...).

I can include more code if desired, but this stuff gets quite verbose and I'm stuck on something seemingly very simple. Suppose for this code that everything is 32-byte aligned, compiled with -mavx2, etc.

Given an input uint8_t *source RGB and output uint8_t *destination RGBA, it goes something like this (just trying to fill a quarter of the image in stripes [since this is vector land]).

#include <immintrin.h>

__m256i *src = (__m256i *) source;

__m256i *dest = (__m256i *) destination;

// for this particular image

unsigned width = 640;

unsigned height = 480;

unsigned unroll_N = (width * height) / 32;

for(unsigned idx = 0; idx < unroll_N; ++idx) {

// Load first portion and fill all of dest[0]

__m256i src_0 = src[0];

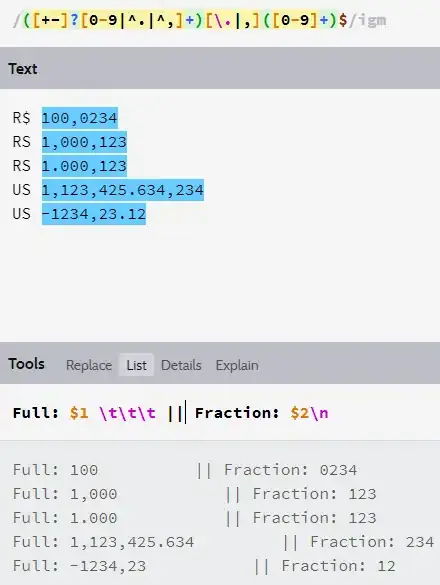

__m256i tmp_0 = _mm256_shuffle_epi8(src_0,

_mm256_set_epi8(

0x80, 23, 22, 21,// A07 B07 G07 R07

0x80, 20, 19, 18,// A06 B06 G06 R06

0x80, 17, 16, 15,// A05 B05 G05 R05

0x80, 14, 13, 12,// A04 B04 G04 R04

0x80, 11, 10, 9,// A03 B03 G03 R03

0x80, 8, 7, 6,// A02 B02 G02 R02

0x80, 5, 4, 3,// A01 B01 G01 R01

0x80, 2, 1, 0 // A00 B00 G00 R00

)

);

dest[0] = tmp_0;

// move the input / output pointers forward

src += 3;

dest += 4;

}// end for

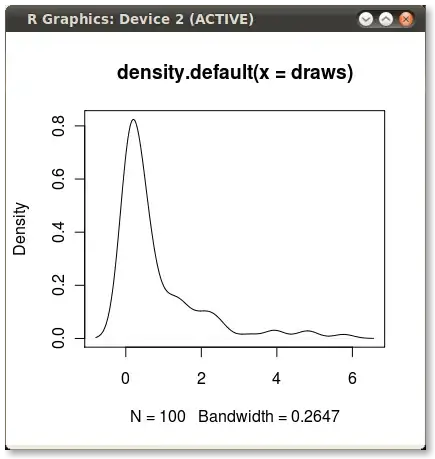

This doesn't even actually work. There are stripes showing up in each "quarter".

- My understanding is

0x80should be used to create0x00in the mask- It doesn't really even matter what value gets there (it's the alpha channel, in the real code it gets

OR'd with0xfflike the linked answer).

- It doesn't really even matter what value gets there (it's the alpha channel, in the real code it gets

- It somehow seems to be related to rows

04to07, if I make them all0x80leaving just00-03the inconsistencies go away.- But of course, I'm not copying everything I need to.

What am I missing here? Like is it possible I ran out of registers or something? I'd be very surprised by that...

Using

_mm256_set_epi8(

// 0x80, 23, 22, 21,// A07 B07 G07 R07

// 0x80, 20, 19, 18,// A06 B06 G06 R06

// 0x80, 17, 16, 15,// A05 B05 G05 R05

// 0x80, 14, 13, 12,// A04 B04 G04 R04

0x80, 0x80, 0x80, 0x80,

0x80, 0x80, 0x80, 0x80,

0x80, 0x80, 0x80, 0x80,

0x80, 0x80, 0x80, 0x80,

0x80, 11, 10, 9,// A03 B03 G03 R03

0x80, 8, 7, 6,// A02 B02 G02 R02

0x80, 5, 4, 3,// A01 B01 G01 R01

0x80, 2, 1, 0 // A00 B00 G00 R00

)