I've managed to delete all objects of one entity stored in Core Data (following this answer).

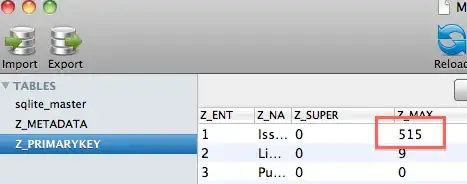

The problem is that the primary key keeps counting upwards. Is there a way, without manually writing an SQL query, to reset the `Z_MAX` value for that entity in the `Z_PRIMARYKEY` table? The screenshot above shows what I mean.

The value itself isn't a problem; my concern is that it could eventually reach the maximum integer value. My application syncs data with a web service and caches it with Core Data, so the primary key may grow by hundreds or thousands at a time. Deleting the entire SQLite database isn't an option, because I need to keep the data stored for other entities.
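To put the overflow concern into numbers, here is a rough Swift estimate of the headroom. It assumes the key behaves as a signed 64-bit integer (SQLite's INTEGER storage class holds values of up to 8 bytes), and the insert rate is a hypothetical worst case rather than a measurement from this app.

```swift
// Rough headroom estimate for an auto-incrementing primary key.
// ASSUMPTION: the key behaves as a signed 64-bit integer (Int64).
// The insert rate used below is hypothetical, not measured.

/// Years until `Int64.max` is reached at a constant daily insert count.
func yearsUntilOverflow(insertsPerDay: Int64) -> Double {
    precondition(insertsPerDay > 0, "insert rate must be positive")
    let days = Double(Int64.max) / Double(insertsPerDay)
    return days / 365.25
}

// Hypothetical worst case: 10,000 new rows per sync, 100 syncs per day.
let perDay: Int64 = 10_000 * 100
let years = yearsUntilOverflow(insertsPerDay: perDay)
print("At \(perDay) inserts/day: about \(years) years until overflow")
```

Under that assumption, even a million inserts a day leaves roughly 25 billion years of headroom. By contrast, a 32-bit key at the same rate would run out in under six years, so the answer depends on the key really being 64-bit.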

I've seen the `reset` method, but wouldn't that reset the entire SQLite database? How can I reset the primary key for just this one entity? The entity whose primary key I want to reset has no relationships to other entities.