I am trying to reconstruct a 3D model from two images taken with the same camera, using OpenCV with C++. I followed this method, but I still cannot find the mistake in my R and T computation.

Image 1: background removed to eliminate mismatches.

Image 2: translated only in the X direction with respect to Image 1, background removed to eliminate mismatches.

I found the intrinsic camera matrix K with a MATLAB calibration toolbox:

$$
K = \begin{bmatrix} 3058.8 & 0 & -500 \\ 0 & 3057.3 & 488 \\ 0 & 0 & 1 \end{bmatrix}
$$

All matched keypoints (SIFT with brute-force matching, mismatches eliminated) were shifted so that the origin is at the image center, with the y axis flipped to point up:

    // Keypoint positions relative to the image center, y flipped to point up.
    for (size_t i = 0; i < symMatches.size(); ++i) {
        obj_points.push_back(Point2f(keypoints1[symMatches[i].queryIdx].pt.x - image1.cols / 2, -1 * (keypoints1[symMatches[i].queryIdx].pt.y - image1.rows / 2)));
        scene_points.push_back(Point2f(keypoints2[symMatches[i].trainIdx].pt.x - image1.cols / 2, -1 * (keypoints2[symMatches[i].trainIdx].pt.y - image1.rows / 2)));
    }
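For comparison, here is a minimal sketch of mapping a raw keypoint to normalized camera coordinates with K instead of the image center. It assumes K was calibrated in the same pixel frame the detector reports (origin at the top-left, y pointing down) and has zero skew; `Intrinsics` and `pixelToNormalized` are hypothetical names for this example, not OpenCV API. Under that assumption the principal point (cx, cy) already plays the role of the image center, so no extra centering or y-flip is applied.

```cpp
#include <array>
#include <cassert>

// Hypothetical container for the entries of K (assumes zero skew).
struct Intrinsics {
    double fx, fy;  // K(0,0), K(1,1): focal lengths in pixels
    double cx, cy;  // K(0,2), K(1,2): principal point in pixels
};

// Apply K^-1 to the homogeneous pixel (u, v, 1) and return the normalized
// camera coordinates (x, y); the third component stays 1.
// (u, v) must be in the same pixel frame in which K was calibrated.
std::array<double, 2> pixelToNormalized(const Intrinsics& K, double u, double v) {
    assert(K.fx != 0.0 && K.fy != 0.0);
    return {(u - K.cx) / K.fx, (v - K.cy) / K.fy};
}
```

OpenCV's `cv::undistortPoints` performs the same mapping (and additionally removes lens distortion) when given K and the distortion coefficients, which may be preferable once distortion matters.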

From the point correspondences, I computed the fundamental matrix with RANSAC in OpenCV:

$$
F = \begin{bmatrix} 0 & 0 & -0.0014 \\ 0 & 0 & 0.0028 \\ 0.00149 & -0.00572 & 1 \end{bmatrix}
$$

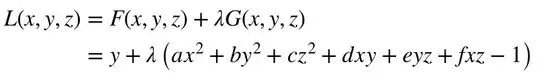
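One way to sanity-check F before going further is to evaluate the epipolar constraint x2^T F x1 on the matched points; for inliers it should be close to zero. A minimal plain-C++ sketch (`Mat3` and `epipolarResidual` are names made up for this example), assuming the points are given in the same coordinate frame that was used to estimate F. As a reference case: for a camera translated purely along x with identity intrinsics, F is proportional to [t]x with t = (1, 0, 0), so the residual reduces to v1 - v2 and true matches lie on the same image row.

```cpp
#include <array>
#include <cassert>

using Mat3 = std::array<std::array<double, 3>, 3>;

// Algebraic epipolar residual x2^T * F * x1 for one match, with both points
// given as (u, v) and lifted to homogeneous (u, v, 1). Large magnitudes point
// to a wrong F or to points in a different frame than the one used for F.
double epipolarResidual(const Mat3& F, const std::array<double, 2>& p1,
                        const std::array<double, 2>& p2) {
    const std::array<double, 3> x1{p1[0], p1[1], 1.0};
    const std::array<double, 3> x2{p2[0], p2[1], 1.0};
    double r = 0.0;
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            r += x2[i] * F[i][j] * x1[j];
    return r;
}
```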

I obtained the essential matrix as:

    // E = K^T * F * K (camera_Intrinsic is K, f is the fundamental matrix)
    E = (camera_Intrinsic.t())*f*camera_Intrinsic;

Resulting E:

$$
E = \begin{bmatrix} 0.0094 & 36.290 & 1.507 \\ -37.2245 & -0.6073 & 14.71 \\ -1.3578 & -23.545 & -0.442 \end{bmatrix}
$$
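Written out on plain arrays, the same product can be checked by hand. A minimal sketch (`Mat3`, `multiply`, `transpose` and `essentialFromFundamental` are illustrative names, not OpenCV API). The formula only holds when K describes the same pixel frame as the points F was estimated from, so if the keypoints were re-centered or flipped before estimating F, K has to be expressed in that re-centered frame as well.

```cpp
#include <array>
#include <cassert>

using Mat3 = std::array<std::array<double, 3>, 3>;

Mat3 multiply(const Mat3& A, const Mat3& B) {
    Mat3 C{};  // zero-initialized accumulator
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                C[i][j] += A[i][k] * B[k][j];
    return C;
}

Mat3 transpose(const Mat3& A) {
    Mat3 T{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            T[i][j] = A[j][i];
    return T;
}

// E = K^T * F * K. Valid only when F was estimated from points expressed in
// the same pixel frame (origin and axis directions) that K describes.
Mat3 essentialFromFundamental(const Mat3& F, const Mat3& K) {
    return multiply(multiply(transpose(K), F), K);
}
```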

SVD of E:

    E.convertTo(E, CV_32F);
    Mat W = (Mat_<float>(3, 3) << 0, -1, 0, 1, 0, 0, 0, 0, 1);
    Mat Z = (Mat_<float>(3, 3) << 0, 1, 0, -1, 0, 0, 0, 0, 0);  // not used below
    SVD decomp = SVD(E);
    Mat U = decomp.u;
    Mat Lambda = decomp.w;  // singular values as a 3x1 column, not a diagonal matrix
    Mat Vt = decomp.vt;

New essential matrix, with the singular values forced to (1, 1, 0) to satisfy the essential-matrix constraint:

    Mat diag = (Mat_<float>(3, 3) << 1, 0, 0, 0, 1, 0, 0, 0, 0);
    Mat new_E = U*diag*Vt;
    SVD new_decomp = SVD(new_E);
    Mat new_U = new_decomp.u;
    Mat new_Lambda = new_decomp.w;
    Mat new_Vt = new_decomp.vt;

Rotation from SVD:

    Mat R1 = new_U*W*new_Vt;
    Mat R2 = new_U*W.t()*new_Vt;

Translation from SVD:

    Mat T1 = (Mat_<float>(3, 1) << new_U.at<float>(0, 2), new_U.at<float>(1, 2), new_U.at<float>(2, 2));
    Mat T2 = -1 * T1;
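One detail the code above does not handle: U and V^T returned by an SVD can each have determinant -1, and if exactly one of them does, U W V^T has determinant -1 and is a reflection rather than a rotation. A common fix is to negate U (or V^T) when its determinant is negative, which is allowed because E is only defined up to scale and sign. The sketch below shows that step on plain arrays, assuming U and V^T have already been computed (for example copied out of `cv::SVD`); `PoseCandidates` and `decomposeEssential` are hypothetical names. The four candidates are then (R1, t), (R1, -t), (R2, t) and (R2, -t).

```cpp
#include <array>
#include <cassert>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

Mat3 multiply(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

Mat3 transpose(const Mat3& A) {
    Mat3 T{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) T[i][j] = A[j][i];
    return T;
}

double det(const Mat3& A) {
    return A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
         - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
         + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]);
}

struct PoseCandidates {
    Mat3 R1, R2;  // U W V^T and U W^T V^T
    Vec3 t;       // third column of U (unit length); -t is the other choice
};

// U and Vt are the SVD factors of E (E = U * diag(s1, s2, s3) * Vt).
PoseCandidates decomposeEssential(Mat3 U, Mat3 Vt) {
    // Negating a 3x3 matrix flips the sign of its determinant; this only
    // changes E by a sign, which the epipolar constraint does not care about.
    auto negate = [](Mat3& M) { for (auto& row : M) for (double& v : row) v = -v; };
    if (det(U) < 0) negate(U);
    if (det(Vt) < 0) negate(Vt);

    const Mat3 W{{{0, -1, 0}, {1, 0, 0}, {0, 0, 1}}};
    PoseCandidates c;
    c.R1 = multiply(multiply(U, W), Vt);
    c.R2 = multiply(multiply(U, transpose(W)), Vt);
    c.t = {U[0][2], U[1][2], U[2][2]};
    return c;
}
```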

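The rotation matrices I get are:

$$
R_1 = \begin{bmatrix} -0.58 & -0.042 & 0.813 \\ -0.020 & -0.9975 & -0.066 \\ 0.81 & -0.054 & 0.578 \end{bmatrix},
\qquad
R_2 = \begin{bmatrix} 0.98 & 0.0002 & 0.81 \\ -0.02 & -0.99 & -0.066 \\ 0.81 & -0.054 & 0.57 \end{bmatrix}
$$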
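A quick check on any candidate is whether it is a proper rotation, i.e. R^T R = I and det R = +1. A minimal sketch with an illustrative `isRotation` helper; the tolerance is an assumption and has to be loose (around 1e-2) for values rounded to two or three digits, as printed above. As printed, R2 does not pass even that loose check: its first column (0.98, -0.02, 0.81) has a squared norm of about 1.62, so either the printout was mis-copied or something went wrong when computing R2.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;

// True if R is numerically a proper rotation: its columns are orthonormal
// (R^T R = I within tol) and det(R) = +1 within tol.
bool isRotation(const Mat3& R, double tol) {
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            double dot = 0.0;  // (R^T R)(i, j) = column i . column j
            for (int k = 0; k < 3; ++k) dot += R[k][i] * R[k][j];
            if (std::abs(dot - (i == j ? 1.0 : 0.0)) > tol) return false;
        }
    const double det = R[0][0] * (R[1][1] * R[2][2] - R[1][2] * R[2][1])
                     - R[0][1] * (R[1][0] * R[2][2] - R[1][2] * R[2][0])
                     + R[0][2] * (R[1][0] * R[2][1] - R[1][1] * R[2][0]);
    return std::abs(det - 1.0) <= tol;
}
```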

The translation vectors are:

$$
T_1 = \begin{bmatrix} 0.543 \\ -0.030 \\ 0.838 \end{bmatrix},
\qquad
T_2 = \begin{bmatrix} -0.543 \\ 0.03 \\ -0.83 \end{bmatrix}
$$
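Translation recovered from E is only a direction: E = [t]x R changes only by a scale factor when t is scaled, so the SVD returns a unit-length t and cannot distinguish t from -t. To see what E should look like for the motion you expect, you can build it from a known pose. A minimal sketch, assuming P2 = [R | t] relative to P1 = [I | 0] as in the question; `skew` and `essentialFromPose` are illustrative names. For a pure x-translation with R = I, E reduces to [t]x with t = (1, 0, 0).

```cpp
#include <array>
#include <cassert>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

// Cross-product matrix: skew(t) * v == t x v for any vector v.
Mat3 skew(const Vec3& t) {
    return Mat3{{{0.0, -t[2], t[1]},
                 {t[2], 0.0, -t[0]},
                 {-t[1], t[0], 0.0}}};
}

// E = [t]x * R for camera 2 = [R | t] relative to camera 1 = [I | 0].
Mat3 essentialFromPose(const Mat3& R, const Vec3& t) {
    const Mat3 S = skew(t);
    Mat3 E{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                E[i][j] += S[i][k] * R[k][j];
    return E;
}
```

Comparing such an expected E with the estimated one only makes sense up to an overall scale and sign.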

Please point out where the mistake is.

These four candidate P2 = [R|T] matrices (with P1 = [I|0]) all give incorrect triangulated models.
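Only one of the four [R | t] combinations places the triangulated points in front of both cameras; the others put them behind one or both. Choosing among them therefore needs a depth (cheirality) test, ideally voted over many matches. A minimal sketch under stated assumptions: P1 = [I | 0], P2 = [R | t], and x1, x2 are normalized coordinates (K^-1 already applied, third component 1). The depths come from a small least-squares solve of z2 * x2 = z1 * R * x1 + t rather than from `cv::triangulatePoints`; `pointDepths` and `inFrontOfBoth` are hypothetical names. In OpenCV 3.0 and later, `cv::recoverPose` performs this selection internally from E and the point matches.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Depths (z1, z2) of one match such that z2 * x2 ~= z1 * R * x1 + t, solved
// in the least-squares sense through the 2x2 normal equations.
// x1, x2: normalized homogeneous coordinates (x, y, 1) in camera 1 and 2.
std::array<double, 2> pointDepths(const Mat3& R, const Vec3& t,
                                  const Vec3& x1, const Vec3& x2) {
    Vec3 a{};  // a = R * x1 (ray of camera 1 expressed in camera 2's frame)
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) a[i] += R[i][j] * x1[j];
    const Vec3 b{-x2[0], -x2[1], -x2[2]};  // system: z1 * a + z2 * b = -t

    const double aa = dot(a, a), ab = dot(a, b), bb = dot(b, b);
    const double at = dot(a, t), bt = dot(b, t);
    const double d = aa * bb - ab * ab;  // ~0 if the two rays are parallel
    assert(std::abs(d) > 1e-12 && "rays are (nearly) parallel; depth undefined");
    return {(-at * bb + ab * bt) / d, (-aa * bt + ab * at) / d};
}

// Cheirality test for one match: positive depth in both cameras.
bool inFrontOfBoth(const Mat3& R, const Vec3& t, const Vec3& x1, const Vec3& x2) {
    const auto z = pointDepths(R, t, x1, x2);
    return z[0] > 0.0 && z[1] > 0.0;
}
```

Running `inFrontOfBoth` for each of the four candidates over the inlier matches and keeping the candidate with the most passing points is one way to pick the pose.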

I also think the translation is wrong: the camera moved only along x, so T should have no z component.

When I tried image1 = image2, I got T = [0, 0, 1]. What does Tz = 1 mean here, when there is no z shift because both images are the same?
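Because t is recovered only as a unit direction with an arbitrary sign, a more useful comparison for an x-only motion is the angle between the estimated t and (1, 0, 0), ignoring sign, rather than the raw Tz value. When image1 = image2 the true translation is zero, so the direction of t is undefined; in addition, every skew-symmetric F satisfies x^T F x = 0, so identical point sets do not pin down F at all. Whatever unit vector the decomposition returns in that case, including [0, 0, 1], reflects the degenerate input rather than a camera motion. A minimal sketch of the angle comparison; `directionErrorDeg` is an illustrative name.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

// Angle in degrees between an estimated translation direction and an
// expected one, ignoring scale and sign (t and -t count as the same axis).
double directionErrorDeg(const Vec3& t, const Vec3& expected) {
    const double dot = t[0] * expected[0] + t[1] * expected[1] + t[2] * expected[2];
    const double nt = std::sqrt(t[0] * t[0] + t[1] * t[1] + t[2] * t[2]);
    const double ne = std::sqrt(expected[0] * expected[0] + expected[1] * expected[1] +
                                expected[2] * expected[2]);
    assert(nt > 0.0 && ne > 0.0);
    // Clamp so rounding slightly above 1 cannot make acos return NaN.
    const double c = std::min(1.0, std::abs(dot) / (nt * ne));
    const double pi = std::acos(-1.0);
    return std::acos(c) * 180.0 / pi;
}
```

For the T1 printed above, `directionErrorDeg({0.543, -0.030, 0.838}, {1.0, 0.0, 0.0})` comes out at roughly 57 degrees.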

Finally, should I express my keypoint coordinates relative to the image center, or relative to the principal point obtained from calibration?