Is there any relationship between blockIdx and the order in which thread blocks are executed on the GPU device?

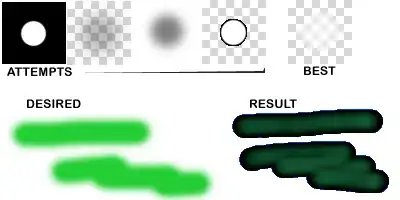

My motivation is that I have a kernel in which multiple blocks read from the same location in global memory, and I would like those blocks to run concurrently so that they benefit from L2 cache hits. When deciding how to organize these blocks into a grid, is it safe to say that blockIdx.x=0 is more likely to run concurrently with blockIdx.x=1 than with blockIdx.x=200? If so, should I try to assign consecutive indices to blocks that read from the same location in global memory?

To be clear, I'm not asking about inter-block dependencies (as in this question); the thread blocks are completely independent as far as program correctness is concerned. I'm already using shared memory to broadcast data within a block, and I can't make the blocks any larger.

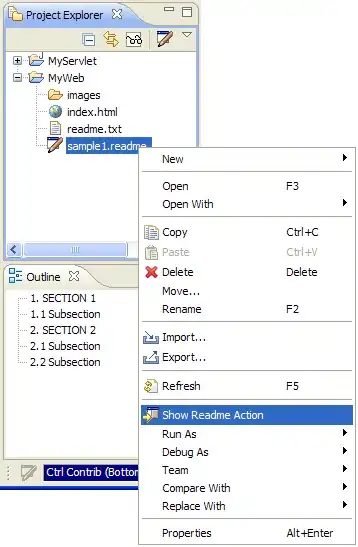

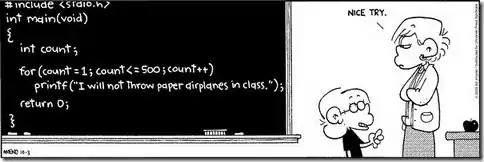

EDIT: Again, I am well aware that

> Thread blocks are required to execute independently: It must be possible to execute them in any order, in parallel or in series.

and the blocks are fully independent: they can run in any order and produce the same output. I am only asking whether the order in which I arrange the blocks into a grid influences which blocks end up running concurrently, because that affects performance through the L2 cache hit rate.
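One way to look at this empirically is to have each block record which SM it ran on and when it started and finished, then compare blocks with nearby indices. The following is only a minimal sketch under some assumptions: `BlockTrace`, `trace_blocks`, `read_smid`, and `read_globaltimer` are hypothetical names made up for illustration, the loop is stand-in work rather than my real kernel, and the `%globaltimer` PTX register can have coarse resolution on some devices. Whatever it prints describes one run on one device and driver; it is not a guarantee made by the programming model.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical per-block record used only by this sketch.
struct BlockTrace {
    unsigned int smid;          // SM the block was resident on
    unsigned long long start;   // %globaltimer value (ns) when the block began
    unsigned long long stop;    // %globaltimer value (ns) when the block finished
};

// Read the PTX special register holding the current SM id.
__device__ unsigned int read_smid() {
    unsigned int id;
    asm volatile("mov.u32 %0, %%smid;" : "=r"(id));
    return id;
}

// Read the device-wide nanosecond timer (comparable across SMs,
// unlike clock64(), which is a per-SM counter).
__device__ unsigned long long read_globaltimer() {
    unsigned long long t;
    asm volatile("mov.u64 %0, %%globaltimer;" : "=l"(t));
    return t;
}

__global__ void trace_blocks(BlockTrace* trace, float* sink) {
    unsigned long long t0 = read_globaltimer();

    // Stand-in work so that each block stays resident for a measurable time.
    float acc = static_cast<float>(threadIdx.x);
    for (int i = 0; i < 10000; ++i) acc = acc * 1.0001f + 0.5f;
    sink[blockIdx.x * blockDim.x + threadIdx.x] = acc;

    __syncthreads();
    if (threadIdx.x == 0) {
        trace[blockIdx.x].smid  = read_smid();
        trace[blockIdx.x].start = t0;
        trace[blockIdx.x].stop  = read_globaltimer();
    }
}

int main() {
    const int numBlocks = 256;        // arbitrary sizes for the experiment
    const int threadsPerBlock = 128;

    BlockTrace* dTrace = nullptr;
    float* dSink = nullptr;
    cudaMalloc(&dTrace, numBlocks * sizeof(BlockTrace));
    cudaMalloc(&dSink, numBlocks * threadsPerBlock * sizeof(float));

    trace_blocks<<<numBlocks, threadsPerBlock>>>(dTrace, dSink);
    cudaError_t err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        fprintf(stderr, "kernel failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    std::vector<BlockTrace> h(numBlocks);
    cudaMemcpy(h.data(), dTrace, numBlocks * sizeof(BlockTrace),
               cudaMemcpyDeviceToHost);

    // Report times relative to the earliest block start.
    unsigned long long base = h[0].start;
    for (const BlockTrace& r : h) if (r.start < base) base = r.start;

    for (int b = 0; b < numBlocks; ++b) {
        printf("block %4d  sm %3u  start %10llu ns  stop %10llu ns\n",
               b, h[b].smid, h[b].start - base, h[b].stop - base);
    }

    cudaFree(dTrace);
    cudaFree(dSink);
    return 0;
}
```

Two blocks were resident at the same time if their `[start, stop]` intervals overlap, so sorting the output by `start` shows whether blocks with consecutive `blockIdx.x` tended to be launched together in that particular run.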