I am trying to implement least squares:

I have: $y=\theta\omega$

The least-squares solution is $\omega=(\theta^{T}\theta)^{-1}\theta^{T}y$.
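For reference, this formula comes from minimizing the squared residual. Setting the gradient to zero gives the normal equations $\theta^{T}\theta\,\omega=\theta^{T}y$, and the explicit inverse only appears in the last step:

```latex
\begin{aligned}
J(\omega) &= \lVert y - \theta\omega \rVert_2^2 = (y - \theta\omega)^{T}(y - \theta\omega) \\
\nabla_\omega J &= -2\,\theta^{T}(y - \theta\omega) = 0 \\
\theta^{T}\theta\,\omega &= \theta^{T}y \\
\omega &= (\theta^{T}\theta)^{-1}\theta^{T}y \quad \text{(assuming } \theta^{T}\theta \text{ is invertible)}
\end{aligned}
```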

I tried:

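```python
import numpy as np

def least_squares1(y, tx):
    """Calculate the least-squares solution."""
    # tx plays the role of theta and w the role of omega.
    # Normal equations with an explicit inverse: w = (tx^T tx)^{-1} tx^T y
    w = np.dot(np.linalg.inv(np.dot(tx.T, tx)), np.dot(tx.T, y))
    return w
```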

The problem is that this method quickly becomes unstable (for small problems it's okay).

I noticed this when I compared the result with this least-squares calculation:

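```python
import numpy as np

def least_squares2(y, tx):
    """Calculate the least-squares solution."""
    a = tx.T.dot(tx)  # Gram matrix tx^T tx
    b = tx.T.dot(y)   # right-hand side tx^T y
    return np.linalg.solve(a, b)  # solve a w = b without forming an inverse
```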
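One way to put a number on "quickly unstable" is the condition number of the matrix that both functions build, `tx.T @ tx`. The sketch below is only an illustration under assumptions: the design matrix is the monomial basis from the question built with `np.vander`, and the sample points (50 evenly spaced values in [0, 1]) are hypothetical, since the original data are not shown. As a rough rule of thumb, a double-precision solve loses about log10(cond) significant digits, and in exact arithmetic cond(tx.T @ tx) is the square of cond(tx).

```python
import numpy as np

def condition_numbers(x, degree):
    """Return (cond(tx), cond(tx.T @ tx)) for the basis [1, x, ..., x**degree]."""
    tx = np.vander(x, degree + 1, increasing=True)  # one column per power of x
    return np.linalg.cond(tx), np.linalg.cond(tx.T @ tx)

# Hypothetical sample points; the data from the question are not shown.
x = np.linspace(0, 1, 50)

for degree in (3, 6, 9, 12):
    cond_tx, cond_gram = condition_numbers(x, degree)
    # In exact arithmetic, cond(tx.T @ tx) == cond(tx)**2 in the 2-norm.
    print(f"degree {degree:2d}: cond(tx) = {cond_tx:.1e}, cond(tx.T @ tx) = {cond_gram:.1e}")
```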

To compare both methods, I fitted data with a polynomial of degree 12, i.e. the basis $[1, x, x^2, x^3, x^4, \dots, x^{12}]$:

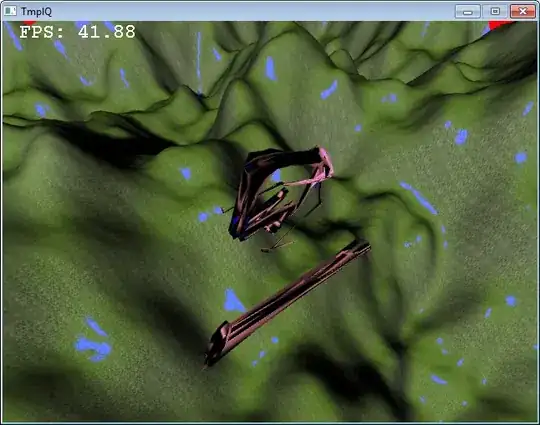

First method:

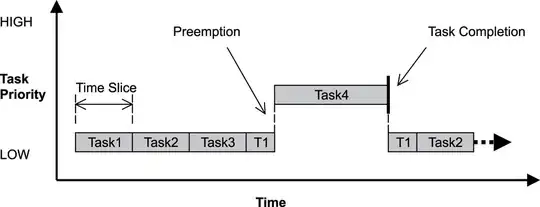

Second method:

Do you know why the first method diverges for high-degree polynomials?

P.S. I only added `import numpy as np` for your convenience, in case you want to test the functions.
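If you want to reproduce the comparison, here is a minimal harness. It is a sketch under stated assumptions: the data (a noisy sine on [0, 1]) are hypothetical because the fitted data are not shown, the two functions are restated with `@` in place of `np.dot`, and `np.linalg.lstsq` (an SVD-based solver that works on `tx` directly and never forms `tx.T @ tx`) is added only as a reference point, not as part of either original method.

```python
import numpy as np

def least_squares1(y, tx):
    """First method: normal equations with an explicit inverse."""
    return np.linalg.inv(tx.T @ tx) @ (tx.T @ y)

def least_squares2(y, tx):
    """Second method: normal equations solved with np.linalg.solve."""
    return np.linalg.solve(tx.T @ tx, tx.T @ y)

def least_squares_lstsq(y, tx):
    """Reference: SVD-based solver applied to tx directly (no tx.T @ tx)."""
    w, *_ = np.linalg.lstsq(tx, y, rcond=None)
    return w

# Hypothetical data: the question does not show the data that was fitted.
rng = np.random.default_rng(0)
x = np.linspace(0, 1, 50)
y = np.sin(2 * np.pi * x) + 0.1 * rng.standard_normal(x.size)

tx = np.vander(x, 13, increasing=True)  # columns [1, x, x^2, ..., x^12]

residuals = {}
for name, solver in [("inv", least_squares1),
                     ("solve", least_squares2),
                     ("lstsq", least_squares_lstsq)]:
    try:
        w = solver(y, tx)
        residuals[name] = np.linalg.norm(tx @ w - y)
    except np.linalg.LinAlgError as err:
        # inv/solve raise this if the LU factorization hits an exactly zero pivot
        residuals[name] = float("nan")
        print(f"{name}: {err}")
    print(f"{name:6s} residual norm = {residuals[name]:.4e}")
```

Comparing the residual norms (and plotting `tx @ w` against `y`) for each solver shows how much of the difference comes from forming and inverting `tx.T @ tx`.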