Here some hacky demo, trying to learn something from this answer (SO's clustering expert).

I changed some things and won't give any guarantees:

- labeling-method

- important: i'm deviating from his underlying theory here (his approach should be superior)!

- i'm just labeling according to the nearest-center (like in kmeans)

- argrelextrema's comparator (needed for your data which has not unique values!)

- using heuristic bandwith-selection (by not setting a constant!)

This code uses kernel-density-estimation to obtain a 1d-clustering which chooses k automatically. Now it sounds, you wan't to always use k=2, but this here is more general.

So using it for your data:

import numpy as np

from scipy.signal import argrelextrema

from scipy.spatial.distance import cdist

from sklearn.neighbors.kde import KernelDensity

import matplotlib.pyplot as plt

import matplotlib.cm as cm

x = np.array([[ 17.7804203 ],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 17.7335453 ],

[ 17.72670937],

[ 17.72670937],

[ 17.75502968],

[ 17.81459999],

[ 17.89565468],

[ 17.98159218],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 0.08901367],

[ 17.9210453 ],])

# [5.4],

# [5.41],

# [5.3],

# [5.35]])

np.random.shuffle(x) # just for demo

kde = KernelDensity(kernel='gaussian').fit(x) # bandwith heuristic

s = np.linspace(np.amin(x), np.amax(x))

e = kde.score_samples(s.reshape(-1,1))

ma = argrelextrema(e, np.greater_equal )[0]

s_ma = np.array([s[ma[i]] for i in range(len(ma))]) # cluster centers

n_clusters = len(s_ma) # n_clusters

# class labeling

Y = cdist(x, s_ma[np.newaxis].T)

labels = np.empty(len(x), dtype=int)

for x_ind in range(len(x)):

labels[x_ind] = np.argmin(Y[x_ind, :])

# plot classification

xs = np.arange(len(x))

colors = cm.rainbow(np.linspace(0, 1, n_clusters))

for label in range(n_clusters):

inds = np.where(labels == label)[0]

plt.scatter(xs[inds], x[inds], color=colors[label], s=40)

plt.show()

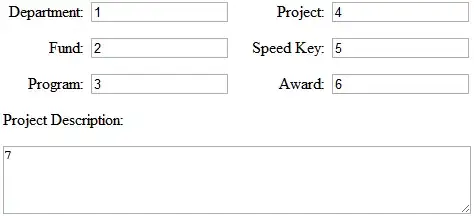

output this classification (remember: i permuted the x-values):

Now let's add 4 new points around 5 (as i'm lazy and added them to the end, i used the mentioned permutation). Just uncomment those lines in the code.

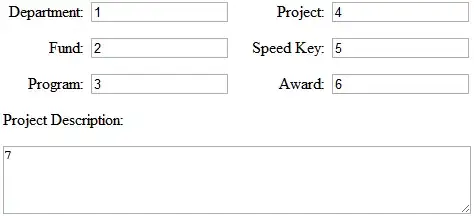

Output:

So in the first case, we obtained two clusters, now we got three (i swear: n_clusters=3 although my matplotlib code changes the colors somehow...)!

Feel free to play around with this. You might use my approach in the comment, using the labels to extract classes, calculate the variance and throw away the class with the lowest one. But that's depending on your task of course.

For example this code added at the end:

# Calculate variance for each class

variances = np.array([np.var(x[np.where(labels == i)[0]]) for i in

range(n_clusters)])

print(np.hstack([variances[np.newaxis].T, s_ma[np.newaxis].T]))

would output:

[[ 1.92592994e-34 8.90136700e-02] # variance | cluster_mean

[ 1.92500000e-03 5.20117896e+00]

[ 8.05793565e-03 1.79815922e+01]]

which in your case might be interpreted as: throw class 0 away (minimum variance or some thresholding-check: remove all with var < x).