I have a data frame with 5 variables: Lot / Wafer / Serial Number / Voltage / Amplification. In this data frame there are 1020 subsets grouped by Serial_number. Each subset has a certain number of measurement data points (Amplification against voltage).

I fit the data with

summary(fit2.lme <- lmer(log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 1) | Serial_number),

+ data = APD))

which yields:

Linear mixed model fit by REML ['lmerMod']

Formula: log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 1) | Serial_number)

Data: APD

REML criterion at convergence: 35286.1

Scaled residuals:

Min 1Q Median 3Q Max

-20.7724 -0.2438 -0.1297 0.2434 13.2663

Random effects:

Groups Name Variance Std.Dev. Corr

Serial_number (Intercept) 1.439e-02 0.1199

poly(Voltage, 1) 2.042e+03 45.1908 -0.76

Residual 8.701e-02 0.2950

Number of obs: 76219, groups: Serial_number, 1020

Fixed effects:

Estimate Std. Error t value

(Intercept) 5.944e-02 3.914e-03 15.2

poly(Voltage, 3)1 5.862e+02 1.449e+00 404.5

poly(Voltage, 3)2 -1.714e+02 3.086e-01 -555.4

poly(Voltage, 3)3 4.627e+01 3.067e-01 150.8

Correlation of Fixed Effects:

(Intr) p(V,3)1 p(V,3)2

ply(Vlt,3)1 -0.713

ply(Vlt,3)2 0.015 -0.004

ply(Vlt,3)3 0.004 0.012 0.018

and when I add a higher polynomial in the random effects I get a warning:

> summary(fit3.lme <- lmer(log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 2) | Serial_number),

+ data = APD))

Linear mixed model fit by REML ['lmerMod']

Formula: log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 2) | Serial_number)

Data: APD

REML criterion at convergence: 16285.9

Scaled residuals:

Min 1Q Median 3Q Max

-20.5042 -0.2393 -0.0697 0.3165 13.9634

Random effects:

Groups Name Variance Std.Dev. Corr

Serial_number (Intercept) 1.584e-02 0.1259

poly(Voltage, 2)1 1.777e+03 42.1536 -0.67

poly(Voltage, 2)2 1.579e+03 39.7365 0.87 -0.95

Residual 6.679e-02 0.2584

Number of obs: 76219, groups: Serial_number, 1020

Fixed effects:

Estimate Std. Error t value

(Intercept) 5.858e-02 4.062e-03 14.4

poly(Voltage, 3)1 5.938e+02 1.351e+00 439.5

poly(Voltage, 3)2 -1.744e+02 1.276e+00 -136.7

poly(Voltage, 3)3 5.719e+01 2.842e-01 201.2

Correlation of Fixed Effects:

(Intr) p(V,3)1 p(V,3)2

ply(Vlt,3)1 -0.641

ply(Vlt,3)2 0.825 -0.906

ply(Vlt,3)3 -0.001 0.030 -0.004

convergence code: 1

Model failed to converge with max|grad| = 2.22294 (tol = 0.002, component 1)

Model is nearly unidentifiable: large eigenvalue ratio

- Rescale variables?

Warning messages:

1: In optwrap(optimizer, devfun, getStart(start, rho$lower, rho$pp), :

convergence code 1 from bobyqa: bobyqa -- maximum number of function evaluations exceeded

2: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

Model failed to converge with max|grad| = 2.22294 (tol = 0.002, component 1)

3: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

Model is nearly unidentifiable: large eigenvalue ratio

- Rescale variables?

The data is as following (can provide the complete data of course, if desired). It includes 77479 observables of 6 variables:

'data.frame': 77479 obs. of 6 variables:

$ Serial_number: num 9.12e+08 9.12e+08 9.12e+08 9.12e+08 9.12e+08 ...

$ Lot : int 9 9 9 9 9 9 9 9 9 9 ...

$ Wafer : int 912 912 912 912 912 912 912 912 912 912 ...

$ Amplification: num 1 1 1.01 1.01 1.01 ...

$ Voltage : num 25 30 34.9 44.9 49.9 ...

and the data itself looks like:

Serial_number Lot Wafer Amplification Voltage

1 912009913 9 912 1.00252 24.9681

2 912009913 9 912 1.00452 29.9591

3 912009913 9 912 1.00537 34.9494

(...)

73 912009913 9 912 918.112 375.9850

74 912009913 9 912 1083.74 377.9990

75 912009897 9 912 1.00324 19.9895

76 912009897 9 912 1.00449 29.9777

(...)

What does the warnings mean? According to the anova the fit3.lme model describes the data better:

> anova(fit3.lme, fit2.lme)

refitting model(s) with ML (instead of REML)

Data: APD

Models:

fit2.lme: log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 1) |

fit2.lme: Serial_number)

fit3.lme: log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 2) |

fit3.lme: Serial_number)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

fit2.lme 8 35294 35368 -17638.9 35278

fit3.lme 11 16264 16366 -8121.1 16242 19036 3 < 2.2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Warning message:

In optwrap(optimizer, devfun, x@theta, lower = x@lower, calc.derivs = TRUE, :

convergence code 1 from bobyqa: bobyqa -- maximum number of function evaluations exceeded

Therefore I would like to use that model but I stuck in the warning.

update:

center and scale predictors

ss.CS<- transform(APD, Voltage=scale(Voltage))

> fit31.lme<- update(fit3.lme, data=ss.CS)

Error in poly(dots[[i]], degree, raw = raw, simple = raw) :

'degree' must be less than number of unique points

Also for the other variable (don't know for which it makes sense)

> ss.CS<- transform(APD, Amplitude=scale(Amplitude))

Error in scale(Amplitude) : object 'Amplitude' not found

> ss.CS<- transform(APD, Amplification=scale(Amplification))

> fit31.lme<- update(fit3.lme, data=ss.CS)

Warning messages:

1: In log(Amplification) : NaNs produced

2: In log(log(Amplification)) : NaNs produced

3: In log(Amplification) : NaNs produced

4: In log(log(Amplification)) : NaNs produced

5: In log(Amplification) : NaNs produced

6: In log(log(Amplification)) : NaNs produced

7: Some predictor variables are on very different scales: consider rescaling

check singularity

> diag.vals<- getME(fit3.lme, "theta")[getME(fit3.lme, "lower")==0]

> any(diag.vals<- 1e-6)

[1] TRUE

Warning message:

In any(diag.vals <- 1e-06) : coercing argument of type 'double' to logical

compute gradient and Hessian with Richardson extrapolation

> devfun<- update(fit3.lme, devFunOnly=TRUE)

> if(isLMM(fit3.lme)){

+ pars<- getME(fit3.lme, "theta")

+ } else {

+ pars<- getME(fit3.lme, c("theta", "fixef"))

+ }

> if (require("numDeriv")) {

+ cat("hess:\n"); print(hess <- hessian(devfun, unlist(pars)))

+ cat("grad:\n"); print(grad <- grad(devfun, unlist(pars)))

+ cat("scaled gradient:\n")

+ print(scgrad <- solve(chol(hess), grad))

+ }

Loading required package: numDeriv

hess:

[,1] [,2] [,3] [,4] [,5] [,6]

[1,] 39137.840764 -56.189442277 -1.348127e+02 3.789427141 25.456612941 -3.806942811

[2,] -56.189442 0.508077776 6.283795e-01 -0.068882737 -0.056159369 0.003228274

[3,] -134.812704 0.628379462 1.061584e+00 -0.079620905 -0.152816413 0.007457255

[4,] 3.789427 -0.068882737 -7.962090e-02 0.516054976 0.534346634 0.001513168

[5,] 25.456613 -0.056159369 -1.528164e-01 0.534346634 0.901191745 -0.002344407

[6,] -3.806943 0.003228274 7.457255e-03 0.001513168 -0.002344407 0.179283416

grad:

[1] -22.9114985 2.2229416 -0.2959238 0.6790044 -0.2343368 -0.4020556

scaled gradient:

[1] -0.1123624 4.4764140 -0.8777938 1.3980054 -0.4223921 -0.9508207

> fit3.lme@optinfo$derivs

$gradient

[1] -22.9118920 2.2229424 -0.2959264 0.6790037 -0.2343360 -0.4020605

$Hessian

[,1] [,2] [,3] [,4] [,5] [,6]

[1,] 39137.915527 -56.20745850 -134.87176514 3.74780273 25.47540283 -3.79016113

[2,] -56.207458 0.44262695 0.61462402 -0.04736328 -0.06585693 0.02130127

[3,] -134.871765 0.61462402 1.04296875 -0.10467529 -0.23223877 0.05438232

[4,] 3.747803 -0.04736328 -0.10467529 0.52026367 0.50909424 -0.02130127

[5,] 25.475403 -0.06585693 -0.23223877 0.50909424 0.68481445 -0.02044678

[6,] -3.790161 0.02130127 0.05438232 -0.02130127 -0.02044678 0.07617188

4. restart the fit from the original value (or a slightly perturbed value):

> fit3.lme.restart <- update(fit3.lme, start=pars)

> summary(fit3.lme.restart)

Linear mixed model fit by REML ['lmerMod']

Formula: log(log(Amplification)) ~ poly(Voltage, 3) + (poly(Voltage, 2) | Serial_number)

Data: APD

REML criterion at convergence: 16250.3

Scaled residuals:

Min 1Q Median 3Q Max

-20.4868 -0.2404 -0.0697 0.3166 13.9464

Random effects:

Groups Name Variance Std.Dev. Corr

Serial_number (Intercept) 1.823e-02 0.1350

poly(Voltage, 2)1 2.124e+03 46.0903 -0.77

poly(Voltage, 2)2 1.937e+03 44.0164 0.90 -0.96

Residual 6.668e-02 0.2582

Number of obs: 76219, groups: Serial_number, 1020

Fixed effects:

Estimate Std. Error t value

(Intercept) 0.05823 0.00434 13.4

poly(Voltage, 3)1 593.83396 1.47201 403.4

poly(Voltage, 3)2 -174.61257 1.40711 -124.1

poly(Voltage, 3)3 57.15901 0.28427 201.1

Correlation of Fixed Effects:

(Intr) p(V,3)1 p(V,3)2

ply(Vlt,3)1 -0.735

ply(Vlt,3)2 0.868 -0.927

ply(Vlt,3)3 -0.001 0.028 -0.003

5. try all available optimizers

> source(system.file("utils", "allFit.R", package="lme4"))

Loading required package: optimx

Loading required package: dfoptim

> fit3.lme.all <- allFit(fit3.lme)

bobyqa : [OK]

Nelder_Mead : [OK]

nlminbw : [OK]

nmkbw : [OK]

optimx.L-BFGS-B : [OK]

nloptwrap.NLOPT_LN_NELDERMEAD : [OK]

nloptwrap.NLOPT_LN_BOBYQA : [OK]

Warning messages:

1: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

unable to evaluate scaled gradient

2: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

Model failed to converge: degenerate Hessian with 1 negative eigenvalues

3: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

unable to evaluate scaled gradient

4: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

Model failed to converge: degenerate Hessian with 1 negative eigenvalues

5: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

unable to evaluate scaled gradient

6: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

Model failed to converge: degenerate Hessian with 1 negative eigenvalues

7: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

unable to evaluate scaled gradient

8: In checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

Model failed to converge: degenerate Hessian with 1 negative eigenvalues

ss <- summary(fit3.lme.all)

> ss$ fixef ## extract fixed effects

(Intercept) poly(Voltage, 3)1 poly(Voltage, 3)2 poly(Voltage, 3)3

bobyqa 0.05822789 593.8340 -174.6126 57.15901

Nelder_Mead 0.05822787 593.8340 -174.6126 57.15902

nlminbw 0.05822787 593.8340 -174.6126 57.15902

nmkbw 0.05841966 593.7804 -174.4999 57.17107

optimx.L-BFGS-B 0.05822845 593.8336 -174.6116 57.16183

nloptwrap.NLOPT_LN_NELDERMEAD 0.05823870 593.8330 -174.6076 57.16039

nloptwrap.NLOPT_LN_BOBYQA 0.05823870 593.8330 -174.6076 57.16039

> ss$ llik ## log-likelihoods

bobyqa Nelder_Mead nlminbw nmkbw optimx.L-BFGS-B

-8125.125 -8125.125 -8125.125 -8129.827 -8125.204

nloptwrap.NLOPT_LN_NELDERMEAD nloptwrap.NLOPT_LN_BOBYQA

-8125.137

> ss$ sdcor ## SDs and correlations

Serial_number.(Intercept) Serial_number.poly(Voltage, 2)1.(Intercept) Serial_number.poly(Voltage, 2)2.(Intercept)

bobyqa 0.1350049 46.09013 44.01631

Nelder_Mead 0.1350064 46.09104 44.01705

nlminbw 0.1350065 46.09106 44.01707

nmkbw 0.1347214 46.11336 43.81219

optimx.L-BFGS-B 0.1356576 46.32849 44.27171

nloptwrap.NLOPT_LN_NELDERMEAD 0.1347638 45.97995 43.91054

nloptwrap.NLOPT_LN_BOBYQA 0.1347638 45.97995 43.91054

Serial_number.poly(Voltage, 2)1 Serial_number.poly(Voltage, 2)2.poly(Voltage, 2)1 Serial_number.poly(Voltage, 2)2

bobyqa -0.7665898 0.9042387 -0.9608662

Nelder_Mead -0.7665981 0.9042424 -0.9608680

nlminbw -0.7665980 0.9042425 -0.9608680

nmkbw -0.7658163 0.9076551 -0.9649999

optimx.L-BFGS-B -0.7713801 0.9067725 -0.9617129

nloptwrap.NLOPT_LN_NELDERMEAD -0.7645748 0.9034336 -0.9606020

nloptwrap.NLOPT_LN_BOBYQA -0.7645748 0.9034336 -0.9606020

sigma

bobyqa 0.2582156

Nelder_Mead 0.2582156

nlminbw 0.2582156

nmkbw 0.2584714

optimx.L-BFGS-B 0.2582244

nloptwrap.NLOPT_LN_NELDERMEAD 0.2582207

nloptwrap.NLOPT_LN_BOBYQA 0.2582207

> ss$ theta ## Cholesky factors

Serial_number.(Intercept) Serial_number.poly(Voltage, 2)1.(Intercept) Serial_number.poly(Voltage, 2)2.(Intercept)

bobyqa 0.5228377 -136.8323 154.1396

Nelder_Mead 0.5228438 -136.8364 154.1428

nlminbw 0.5228439 -136.8365 154.1429

nmkbw 0.5212237 -136.6278 153.8521

optimx.L-BFGS-B 0.5253478 -138.3947 155.4631

nloptwrap.NLOPT_LN_NELDERMEAD 0.5218936 -136.1436 153.6293

nloptwrap.NLOPT_LN_BOBYQA 0.5218936 -136.1436 153.6293

Serial_number.poly(Voltage, 2)1 Serial_number.poly(Voltage, 2)2.poly(Voltage, 2)1 Serial_number.poly(Voltage, 2)2

bobyqa 114.6181 -71.06063 1.578418e+01

Nelder_Mead 114.6186 -71.06067 1.578354e+01

nlminbw 114.6187 -71.06067 1.578351e+01

nmkbw 114.7270 -71.14411 3.440466e-42

optimx.L-BFGS-B 114.1731 -70.65227 1.527854e+01

nloptwrap.NLOPT_LN_NELDERMEAD 114.7688 -71.19817 1.568481e+01

nloptwrap.NLOPT_LN_BOBYQA 114.7688 -71.19817 1.568481e+01

> ss$ which.OK ## which fits worked

bobyqa Nelder_Mead nlminbw nmkbw optimx.L-BFGS-B

TRUE TRUE TRUE TRUE TRUE

nloptwrap.NLOPT_LN_NELDERMEAD nloptwrap.NLOPT_LN_BOBYQA

TRUE TRUE

Due to users's coment I add the following:

> bam(log(log(Amplification)) ~ s(Voltage) + s(Serial_number, bs="re") + s(Voltage, Serial_number, bs="re"), data=APD, discrete = TRUE)

Family: gaussian

Link function: identity

Formula:

log(log(Amplification)) ~ s(Voltage) + s(Serial_number, bs = "re") +

s(Voltage, Serial_number, bs = "re")

Estimated degrees of freedom:

9 993 987 total = 1990.18

fREML score: -226.8182

> summary(bam(log(log(Amplification)) ~ s(Voltage) + s(Serial_number, bs="re") + s(Voltage, Serial_number, bs="re"), data=APD, discrete = TRUE))

Family: gaussian

Link function: identity

Formula:

log(log(Amplification)) ~ s(Voltage) + s(Serial_number, bs = "re") +

s(Voltage, Serial_number, bs = "re")

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.11500 0.01896 6.066 1.31e-09 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Approximate significance of smooth terms:

edf Ref.df F p-value

s(Voltage) 8.998 9 89229 <2e-16 ***

s(Serial_number) 993.441 1019 55241 <2e-16 ***

s(Voltage,Serial_number) 986.741 1019 36278 <2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

R-sq.(adj) = 0.989 Deviance explained = 99%

fREML = -226.82 Scale est. = 0.051396 n = 76219

On https://uploadfiles.io/n7h9z you can download the r script and data.

Update

(some plots concerning the gam model):

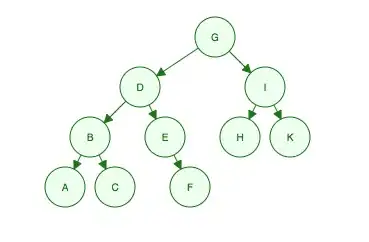

Here are all measurement data points transformed double-logarithmically:

The physical behaviour of the device is at least exponentially and even almost double-exponentially (as I found in a book). By transforming them double-logarithmically the almost behave then linearly. A polynomial of degree described the data already well but a polynomial degree of three did it better, though. I guess this can also be seen on the plot why that.

Some additional plots (I'm not used to GAMs so I just add them):

You can download the data from the link: https://uploadfiles.io/n7h9z