I'd like to avoid mysqldump since that outputs in a form that is only convenient for mysql to read. CSV seems more universal (one file per table is fine). But if there are advantages to mysqldump, I'm all ears. Also, I'd like something I can run from the command line (linux). If that's a mysql script, pointers to how to make such a thing would be helpful.

-

1possible duplicate of [How to output MySQL query results in csv format?](http://stackoverflow.com/questions/356578/how-to-output-mysql-query-results-in-csv-format) – Ben Aug 10 '14 at 15:54

12 Answers

If you can cope with table-at-a-time, and your data is not binary, use the -B option to the mysql command. With this option it'll generate TSV (tab separated) files which can import into Excel, etc, quite easily:

% echo 'SELECT * FROM table' | mysql -B -uxxx -pyyy database

Alternatively, if you've got direct access to the server's file system, use SELECT INTO OUTFILE which can generate real CSV files:

SELECT * INTO OUTFILE 'table.csv'

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'

LINES TERMINATED BY '\n'

FROM table

- 334,560

- 70

- 407

- 495

-

thanks! your second "table" should be "database", right? no option for CSV instead of TSV that you know of? – dreeves Jan 21 '09 at 23:24

-

duh - yes, it should have read 'database'. No, there's no option for CSV, this is the best I know of without using MySQL's built-in 'select into outfile', which _can_ do CSV, but writes the files on the server, not the client. – Alnitak Jan 21 '09 at 23:26

-

-

11Note that when a relative path (or simply a filename) is given, the file will appear in your MYSQL directory, and not your current shell directory (i.e. where `\. file.sql` reads from). As such, depending on your install you will probably find it at `/var/lib/mysql/[db_name]/table.csv` – Mala Jan 25 '16 at 20:09

In MySQL itself, you can specify CSV output like:

SELECT order_id,product_name,qty

FROM orders

INTO OUTFILE '/tmp/orders.csv'

FIELDS TERMINATED BY ','

ENCLOSED BY '"'

LINES TERMINATED BY '\n'

From http://www.tech-recipes.com/rx/1475/save-mysql-query-results-into-a-text-or-csv-file/

- 179,021

- 58

- 319

- 408

-

2I cant seem to find the dumped file on the directory i specified, why is that? – JCm Dec 18 '15 at 06:25

-

You can dump a whole database in one go with mysqldump's --tab option. You supply a directory path and it creates one .sql file with the CREATE TABLE DROP IF EXISTS syntax and a .txt file with the contents, tab separated. To create comma separated files you could use the following:

mysqldump --password --fields-optionally-enclosed-by='"' --fields-terminated-by=',' --tab /tmp/path_to_dump/ database_name

That path needs to be writable by both the mysql user and the user running the command, so for simplicity I recommend chmod 777 /tmp/path_to_dump/ first.

-

1I kept coming back to this answer, it's definitely the best way to export all the tables to a delimited file. – joevallender May 06 '17 at 01:52

-

1

-

As root: `GRANT FILE ON *.* TO 'user1'@'localhost';` - https://stackoverflow.com/a/15014385/1707015. In my case I had to take `/var/lib/mysql-files/` (instead of `/tmp/`), set the user to mysql:mysql and set the rights to 777 - https://stackoverflow.com/a/32737616/1707015. – qräbnö Aug 15 '20 at 13:02

The select into outfile option wouldn't work for me but the below roundabout way of piping tab-delimited file through SED did:

mysql -uusername -ppassword -e "SELECT * from tablename" dbname | sed 's/\t/","/g;s/^/"/;s/$/"/' > /path/to/file/filename.csv

- 331

- 5

- 15

-

1This command resulted in the letter `t` being replaced with `", "` in the output file. Not exactly what I was after. – BrennanR Feb 10 '17 at 15:57

-

Here is the simplest command for it

mysql -h<hostname> -u<username> -p<password> -e 'select * from databaseName.tableNaame' | sed 's/\t/,/g' > output.csv

If there is a comma in the column value then we can generate .tsv instead of .csv with the following command

mysql -h<hostname> -u<username> -p<password> -e 'select * from databaseName.tableNaame' > output.csv

- 9,291

- 3

- 58

- 40

-

For CSV: it works where i just need comma. `mysql -hlocalhost -uroot -e 'select * from databaseName.tableNaame' | sed 's/\t/,/g' > /tmp/output.csv` ` – Oct 23 '19 at 06:51

You can use below script to get the output to csv files. One file per table with headers.

for tn in `mysql --batch --skip-page --skip-column-name --raw -uuser -ppassword -e"show tables from mydb"`

do

mysql -uuser -ppassword mydb -B -e "select * from \`$tn\`;" | sed 's/\t/","/g;s/^/"/;s/$/"/;s/\n//g' > $tn.csv

done

user is your user name, password is the password if you don't want to keep typing the password for each table and mydb is the database name.

Explanation of the script: The first expression in sed, will replace the tabs with "," so you have fields enclosed in double quotes and separated by commas. The second one insert double quote in the beginning and the third one insert double quote at the end. And the final one takes care of the \n.

- 31

- 3

-

This mostly got me where I needed to go but was surprised when the letter "t" was getting replaced with commas: https://stackoverflow.com/a/2610121/8400969 – Michael Roswell May 16 '19 at 22:39

-

And this should say `--skip-column-names` in my version of mysql, as well – Michael Roswell May 16 '19 at 22:42

If you really need a "Backup" then you also need database schema, like table definitions, view definitions, store procedures and so on. A backup of a database isn't just the data.

The value of the mysqldump format for backup is specifically that it is very EASY to use it to restore mysql databases. A backup that isn't easily restored is far less useful. If you are looking for a method to reliably backup mysql data to so you can restore to a mysql server then I think you should stick with the mysqldump tool.

Mysql is free and runs on many different platforms. Setting up a new mysql server that I can restore to is simple. I am not at all worried about not being able to setup mysql so I can do a restore.

I would be far more worried about a custom backup/restore based on a fragile format like csv/tsv failing. Are you sure that all your quotes, commas, or tabs that are in your data would get escaped correctly and then parsed correctly by your restore tool?

If you are looking for a method to extract the data then see several in the other answers.

- 37,543

- 7

- 45

- 61

-

thanks, very helpful! you convinced me. i do have other reasons to want a quick way to get a csv dump via a command line command though. i'm holding out for that for the "accepted answer". (tho i could probably piece it together at this point from the current answers, i guess w/ a mysql script). – dreeves Jan 22 '09 at 04:50

-

I can see lots of value in having both a backup using the native method and also extracts for other purposes. – Zoredache Jan 22 '09 at 08:06

-

mk-parallel-restore can restore a CSV backup created with mk-parallel dump, so you can get the best of both worlds. In fact, mk-parallel-restore does a better job by ensuring any triggers you have defined are restored last. – Paul Dixon Jan 22 '09 at 10:02

If you want to dump the entire db as csv

#!/bin/bash

host=hostname

uname=username

pass=password

port=portnr

db=db_name

s3_url=s3://buckera/db_dump/

DATE=`date +%Y%m%d`

rm -rf $DATE

echo 'show tables' | mysql -B -h${host} -u${uname} -p${pass} -P${port} ${db} > tables.txt

awk 'NR>1' tables.txt > tables_new.txt

while IFS= read -r line

do

mkdir -p $DATE/$line

echo "select * from $line" | mysql -B -h"${host}" -u"${uname}" -p"${pass}" -P"${port}" "${db}" > $DATE/$line/dump.tsv

done < tables_new.txt

touch $DATE/$DATE.fin

rm -rf tables_new.txt tables.txt

- 69

- 8

Check out mk-parallel-dump which is part of the ever-useful maatkit suite of tools. This can dump comma-separated files with the --csv option.

This can do your whole db without specifying individual tables, and you can specify groups of tables in a backupset table.

Note that it also dumps table definitions, views and triggers into separate files. In addition providing a complete backup in a more universally accessible form, it also immediately restorable with mk-parallel-restore

- 295,876

- 54

- 310

- 348

-

This may not be the ideal _backup_ but is completely awesome for SHARING. Thank you! – atroon Nov 07 '11 at 15:25

-

`maatkit` seems to be part of the `percona toolkit` now, but I cannot find corresponding tools. – sjas Jan 23 '17 at 11:26

Two line PowerShell answer:

# Store in variable

$Global:csv = (mysql -uroot -p -hlocalhost -Ddatabase_name -B -e "SELECT * FROM some_table") `

| ConvertFrom-Csv -Delimiter "`t"

# Out to csv

$Global:csv | Export-Csv "C:\temp\file.csv" -NoTypeInformation

Boom-bata-boom

-D = the name of your database

-e = query

-B = tab-delimited

- 7,775

- 7

- 59

- 82

There's a slightly simpler way to get all the tables into tab delimited fast:

#!/bin/bash

tablenames=$(mysql your_database -e "show tables;" -B |sed "1d")

IFS=$'\n'

tables=($tablenames)

for table in ${tables[@]}; do

mysql your_database -e "select * from ${table}" -B > "${table}.tsv"

done

- 2,554

- 4

- 22

- 30

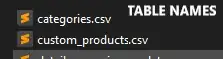

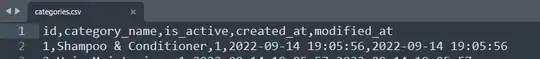

Here's a basic python script that does the work! You can also choose to export only the headers (column names) or headers & data both.

Just change the database credentials and run the script. It will output all the data to the output folder.

To run the script -

- Run: pip install mysql-connector-python

- Change database credentials in the "INPUT" section

- Run: python filename.py

import mysql.connector

from pathlib import Path

import csv

#========INPUT===========

databaseHost=""

databaseUsername=""

databasePassword=""

databaseName=""

outputDirectory="./WITH-DATA/"

exportTableData=True #MAKING THIS FIELD FALSE WILL STORE ONLY THE TABLE HEADERS (COLUMN NAMES) IN THE CSV FILE

#========INPUT END===========

Path(outputDirectory).mkdir(parents=True, exist_ok=True)

mydb = mysql.connector.connect(

host=databaseHost,

user=databaseUsername,

password=databasePassword

)

mycursor = mydb.cursor()

mycursor.execute("USE "+databaseName)

mycursor.execute("SHOW TABLES")

tables = mycursor.fetchall()

tableNames=[table[0] for table in tables]

print("================================")

print("Total number of tables: "+ str(len(tableNames)))

print(tableNames)

print("================================")

for tableName in tableNames:

print("================================")

print("Processing: "+ str(tableName))

mydb = mysql.connector.connect(

host=databaseHost,

user=databaseUsername,

password=databasePassword

)

mycursor = mydb.cursor()

mycursor.execute("USE "+databaseName)

if exportTableData:

mycursor.execute("SELECT * FROM "+tableName)

else:

mycursor.execute("SELECT * FROM "+tableName+" LIMIT 1")

print(mycursor.column_names)

with open(outputDirectory+tableName+".csv", 'w', newline='') as csvfile:

csvwriter = csv.writer(csvfile)

csvwriter.writerow(mycursor.column_names)

if exportTableData:

myresult = mycursor.fetchall()

csvwriter.writerows(myresult)

- 99

- 2

- 3