I have a JSON file with the below format

{"sku-1":{"att-a":"att-a-7","att-b":"att-b-3","att-c":"att-c-10","att-d":"att-d-10","att-e":"att-e-15","att-f":"att-f-11","att-g":"att-g-2","att-h":"att-h-7","att-i":"att-i-5","att-j":"att-j-14"},"sku-2":{"att-a":"att-a-9","att-b":"att-b-7","att-c":"att-c-12","att-d":"att-d-4","att-e":"att-e-10","att-f":"att-f-4","att-g":"att-g-13","att-h":"att-h-4","att-i":"att-i-1","att-j":"att-j-13"},"sku-3":{"att-a":"att-a-10","att-b":"att-b-6","att-c":"att-c-1","att-d":"att-d-1","att-e":"att-e-13","att-f":"att-f-12","att-g":"att-g-9","att-h":"att-h-6","att-i":"att-i-7","att-j":"att-j-4"}}

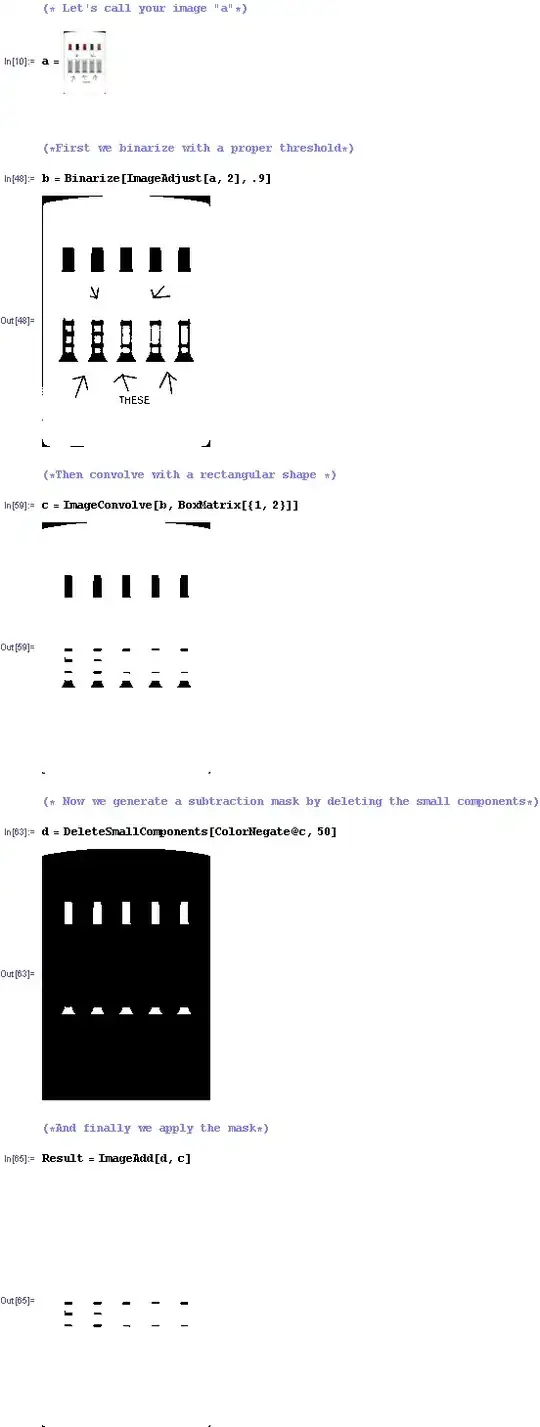

I need to read it into Spark Dataframe with the below new structure,

I also tried to read it as the below

val schema = (new StructType)

.add("SKUNAME", (new StructType)

.add("att-a", StringType)

.add("att-b", StringType)

.add("att-c", StringType)

.add("att-d", StringType)

.add("att-e", StringType)

.add("att-f", StringType)

.add("att-g", StringType)

.add("att-h", StringType)

.add("att-i", StringType)

.add("att-j", StringType))

val recommendationInputDf = sparkSession.read.schema(schema).json(recommendationsPath)

The output from my code above is below

Schema

root

|-- SKUNAME: struct (nullable = true)

| |-- att-a: string (nullable = true)

| |-- att-b: string (nullable = true)

| |-- att-c: string (nullable = true)

| |-- att-d: string (nullable = true)

| |-- att-e: string (nullable = true)

| |-- att-f: string (nullable = true)

| |-- att-g: string (nullable = true)

| |-- att-h: string (nullable = true)

| |-- att-i: string (nullable = true)

| |-- att-j: string (nullable = true)

Data

+-------+

|SKUNAME|

+-------+

| null|

+-------+

I also check other question similar like (Spark: Transpose DataFrame Without Aggregating) & (transpose-dataframe-using-spark-scala-without-using-pivot-function) but can't get the same output

From the comments I already checked the below solution proposed,

def toLong(df: DataFrame, by: Seq[String]): DataFrame = {

val (cols, types) = df.dtypes.filter { case (c, _) => !by.contains(c) }.unzip

require(types.distinct.size == 1)

val kvs = explode(array(

cols.map(c => struct(lit(c).alias("key"), col(c).alias("val"))): _*))

val byExprs = by.map(col(_))

import sparkSession.sqlContext.implicits._

df

.select(byExprs :+ kvs.alias("_kvs"): _*)

.select(byExprs ++ Seq($"_kvs.key", $"_kvs.val"): _*)

}

toLong(recommendationInputDf, Seq("sku-1")).show(12, false)

But the output is as below,

+--------------------------------------------------------------------------------------+-----+-------------------------------------------------------------------------------------+

|sku-1 |key |val |

+--------------------------------------------------------------------------------------+-----+-------------------------------------------------------------------------------------+

|[att-a-7,att-b-3,att-c-10,att-d-10,att-e-15,att-f-11,att-g-2,att-h-7,att-i-5,att-j-14]|sku-2|[att-a-9,att-b-7,att-c-12,att-d-4,att-e-10,att-f-4,att-g-13,att-h-4,att-i-1,att-j-13]|

|[att-a-7,att-b-3,att-c-10,att-d-10,att-e-15,att-f-11,att-g-2,att-h-7,att-i-5,att-j-14]|sku-3|[att-a-10,att-b-6,att-c-1,att-d-1,att-e-13,att-f-12,att-g-9,att-h-6,att-i-7,att-j-4] |

+--------------------------------------------------------------------------------------+-----+-------------------------------------------------------------------------------------+