Spark 2.0.1

I have the following RDD pipeline:

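```java
import java.util.Comparator;
import org.apache.avro.mapred.AvroKey;
import org.apache.hadoop.io.NullWritable;
import org.apache.spark.api.java.JavaRDD;
import scala.Tuple2;

class MyClass { }

JavaRDD<MyClass> rdd = ...; // loaded with newAPIHadoopFile (new Hadoop API)
Comparator<MyClass> comp = ...;

// create the comparator and do some maps on the rdd

rdd.mapToPair(l -> new Tuple2<>(l, ""))                    // key each record by itself
   .sortByKey(comp)                                        // global sort with the custom comparator
   .mapToPair(t -> new Tuple2<>(t._1, NullWritable.get())) // replace the dummy value
   .saveAsNewAPIHadoopFile(somePath, AvroKey.class, NullWritable.class,
                           SomeOutputFormat.class, hadoopConfiguration);
```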
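Spark serializes the comparator passed to `sortByKey` and ships it to the executors with the tasks, so it has to implement `Serializable`; a plain lambda or anonymous `Comparator` does not, and fails with a `NotSerializableException` when the tasks are serialized. A minimal sketch, assuming a hypothetical `MyClass` with a single `long id` sort field and a hypothetical `MyClassComparator`; the `roundTrip` helper uses plain Java serialization to check that the comparator survives being serialized, roughly what happens when Spark ships it:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

class ComparatorCheck {
    // Serializes and deserializes an object with plain Java serialization.
    static <T> T roundTrip(T obj) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(obj);
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            @SuppressWarnings("unchecked")
            T copy = (T) in.readObject();
            return copy;
        }
    }

    public static void main(String[] args) throws Exception {
        // Would throw NotSerializableException if MyClassComparator were not Serializable.
        Comparator<MyClass> comp = roundTrip(new MyClassComparator());
        List<MyClass> items = new ArrayList<>(Arrays.asList(new MyClass(3), new MyClass(1), new MyClass(2)));
        items.sort(comp);
        StringBuilder sb = new StringBuilder();
        for (MyClass m : items) sb.append(m.id).append(' ');
        System.out.println(sb.toString().trim()); // prints "1 2 3"
    }
}

// Hypothetical stand-in for MyClass with one sort field.
class MyClass implements Serializable {
    private static final long serialVersionUID = 1L;
    final long id;
    MyClass(long id) { this.id = id; }
}

// Hypothetical comparator: implements both Comparator and Serializable.
class MyClassComparator implements Comparator<MyClass>, Serializable {
    private static final long serialVersionUID = 1L;

    @Override
    public int compare(MyClass a, MyClass b) {
        return Long.compare(a.id, b.id);
    }
}
```

An instance of such a class can then be passed directly as `comp` to `sortByKey`.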

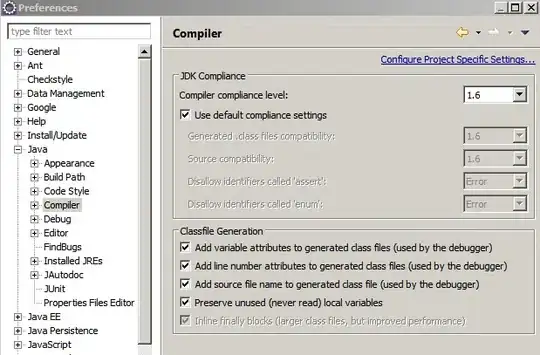

And this all works very unusual to me. The application consists of 2 jobs which looks as:

The strange thing here is that Job 0 runs newAPIHadoopFile -> map -> map -> sortByKey, and that is fine.

But Job 1 runs the same steps again (its stage 1 is not skipped), then sorts and saves. Stage 2 takes a lot of time. Why is that happening? Is there a way to change the execution plan to something more optimal?
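For context: `sortByKey` partitions its output with a `RangePartitioner`, and building that partitioner requires the partition boundaries up front, which Spark computes by sampling the keys in a separate job. That is most likely why Job 0 ends at `sortByKey`. Because the sampling job writes no shuffle output, there is nothing for Job 1 to reuse, so its first stage reads and maps the input again. The sketch below is a simplified, single-machine model of the boundary-picking step only, not Spark's code (Spark samples each partition separately and weights the samples); the class `RangeBoundsSketch` and its methods are hypothetical:

```java
import java.util.Arrays;
import java.util.Random;

class RangeBoundsSketch {
    public static void main(String[] args) {
        long[] keys = new long[1000];
        for (int i = 0; i < keys.length; i++) keys[i] = i;

        // With a sample smaller than the data, the bounds are approximate.
        long[] sample = reservoirSample(keys, 100, new Random(42));
        System.out.println("bounds from sample: " + Arrays.toString(computeBounds(sample, 4)));

        // Using every key as the "sample" gives exact quartile boundaries.
        System.out.println("exact bounds: " + Arrays.toString(computeBounds(keys, 4)));
        // prints "exact bounds: [250, 500, 750]"
    }

    // Algorithm R reservoir sampling: a uniform sample of up to k keys in one pass.
    static long[] reservoirSample(long[] keys, int k, Random rnd) {
        long[] reservoir = Arrays.copyOf(keys, Math.min(k, keys.length));
        for (int i = reservoir.length; i < keys.length; i++) {
            int j = rnd.nextInt(i + 1);
            if (j < reservoir.length) reservoir[j] = keys[i];
        }
        return reservoir;
    }

    // Sorts the sample and takes evenly spaced keys as the numPartitions - 1 upper bounds.
    static long[] computeBounds(long[] sample, int numPartitions) {
        if (sample.length == 0 || numPartitions < 2) return new long[0];
        long[] sorted = sample.clone();
        Arrays.sort(sorted);
        long[] bounds = new long[numPartitions - 1];
        for (int p = 1; p < numPartitions; p++) {
            bounds[p - 1] = sorted[(int) ((long) p * sorted.length / numPartitions)];
        }
        return bounds;
    }

    // A key goes to the first partition whose upper bound is >= key; larger keys go to the last one.
    static int partitionFor(long key, long[] bounds) {
        int p = 0;
        while (p < bounds.length && key > bounds[p]) p++;
        return p;
    }
}
```

Under this model, the sampling job and the sort job both evaluate the same lineage, so the file read and the two maps run twice unless that RDD is persisted before `sortByKey`.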