I was trying to override the backward gradient computation of the tf.stack op using tf.RegisterGradient and tf.gradient_override_map. Here is my code:

    import tensorflow as tf

    class SynthGradBuilder(object):
        def __init__(self):
            self.num_calls = 0

        def __call__(self, x, l=1.0):
            op_name = "SynthGrad%d" % self.num_calls

            @tf.RegisterGradient(op_name)
            def _grad_synth(op, grad):
                return grad[0]

            g = tf.get_default_graph()
            with g.gradient_override_map({"stack": op_name}):
                y = tf.stack([x,x])

            self.num_calls += 1
            return y

    GradSys = SynthGradBuilder()

In another script, I wrote:

    import tensorflow as tf
    from gradient_synthesizer import GradSys

    x = tf.Variable([1,2])
    y = GradSys(x, l=1)
    z = tf.stack([x,x])

    grad = tf.gradients(y, x, grad_ys=[[tf.convert_to_tensor([3, 4]),
                                        tf.convert_to_tensor([6, 8])]])
    grad_stack = tf.gradients(z, x, grad_ys=[[tf.convert_to_tensor([3, 4]),
                                              tf.convert_to_tensor([6, 8])]])

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        print "grad bp: ", sess.run(grad)
        print "grad_stack: ", sess.run(grad_stack)
        print "y: ", sess.run(y)

The expected output is:

    grad bp: [3,4]
    grad_stack: [3+6, 4+8] = [9, 12]
    y: [[1,2], [1,2]]
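The arithmetic behind these expected values can be sketched in plain Python, with no TensorFlow involved. The helper names `default_stack_grad` and `overridden_grad` are hypothetical and only illustrate the expectation: the default gradient of stacking x with itself sums the two upstream slices, while the override is meant to keep only the first one.

```python
# Upstream gradient supplied through grad_ys: one row per stacked copy of x.
grad_ys = [[3, 4], [6, 8]]


def default_stack_grad(upstream):
    """Expected default result for stack([x, x]): both slices flow back to x and are summed."""
    return [sum(column) for column in zip(*upstream)]


def overridden_grad(upstream):
    """Expected result of the override, which returns only the first slice (grad[0])."""
    return upstream[0]


expected_grad_stack = default_stack_grad(grad_ys)
expected_grad_bp = overridden_grad(grad_ys)

print("grad bp: %s" % expected_grad_bp)
print("grad_stack: %s" % expected_grad_stack)
```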

What I actually got from the code was:

indicating that tf.stack's backward gradients were not replaced at all, which was opposite to my expectation.

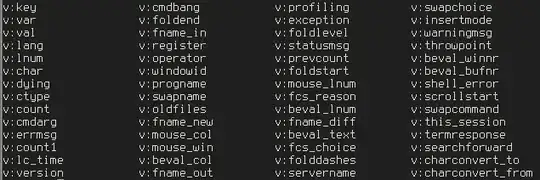

I wasn't sure whether the discrepancy was caused by wrongly using "stack" as the type string of the tf.stack operation, so I carried out the following experiment:

In the first item, which describes tensor y, the name "stack:0" suggests that the registered name of the tf.stack op is "stack", which I assume is also its type string. So the "stack" key does not seem to be at fault.
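A more direct check than reading the tensor name might be to print the op's own `name` and `type` attributes, which the graph API stores as separate fields. This is a minimal sketch, assuming TensorFlow is installed; `describe_stack_op` is a hypothetical helper and not part of the code above.

```python
import tensorflow as tf


def describe_stack_op():
    """Build tf.stack in a fresh graph and return (tensor name, op name, op type)."""
    # A fresh graph keeps the default op names free of numeric suffixes.
    with tf.Graph().as_default():
        x = tf.constant([1, 2])
        y = tf.stack([x, x])
        return y.name, y.op.name, y.op.type


tensor_name, op_name, op_type = describe_stack_op()
print("tensor name: %s" % tensor_name)
print("op name: %s" % op_name)
print("op type: %s" % op_type)
```

The documentation for gradient_override_map describes its keys as op type strings, so the value printed for the op type, rather than the op name, is the one to compare against the key used in the map.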

I am at a loss as to what is causing the problem in my code. Can anyone help me with this?