I'm using the DQN algorithm to train an agent in my environment, that looks like this:

- Agent is controlling a car by picking discrete actions (left, right, up, down)

- The goal is to drive at a desired speed without crashing into other cars

- The state contains the velocities and positions of the agent's car and the surrounding cars

- Rewards: -100 for crashing into other cars, positive reward according to the absolute difference to the desired speed (+50 if driving at desired speed)

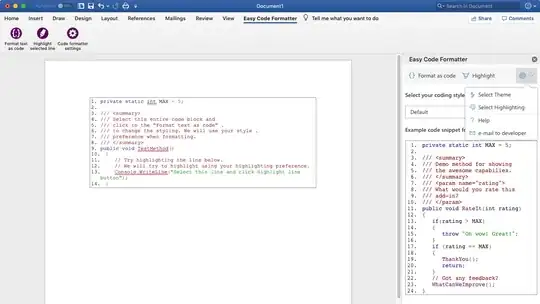

I have already adjusted some hyperparameters (network architecture, exploration, learning rate) which gave me some descent results, but still not as good as it should/could be. The rewards per epiode are increasing during training. The Q-values are converging, too (see figure 1). However, for all different settings of hyperparameter the Q-loss is not converging (see figure 2). I assume, that the lacking convergence of the Q-loss might be the limiting factor for better results.

Q-value of one discrete action durnig training

I'm using a target network which is updated every 20k timesteps. The Q-loss is calculated as MSE.

Do you have ideas why the Q-loss is not converging? Does the Q-Loss have to converge for DQN algorithm? I'm wondering, why Q-loss is not discussed in most of the papers.