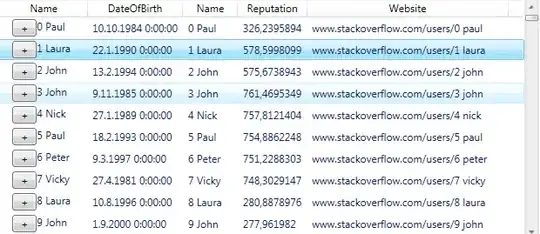

For a project, I have been attempting to transform the depth map given by libfreenect (a 480 by 640 matrix with depth values 0-255) into more usable (x,y,z) coordinates.

I originally assumed that the depth value d at each pixel represented the Euclidean distance between the sensor and the point found. Treating the camera as a point and the matrix as a virtual image plane, I placed each point at distance d along the ray cast from the camera through the corresponding pixel, and so reconstructed what I thought were the actual coordinates. As is evident below in Figure 1, the reconstructed room map (shown from above) is distorted.
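For concreteness, here is a minimal sketch of the Euclidean-distance reconstruction described above, assuming a simple pinhole model. The focal length `FOCAL_PX` and principal point `(CX, CY)` are placeholder assumptions, not calibrated values for my sensor, and the function name is only illustrative. The output is in whatever units d is in.

```python
import math

# Assumed pinhole intrinsics (placeholders, not calibrated values).
FOCAL_PX = 580.0        # focal length in pixels (assumption)
CX, CY = 319.5, 239.5   # principal point at the centre of a 640x480 image (assumption)

def point_from_range(u, v, d, f=FOCAL_PX, cx=CX, cy=CY):
    """Place a point at Euclidean distance d along the ray through pixel (u, v)."""
    # Direction of the ray through the pixel on a virtual image plane at z = 1.
    rx, ry, rz = (u - cx) / f, (v - cy) / f, 1.0
    # Normalise the direction so that the returned point lies exactly d from the origin.
    norm = math.sqrt(rx * rx + ry * ry + rz * rz)
    return (d * rx / norm, d * ry / norm, d * rz / norm)
```

Under this interpretation, every returned point lies on a sphere of radius d around the camera, so pixels with equal d form a curved surface rather than a flat one.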

Figure 1: d is Euclidean Distance

If I instead assume d represents the forward distance from the camera to each point (that is, the distance along the optical axis), the result is shown below, in Figure 2. Note the triangular shape, since measured points are located along rays projected from the robot's position. The x and y coordinates are scaled in proportion to the depth, and z is the depth value d.
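A minimal sketch of this second interpretation, again assuming a pinhole model. As before, `FOCAL_PX`, `CX`, and `CY` are placeholder assumptions rather than calibrated values, and the function name is hypothetical.

```python
# Assumed pinhole intrinsics (placeholders, not calibrated values).
FOCAL_PX = 580.0        # focal length in pixels (assumption)
CX, CY = 319.5, 239.5   # principal point at the centre of a 640x480 image (assumption)

def point_from_depth(u, v, d, f=FOCAL_PX, cx=CX, cy=CY):
    """Treat d as the z coordinate and scale the pixel offsets by d / f."""
    x = (u - cx) * d / f   # horizontal offset grows linearly with depth
    y = (v - cy) * d / f   # vertical offset grows linearly with depth
    return (x, y, d)
```

Here pixels with equal d all map to the same plane z = d, which is the key difference from the Euclidean-distance version.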

Figure 2: d is depth from camera, or z coordinate

For reference, here is the map generated if I do not scale the x and y coordinates by the depth, assume d is the z coordinate, and plot (x, y, z) directly, using the pixel indices as x and y. Note the rectangular shape of the room map, since points are not assumed to be located along rays cast from the sensor.

Figure 3: Original Image

Based on the above images, it appears that either Figure 2 or Figure 3 could be correct. Does anyone know what preprocessing libfreenect does on captured data points? I have looked online, but haven't found documentation on how depth is preprocessed before being stored in this matrix. Thanks in advance for any help, and I would be glad to supply any additional information required.