I loaded around 3,000 objects (files) into an S3 bucket. There is an event trigger on each file that is loaded into that bucket.

Lambda receives event triggers for only around 300 objects. If I retry (move the files out of S3 and put them back), it generates events for another 400 objects; the rest of the events never reach Lambda.

What am I missing here, and how can I scale for any number of objects created?

    var async = require('async');
    var aws = require('aws-sdk');
    var s3 = new aws.S3();
    var kinesis = new aws.Kinesis();
    var sns = new aws.SNS();
    var config = require('./config.js');

    var logError = function(errormsg) {
        sns.publish({
            TopicArn: config.TopicArn,
            Message: errormsg
        }, function(err, data) {
            if (err) {
                console.log(errormsg);
            }
        });
    };

    exports.handler = function(event, context, callback) {
        var readS3andSendtoKinesis = function(record, index, cb) {
            var params = {
                Bucket: record.s3.bucket.name,
                Key: record.s3.object.key
            };
            console.log('Received File: ' + record.s3.object.key);
            s3.getObject(params, function(err, data) {
                if (!err) {
                    var kinesisParams = {
                        Data: data.Body.toString('utf8'),
                        PartitionKey: config.PartitionKey,
                        StreamName: config.StreamName
                    };
                    kinesis.putRecord(kinesisParams, function(err, data) {
                        if (err) {
                            // Handle Kinesis Failures
                            logError(JSON.stringify(err, null, 2));
                        }
                        cb(null, 'done');
                    });
                } else {
                    // Handle S3 Failures
                    logError(JSON.stringify(err, null, 2));
                    cb(null, 'done');
                }
            });
        };

        async.eachOfLimit(event.Records, 1, readS3andSendtoKinesis, function(err) {
            callback(null, 'Done');
        });
    };
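
One thing that may be worth ruling out: in the handler above, every failure path still ends in `cb(null, 'done')` and the invocation always finishes with `callback(null, 'Done')`, so a failed S3 read or Kinesis write is counted as a successful invocation. Also, object keys in S3 event notifications are URL-encoded, so a key containing spaces or special characters may not match the stored object unless it is decoded first. The following is only a minimal sketch of a variant that decodes the key and reports failures to Lambda. The names `decodeS3Key`, `processRecords`, and `makeHandler` are hypothetical, and `getObject` and `putRecord` are assumed to be thin callback-style wrappers around `s3.getObject` and `kinesis.putRecord`; they are injected so the logic can be tried without AWS.

```javascript
// Hypothetical helper: S3 event notifications URL-encode the object key
// (spaces arrive as '+'), so decode it before calling getObject.
function decodeS3Key(rawKey) {
    return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Hypothetical helper: process records one at a time and collect failures
// instead of discarding them. getObject(params, cb) and putRecord(data, cb)
// are assumed wrappers around the AWS SDK calls, passed in by the caller.
function processRecords(records, getObject, putRecord, done) {
    var failures = [];
    var i = 0;

    function next() {
        if (i >= records.length) {
            return done(failures);
        }
        var record = records[i++];
        var key = decodeS3Key(record.s3.object.key);

        getObject({ Bucket: record.s3.bucket.name, Key: key }, function(err, data) {
            if (err) {
                failures.push({ key: key, stage: 's3', message: String(err.message || err) });
                return next();
            }
            putRecord(data.Body.toString('utf8'), function(putErr) {
                if (putErr) {
                    failures.push({ key: key, stage: 'kinesis', message: String(putErr.message || putErr) });
                }
                next();
            });
        });
    }

    next();
}

// Hypothetical wiring: fail the invocation when any record failed, so the
// failure shows up in the function's error metric instead of being hidden.
function makeHandler(getObject, putRecord) {
    return function(event, context, callback) {
        processRecords(event.Records, getObject, putRecord, function(failures) {
            if (failures.length > 0) {
                return callback(new Error(
                    failures.length + ' of ' + event.Records.length +
                    ' records failed: ' + JSON.stringify(failures)));
            }
            callback(null, 'Done');
        });
    };
}
```

With this shape, `exports.handler` could be assigned the result of `makeHandler(...)` with the real wrappers. Returning an error makes failed invocations visible in the error count, which would help tell "the event never arrived" apart from "the event arrived but the S3 read or Kinesis write failed".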

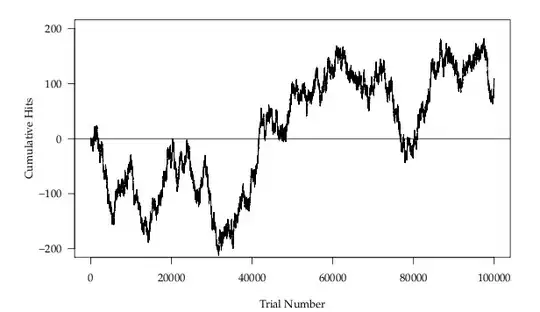

Since everyone recommended to look at cloudwatch, sharing the cloudwatch metrics here for the associated lambda,