Note: I already know that Python uses approximations for floating point numbers. However, their behaviour is inconsistent even after a subtraction (which should not increase the representation error, should it?). Furthermore, I would like to know how to fix the problem.

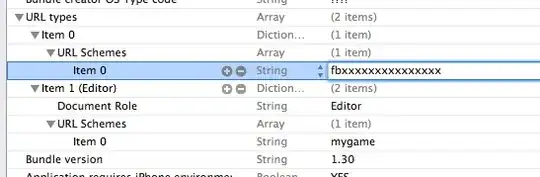

Python sometimes seems to use an approximation for the actual value of a float, as you can see in the 5th and 6th elements of the this_spectrum variable in the image. All values were read from a text file and should have only 2 decimals.

When you print them or use them in a Python calculation, they behave as if they have the intended value:

In: this_spectrum[4] == 1716.31

Out: True

However, after using them in a Cython script which calculates all pairwise distances between elements (i.e. performs a simple subtraction of values) and stores them in alphaMatrix, it seems the Python approximations were used instead of the actual values:

In: alphaMatrix[0][0]

Out: 161.08

In: alphaMatrix[0][0] == 161.08

Out: False

In: alphaMatrix[0][0] == 161.07999999999996

Out: True

Why is this happening, and what would be the proper way to fix this problem? Is this really a Cython problem/bug/feature(?) or is there something else going on?
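
For reference, here is a minimal pure-Python sketch of the same kind of comparison, without Cython. The second value, 1555.23, is hypothetical: I picked it only so that the difference is nominally 161.08, and it is not taken from my data. The sketch also shows three workarounds I am aware of (a tolerance with `math.isclose`, rounding back to 2 decimals, and `decimal.Decimal` built from strings); I do not know whether any of them is the proper fix for the Cython case.

```python
import math
from decimal import Decimal

a = 1716.31  # this_spectrum[4], as in the question
b = 1555.23  # hypothetical second value, chosen so a - b is nominally 161.08

diff = a - b  # plain double-precision subtraction

# Exact equality may fail: a and b each carry their own rounding error,
# and the difference is not guaranteed to be the double nearest to 161.08.
print(diff == 161.08)

# Workaround 1: compare with a tolerance instead of exact equality.
close = math.isclose(diff, 161.08, rel_tol=1e-9)

# Workaround 2: round back to the 2 decimals the input data had.
rounded_equal = round(diff, 2) == 161.08

# Workaround 3: exact decimal arithmetic, parsing the text values as strings.
exact_equal = Decimal("1716.31") - Decimal("1555.23") == Decimal("161.08")

print(close, rounded_equal, exact_equal)
```

If the values always have 2 decimals, the `Decimal` route (or storing them as integer hundredths) would avoid the rounding error altogether, at the cost of not being usable directly in a typed Cython `double` loop.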