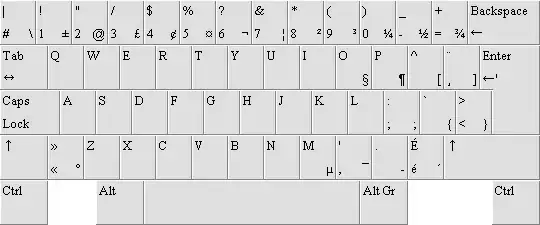

With this code I created some bounding boxes around the characters in the below image:

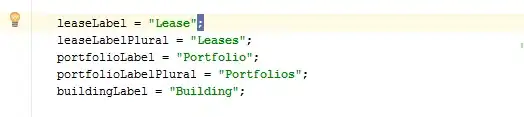

import csv

import cv2

from pytesseract import pytesseract as pt

pt.run_tesseract('bb.png', 'output', lang=None, boxes=True, config="hocr")

# To read the coordinates

boxes = []

with open('output.box', 'rt') as f:

reader = csv.reader(f, delimiter=' ')

for row in reader:

if len(row) == 6:

boxes.append(row)

# Draw the bounding box

img = cv2.imread('bb.png')

h, w, _ = img.shape

for b in boxes:

img = cv2.rectangle(img, (int(b[1]), h-int(b[2])), (int(b[3]), h-int(b[4])), (0, 255, 0), 2)

cv2.imshow('output', img)

cv2.waitKey(0)

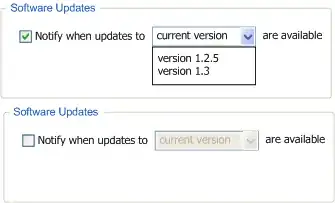

OUTPUT

What I would like to have is this:

The program should draw a perpendicular line on the X axis of the bounding box (only for the first and third text-area. The one in the middle must not be interested in the process).

The goal is this (and of there is another way to achieve it, please explain): once I have this two lines (or, better, group of coordinates), using a mask to cover this two areas.

Is it possible ?

Source image:

CSV as requested: print(boxes)

[['l', '56', '328', '63', '365', '0'], ['i', '69', '328', '76', '365', '0'], ['n', '81', '328', '104', '354', '0'], ['e', '108', '328', '130', '354', '0'], ['1', '147', '328', '161', '362', '0'], ['m', '102', '193', '151', '227', '0'], ['i', '158', '193', '167', '242', '0'], ['d', '173', '192', '204', '242', '0'], ['d', '209', '192', '240', '242', '0'], ['l', '247', '193', '256', '242', '0'], ['e', '262', '192', '292', '227', '0'], ['t', '310', '192', '331', '235', '0'], ['e', '334', '192', '364', '227', '0'], ['x', '367', '193', '398', '227', '0'], ['t', '399', '192', '420', '235', '0'], ['-', '440', '209', '458', '216', '0'], ['n', '481', '193', '511', '227', '0'], ['o', '516', '192', '548', '227', '0'], ['n', '553', '193', '583', '227', '0'], ['t', '602', '192', '623', '235', '0'], ['o', '626', '192', '658', '227', '0'], ['t', '676', '192', '697', '235', '0'], ['o', '700', '192', '732', '227', '0'], ['u', '737', '192', '767', '227', '0'], ['c', '772', '192', '802', '227', '0'], ['h', '806', '193', '836', '242', '0'], ['l', '597', '49', '604', '86', '0'], ['i', '610', '49', '617', '86', '0'], ['n', '622', '49', '645', '75', '0'], ['e', '649', '49', '671', '75', '0'], ['2', '686', '49', '710', '83', '0']]

EDIT:

To use zindarod answer, you need tesserocr. Installation through pip install tesserocr can give you various errors.

I found wheel version of it (after hours trying to install and solve errors, see my comment below the answer...): here you can find/download it.

Hope this helps..