I'm trying to vectorize some text with sklearn CountVectorizer. After, I want to look at features, which generate vectorizer. But instead, I got a list of codes, not words. What does this mean and how to deal with the problem? Here is my code:

vectorizer = CountVectorizer(min_df=1, stop_words='english')

X = vectorizer.fit_transform(df['message_encoding'])

vectorizer.get_feature_names()

And I got the following output:

[u'00',

u'000',

u'0000',

u'00000',

u'000000000000000000',

u'00001',

u'000017',

u'00001_copy_1',

u'00002',

u'000044392000001',

u'0001',

u'00012',

u'0004',

u'0005',

u'00077d3',

and so on.

I need real feature names (words), not these codes. Can anybody help me please?

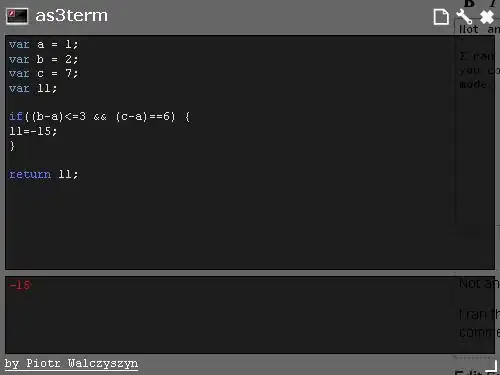

UPDATE: I managed to deal with this problem, but now when I want to look at my words I see many words that actually are not words, but senseless sets of letters (see screenshot attached). Anybody knows how to filter this words before I use CountVectorizer?