When I run a DAG in Airflow 1.8.0, I find a long delay between the completion of a predecessor task and the moment the successor task is picked up for execution (usually longer than the execution times of the individual tasks). The same happens with the Sequential, Local and Celery executors. Is there a way to reduce this overhead (for example, parameters in airflow.cfg that can speed up DAG execution)?

A Gantt chart has been added for reference:

- What's the `scheduler_heartbeat_sec` value in your configuration? Maybe try reducing it. – Chengzhi Jan 30 '18 at 22:13

- Just curious: do you remember which executor you used for the data in the Gantt chart? I would expect the LocalExecutor and the CeleryExecutor to look a bit different, at least when multiple nodes are involved. – Taylor D. Edmiston Jul 24 '18 at 22:11

- I'm not sure why yet, but with 1.10.7 (and probably later versions) and the `LocalExecutor`, the time between tasks roughly matches the number of seconds in the `min_file_process_interval` setting. Maybe try shortening that setting? – Marco May 06 '20 at 13:51

2 Answers

As Nick said, Airflow is not a real-time tool. Tasks are scheduled and executed as soon as possible, but the next task will never run immediately after the previous one finishes.

When you have more than ~100 DAGs with ~3 tasks each, or DAGs with many tasks (~100 or more), you have to consider three things:

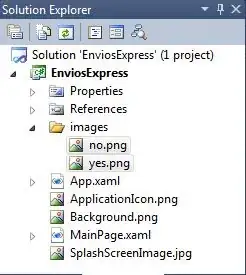

- Increase the number of threads that the DagFileProcessorManager uses to load and execute the DAGs (airflow.cfg):

```ini
[scheduler]
max_threads = 2
```

The `max_threads` setting controls how many DAGs are picked up and processed (executed/terminated) in parallel (see here).

Increasing this value may reduce the time between tasks.
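Before tuning, it helps to check what your scheduler is actually configured with. A minimal sketch using Python's standard `configparser` to read the `[scheduler]` section of `airflow.cfg`, assuming an Airflow 1.x-style file with the key names discussed in this thread (`max_threads`, `scheduler_heartbeat_sec`, `min_file_process_interval`). The helper `scheduler_settings` is hypothetical, and later Airflow releases renamed some keys (`max_threads` became `parsing_processes`), so a missing key is reported as `None` rather than treated as an error.

```python
import configparser
from typing import Dict, Optional

# Scheduler keys mentioned in this thread (Airflow 1.x names; newer
# releases may rename or drop some of them).
SCHEDULER_KEYS = (
    "max_threads",
    "scheduler_heartbeat_sec",
    "min_file_process_interval",
)


def scheduler_settings(cfg_text: str) -> Dict[str, Optional[int]]:
    """Return the scheduler keys above as ints, or None when a key is absent."""
    # interpolation=None: airflow.cfg can contain '%' characters (e.g. log formats)
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    result: Dict[str, Optional[int]] = {}
    for key in SCHEDULER_KEYS:
        # fallback=None means "not set in this file", so Airflow's default applies
        result[key] = parser.getint("scheduler", key, fallback=None)
    return result


if __name__ == "__main__":
    sample = """
[scheduler]
max_threads = 2
scheduler_heartbeat_sec = 5
"""
    print(scheduler_settings(sample))
    # {'max_threads': 2, 'scheduler_heartbeat_sec': 5, 'min_file_process_interval': None}
```

For a real installation you would pass the contents of `$AIRFLOW_HOME/airflow.cfg`. Note that this reads only the file: Airflow applies environment-variable overrides such as `AIRFLOW__SCHEDULER__MAX_THREADS` on top of it.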

- Monitor your Airflow database for bottlenecks. Airflow uses its database to manage and execute processes:

Recently we suffered from the same problem. The time between tasks was ~10-15 minutes, and we were using PostgreSQL on AWS.

The instance was barely using its resources (~20 IOPS, 20% of the memory and ~10% of the CPU), but Airflow was very slow.

After looking at the database performance using PgHero, we discovered that even a query using an index on a small table was taking more than one second.

So we increased the database instance size, and Airflow is now running as fast as a rocket. :)
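PgHero is what was used here. As a rough, tool-independent check, the sketch below runs a query several times over any DB-API 2.0 connection and reports the median wall time. It is demonstrated on an in-memory SQLite table named after Airflow's `task_instance` table, but that schema is a simplified stand-in, not Airflow's real one, and `median_query_seconds` is a hypothetical helper. Against the real metadata database you would pass your driver's connection instead (the placeholder style depends on the driver: `?` for `sqlite3`, `%s` for `psycopg2`).

```python
import sqlite3
import statistics
import time
from typing import Any, Sequence


def median_query_seconds(conn: Any, sql: str, params: Sequence[Any] = (), repeat: int = 5) -> float:
    """Run `sql` `repeat` times on a DB-API 2.0 connection; return the median wall time."""
    if repeat < 1:
        raise ValueError("repeat must be >= 1")
    timings = []
    for _ in range(repeat):
        cur = conn.cursor()
        start = time.perf_counter()
        if params:
            cur.execute(sql, params)
        else:
            cur.execute(sql)
        cur.fetchall()  # include the fetch: slow result transfer also hurts the scheduler
        timings.append(time.perf_counter() - start)
        cur.close()
    return statistics.median(timings)


if __name__ == "__main__":
    # Simplified stand-in table; NOT Airflow's real task_instance schema.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE task_instance (task_id TEXT, state TEXT)")
    conn.execute("CREATE INDEX ti_state ON task_instance (state)")
    conn.executemany(
        "INSERT INTO task_instance VALUES (?, ?)",
        [("t%d" % i, "success") for i in range(100)],
    )
    elapsed = median_query_seconds(
        conn, "SELECT task_id FROM task_instance WHERE state = ?", ("success",)
    )
    print(f"median: {elapsed * 1000:.3f} ms")
```

An indexed lookup on a small table that takes anywhere near a second on your real metadata database is the kind of symptom described above.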

- To see how much time Airflow spends loading DAGs, run:

```shell
airflow list_dags -r
```

```
DagBag parsing time: 7.9497220000000075
```

If the DagBag parsing time is higher than ~5 minutes, it could be an issue.
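When the total parsing time is high, the next question is which file is responsible. A minimal sketch, assuming the DAG files are plain Python modules under one folder: it executes each `.py` file once with `importlib` and times its top-level code, which is the part re-run every time the scheduler re-parses the file. `time_dag_files` is a hypothetical helper and only approximates what Airflow's DagBag does.

```python
import importlib.util
import pathlib
import time
from typing import List, Tuple


def time_dag_files(dag_folder: str) -> List[Tuple[str, float]]:
    """Execute each .py file under dag_folder once; return (relative path, seconds), slowest first.

    Only an approximation of Airflow's DagBag, which does more than a plain
    import (it also collects DAG objects and records import errors).
    """
    root = pathlib.Path(dag_folder)
    results: List[Tuple[str, float]] = []
    for index, path in enumerate(sorted(root.rglob("*.py"))):
        # Unique throwaway module name so files with the same stem don't clash
        spec = importlib.util.spec_from_file_location(f"_dag_probe_{index}", str(path))
        module = importlib.util.module_from_spec(spec)
        start = time.perf_counter()
        try:
            spec.loader.exec_module(module)  # runs the file's top-level code
        except Exception as exc:  # keep going so one broken file doesn't hide the rest
            print(f"{path.relative_to(root)}: import failed: {exc!r}")
        results.append((str(path.relative_to(root)), time.perf_counter() - start))
    return sorted(results, key=lambda item: item[1], reverse=True)
```

For example, `time_dag_files("/path/to/dags")[:10]` lists the ten slowest files. Airflow puts the DAG folder on `sys.path`, so if your DAG files import helper modules from it, you may need `sys.path.insert(0, "/path/to/dags")` before calling this. A file that stands out usually does real work (database or API calls, large computations) at import time.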

All of this helped us run Airflow faster. I strongly advise upgrading to version 1.9, since many performance issues were fixed in that version.

BTW, we are running the Airflow master branch in production, with the LocalExecutor and PostgreSQL as the metadata database.

- I hadn't been back to this question in some time... @Marcos, do you know for sure whether the multi-process scheduler works now? I believe in 1.7 or 1.8 there was a deadlock scenario and the scheduler would just lock up. Instead, we run multiple `airflow scheduler` processes with different directories to batch up the DAGs. – Nick Jul 20 '19 at 21:20

- Additionally, I wrote a DAG caching mechanism that can cache a DAG in Redis for long-running DagBag imports. Another thing to review is the DAG-generation code: make sure it does nothing other than define the DAG, and let the task instances handle everything else. – Nick Jul 20 '19 at 21:21

- I think something is super weird, though: it takes 40 seconds between tasks when running a backfill, even though I have a trivial DagBag that takes about 0.05 seconds to parse. What is Airflow doing for 40 seconds? My scenario: I have some quick jobs that download historical currency-rate data, creating one file per day, and I want to backfill several years. The task takes 4 seconds to run, but Airflow takes 40 seconds between tasks, so most of the time in this sequential backfill is wasted: it should take hours but instead takes days! Note that I can't use task parallelism or my IP would be blocked. – eraoul Oct 29 '19 at 06:06

- I would love to see an answer to your questions. We had similar problems with a huge backfill, and our DagBag also parses in no time. – grackkle Aug 03 '20 at 16:07

- I asked this question about 3 years ago. The delay has been reduced over the years, and I think it will be cut down significantly in Airflow 2: https://www.element61.be/en/resource/whats-changing-airflow-20 – Prasann Aug 05 '20 at 10:19

- Excellent answer. On Airflow 1.10.2, a combination of the following helped: 1) configuring the settings carefully, 2) deleting old task instances and their DAG runs from the DB directly, and 3) increasing the size of the database. We saw the same relatively low CPU/memory pressure on the DB, but the scheduler was slow to schedule tasks. Bumping up the memory and CPU of the DB really helped. The root cause seems to be locks and waits on those tables, but it can be mitigated to some degree by throwing more resources at it. The correct solution, of course, is to upgrade the Airflow version. – yegeniy Dec 12 '20 at 06:04
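The backfill numbers in eraoul's comment above show why the gap dominates: each run pays ~40 s of waiting on top of 4 s of work. A small sketch of that arithmetic, assuming a constant gap per run; `estimate_sequential_backfill` is a hypothetical helper, and `n_runs=365` (one year of daily runs) is an illustrative value, not taken from the comment.

```python
from dataclasses import dataclass


@dataclass
class BackfillEstimate:
    useful_seconds: float     # time spent actually running tasks
    total_seconds: float      # useful time plus scheduler gaps
    slowdown: float           # total / useful
    overhead_fraction: float  # share of wall-clock time spent waiting


def estimate_sequential_backfill(n_runs: int, task_seconds: float, gap_seconds: float) -> BackfillEstimate:
    """Estimate wall-clock time when runs execute strictly one after another.

    Assumes every run pays the same fixed gap before the next task starts.
    """
    if n_runs < 0 or task_seconds <= 0 or gap_seconds < 0:
        raise ValueError("need n_runs >= 0, task_seconds > 0, gap_seconds >= 0")
    per_run = task_seconds + gap_seconds
    return BackfillEstimate(
        useful_seconds=n_runs * task_seconds,
        total_seconds=n_runs * per_run,
        slowdown=per_run / task_seconds,
        overhead_fraction=gap_seconds / per_run,
    )


if __name__ == "__main__":
    # Numbers from the comment: 4 s of work, ~40 s between tasks.
    est = estimate_sequential_backfill(n_runs=365, task_seconds=4, gap_seconds=40)
    print(f"slowdown x{est.slowdown:.0f}, {est.overhead_fraction:.0%} of the time is waiting")
    # slowdown x11, 91% of the time is waiting
```

Whatever the number of runs, the backfill takes about 11 times as long as the work itself, which is why shrinking the gap (or avoiding it) matters more than speeding up the task.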

Your Gantt chart shows things on the order of seconds. Airflow is not meant to be a real-time scheduling engine; it deals with things on the order of minutes. If you need things to run faster, you may consider a scheduling tool other than Airflow. Alternatively, you can put all of the work into a single task so you do not suffer from the scheduler's delays.
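The single-task approach can be sketched as one callable that loops over a date range, using the daily-download backfill from the comments as the example. `run_days_in_one_task` and `process_day` are hypothetical names; the per-day work stays sequential, so it would also respect a no-parallelism constraint like the IP-blocking one described in the comments.

```python
from datetime import date, timedelta
from typing import Callable, List


def run_days_in_one_task(start: date, end: date, process_day: Callable[[date], None]) -> List[date]:
    """Process every day in [start, end] sequentially inside ONE task.

    The scheduler's between-task delay is paid once for the whole range
    instead of once per day. Trade-off: a failure part-way through means the
    whole task is retried, unless process_day skips days that are already done.
    """
    if end < start:
        raise ValueError("end must not be before start")
    done = []
    day = start
    while day <= end:
        process_day(day)  # e.g. download one day's file (hypothetical)
        done.append(day)
        day += timedelta(days=1)
    return done
```

In a DAG, a function like this could be wired up through `PythonOperator`, passing it as `python_callable` and the date range and per-day function through `op_kwargs`. The cost is coarser visibility: the Airflow UI then shows one task instead of one task per day.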

- Hi @RobertLugg, unfortunately I haven't done much research into alternatives. Our org has stuck with Airflow for now. There are a number of alternatives, but I think the abstraction level of a job varies between them... there is Mesos, Conductor (Netflix), Luigi... I am not sure which are more real-time. – Nick Jul 20 '19 at 21:18

- @RobertLugg Airflow is built on top of Celery. To reduce the time between tasks, you can build your ETL pipeline platform directly on top of Celery. There is a lot of technical documentation and blog posts about this that you should be able to find easily. – sudeepgupta90 Feb 17 '20 at 14:27

- @sudeepgupta90 Airflow is NOT built on top of Celery, although it allows Celery to be used as its task executor. Depending on the complexity of the jobs the OP has in mind, you might be inadvertently suggesting building a whole new Airflow, which is a monumental task. If Airflow's performance does not meet the requirements of the problem at hand, it's much better to find a different framework and use that. Think about the hundreds of configuration options, the admin UI and the error handling, all taken care of for you. – Nick Feb 18 '20 at 05:32