We are using scikit-learn to find clusters of similar images. We want to expose this through an internal API, but when we import objects from scikit-learn, or use them, we see a very high number of context switches.

Each of these imports creates a lot of them:

```python
from sklearn.neighbors import NearestNeighbors
from sklearn.externals.joblib import load
from sklearn.decomposition import PCA
from sklearn.externals import joblib
```

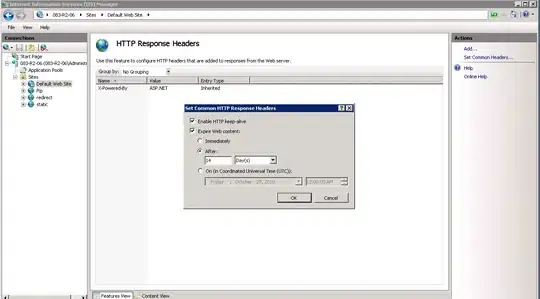

Here is the `vmstat 1` output during a restart of our program.

We added sleeps before and after the imports and correlated the high number of context switches with those lines.
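Instead of sleeps plus system-wide `vmstat` (which also counts switches from every other process on the node), the per-process counters from `getrusage` can be read directly around a suspect line. A minimal sketch, assuming Linux (the `resource` module is Unix-only); `count_context_switches` is a hypothetical helper, not part of scikit-learn:

```python
import resource


def count_context_switches(fn, *args, **kwargs):
    """Call fn(*args, **kwargs) and return (result, voluntary, involuntary),
    where the last two are the context switches this process incurred during
    the call. On Linux, RUSAGE_SELF sums over all threads of the process,
    including native threads started by C libraries."""
    before = resource.getrusage(resource.RUSAGE_SELF)
    result = fn(*args, **kwargs)
    after = resource.getrusage(resource.RUSAGE_SELF)
    voluntary = after.ru_nvcsw - before.ru_nvcsw      # thread blocked/yielded
    involuntary = after.ru_nivcsw - before.ru_nivcsw  # thread was preempted
    return result, voluntary, involuntary
```

For example, `count_context_switches(importlib.import_module, "sklearn.neighbors")` measures one import in isolation. Run each measurement in a fresh interpreter, because a module that is already imported is served from the cache and costs almost nothing the second time.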

We also see a big increase in context switches when we crunch the numbers in our 3 GB `NearestNeighbors` object.

You can clearly spot the three queries we sent to our API.

Here are the suspects for the increase:

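```python
def reduce_dimensions(self, dataset):
    # Project the input into the reduced space of the fitted dim_obj
    # (presumably the PCA object imported above)
    return self.dim_obj.transform(dataset)

def get_closest_cluster(self, input_data):
    # Returns (distances, indices) of all neighbours within self.radious
    indexs_with_distance = self.cluster_obj.radius_neighbors(
        X=input_data, radius=self.radious, return_distance=True)
    return self.get_ordered_indexs(indexs_with_distance)
```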
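One way to check whether these calls (or the imports above) run on more threads than expected is to count the process's OS threads, which includes native threads created by C libraries that Python's `threading` module never sees. A minimal Linux-only sketch reading `/proc/self/task`; the idea that a multithreaded BLAS or OpenMP pool behind numpy is the source is an assumption to verify, not something the measurements above establish:

```python
import os
import threading


def native_thread_count():
    """Number of OS threads in this process. On Linux, /proc/self/task has
    one entry per thread, including threads started by C extensions."""
    return len(os.listdir("/proc/self/task"))


def python_thread_count():
    """Threads known to Python's threading module only."""
    return threading.active_count()
```

Calling both before and after `from sklearn.decomposition import PCA`, or around the `radius_neighbors` call, shows whether native threads appear; a large gap between the two counts points at a native thread pool rather than at Python code.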

This happens both when we run our setup with Docker Compose on our laptops and when we run it on Nomad with Docker. The web app is written with Flask and served with gunicorn.
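Inside a container, libraries that size their thread pools from the machine's core count may see every core of the host rather than the container's share. A minimal sketch to compare what the process sees, assuming Linux (`os.sched_getaffinity` is not available on macOS or Windows); `visible_cpus` is a hypothetical helper:

```python
import os


def visible_cpus():
    """Return (online_cpus, allowed_cpus) as seen by this process."""
    online = os.cpu_count()                 # online CPUs of the whole machine (may be None)
    allowed = len(os.sched_getaffinity(0))  # CPUs this process may run on (cpuset)
    return online, allowed
```

Only a cpuset restriction (for example Docker's `--cpuset-cpus`) shows up in `allowed`. A CPU-time quota such as Docker's `--cpus` appears in neither number, so a library may still start one thread per host core inside a container limited that way.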

Is there any way to make scikit-learn more economical with context switches?
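One commonly suggested approach, assuming the switches come from multithreaded BLAS or OpenMP pools behind numpy/scipy (not confirmed by the measurements above), is to cap those pools through environment variables. Which variable matters depends on the BLAS your numpy build links against (OpenBLAS, MKL, ...); `limit_native_threads` is a hypothetical helper:

```python
import os

# Assumption: the extra threads come from native BLAS/OpenMP pools.
# These variables are read when those libraries are loaded, so they only
# take effect if set before numpy (and therefore sklearn) is imported.
THREAD_LIMIT_VARS = ("OMP_NUM_THREADS", "OPENBLAS_NUM_THREADS", "MKL_NUM_THREADS")


def limit_native_threads(n=1):
    """Cap native thread pools at n threads, keeping any value already set."""
    for var in THREAD_LIMIT_VARS:
        os.environ.setdefault(var, str(n))


limit_native_threads(1)
# Only after this point import the heavy modules, e.g.:
# from sklearn.neighbors import NearestNeighbors
```

With gunicorn this has to run at the top of the app module, before any numpy import; alternatively the same variables can be set in the Docker Compose or Nomad job environment. Each worker then computes single-threaded, so parallelism comes from the number of gunicorn workers instead.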

Our admins are concerned that this will degrade every other application deployed on the same nodes.

We are using Python 3.6 and scikit-learn 0.19.1.