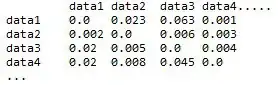

I created the following image (image 3) by applying a threshold mask (image 2) to image 1. I am trying to set every pixel outside the central region of image 3 (the lungs) to a single colour, for example black, using OpenCV, so that I am left with just the lungs against a uniform (or even transparent) background. My problem is that the outermost pixels are very similar to those inside the lungs in image 3. Is this possible with OpenCV?

-

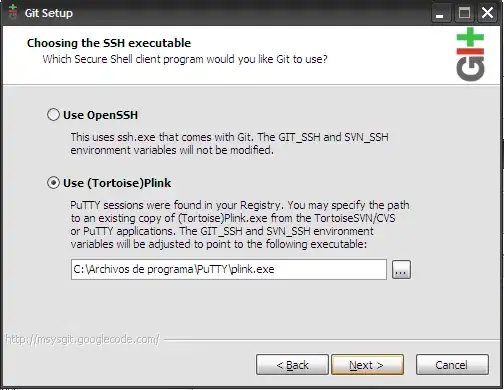

So to be clear you want the third image to look how it looks now, but everything black except the lungs, is that correct? What I would do is use `floodFill()` on your mask from the edge of the image; this will paint everything that's white that touches the border of the image black, so that your mask will only include the inner lungs. Part of my answer [here](https://stackoverflow.com/a/46084597/5087436) includes flood-filling from the edges. If you think this will work for your image I can write a complete answer. – alkasm Nov 25 '17 at 07:01

-

Exactly. It would also be useful to know if all of the background except the lungs could be made transparent. Many thanks – GhostRider Nov 25 '17 at 07:17

-

Alright I made a final edit including the transparency that you'd like. – alkasm Nov 25 '17 at 07:27

1 Answer

Simply `floodFill()` the mask with black, seeding from the boundaries of the image. See the flood fill step in my answer here to see it used in another scenario.

Similarly, you can use `floodFill()` to find which pixels connect to the edges of the image. Whatever is left unconnected lies inside the lungs, so you can use it to put back the holes that thresholding left there. See my answer here for a different example of this hole-filling process.

I copied and pasted the code straight from the above answers, only modifying the variable names:

```python
import cv2
import numpy as np

img = cv2.imread('img.jpg', 0)
mask = cv2.imread('mask.png', 0)

# flood fill to remove mask at borders of the image
h, w = img.shape[:2]
for row in range(h):
    if mask[row, 0] == 255:
        cv2.floodFill(mask, None, (0, row), 0)
    if mask[row, w-1] == 255:
        cv2.floodFill(mask, None, (w-1, row), 0)
for col in range(w):
    if mask[0, col] == 255:
        cv2.floodFill(mask, None, (col, 0), 0)
    if mask[h-1, col] == 255:
        cv2.floodFill(mask, None, (col, h-1), 0)

# flood fill background to find inner holes
holes = mask.copy()
cv2.floodFill(holes, None, (0, 0), 255)

# invert holes mask, bitwise or with mask to fill in holes
holes = cv2.bitwise_not(holes)
mask = cv2.bitwise_or(mask, holes)

# display masked image
masked_img = cv2.bitwise_and(img, img, mask=mask)
masked_img_with_alpha = cv2.merge([img, img, img, mask])
cv2.imwrite('masked.png', masked_img)
cv2.imwrite('masked_transparent.png', masked_img_with_alpha)
```

Edit: As an aside, "transparency" is basically a mask: the values tell you how opaque each pixel is. If the pixel is 0, it's totally transparent; if it's 255 (for `uint8`), it's completely opaque; if it's in between, it's partially transparent. So the exact same mask used here at the end can be stacked onto the image as a fourth (alpha) channel (you can use `cv2.merge` or numpy to stack), which makes every 0 pixel in the mask totally transparent; simply save the image as a PNG to keep the transparency. The above code creates an image with alpha transparency as well as an image with a black background.
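To make the "alpha is just a mask" point concrete, here is a minimal numpy-only sketch. The tiny `gray` and `alpha` arrays are made-up stand-ins for the real image and mask, chosen so the three opacity levels (0, in between, 255) are all visible:

```python
import numpy as np

# Hypothetical 2x3 grayscale image and a mask with three opacity levels
gray = np.array([[10, 20, 30],
                 [40, 50, 60]], dtype=np.uint8)
alpha = np.array([[0, 128, 255],
                  [255, 128, 0]], dtype=np.uint8)

# Repeat the gray channel three times (B, G, R) and append alpha as the fourth channel
bgra = np.dstack([gray, gray, gray, alpha])

print(bgra.shape)            # (2, 3, 4)
print(bgra[0, 0].tolist())   # [10, 10, 10, 0] -> fully transparent pixel
```

Note that the colour values of a transparent pixel are kept as they were; only the fourth channel says the pixel should not be shown.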

Here the background looks white because it is transparent, but if you save the image to your system you'll see that it actually is transparent. FYI, OpenCV ignores the alpha channel during `imshow()`, so you'll only see the transparency after saving the image.

Edit: One last note: your thresholding has removed some bits of the lungs. I've added back the holes from thresholding that occur inside the lungs, but this misses some chunks along the boundary that were also removed. If it's important, you can smooth those out a bit by doing contour detection on the mask. Check out the "Contour Approximation" section of OpenCV's contour features tutorial. Basically, it tries to simplify the contour while staying within some epsilon distance of the actual contour. This might be useful and is easy to implement, so I figured I'd throw it in as a suggestion at the end here.

-

Alexander - apologies... I am having a little difficulty seeing where this code is adjusted to create an entirely black background vs. an entirely transparent background (both results are very useful and I would like to have the option of selecting which one) – GhostRider Nov 25 '17 at 08:06

-

@GhostRider I edited the code, give it a look. The only differences are in the last few lines. `cv2.bitwise_and()` simply masks the image, so the image shows through only where the mask is white; everywhere else it's black. For transparency, I used `cv2.merge()` to duplicate the grayscale `img` into a three-channel image and then stacked a fourth channel, which is the alpha channel. This is just the mask, so the parts of the mask that are white show the normal image and the parts that are black are transparent. Hope that helps! – alkasm Nov 25 '17 at 09:04