Recently I implemented a VGG-16 network in both TensorFlow and PyTorch. The data set is CIFAR-10, where each image is 32 × 32 RGB.

I started with a batch size of 64 and found that PyTorch uses much less GPU memory than TensorFlow. I then ran some experiments with different batch sizes and plotted the results in the figure posted below.

After some research, I learned that TensorFlow uses the BFC (best-fit with coalescing) algorithm to manage memory. That explains why TensorFlow's memory usage increases or decreases in steps of 2048, 1024, ... MB, and why the memory usage sometimes does not increase at all when the batch size gets bigger.
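To illustrate the step-like behaviour, here is a toy model in plain Python. It rests on an assumption of mine, not on anything I verified in TensorFlow's source: the pool grows by adding regions of 1, 2, 4, ... MB until the total covers the peak demand. The function name `simulate_reserved_mb` and the parameter `first_region_mb` are made up for this sketch.

```python
def simulate_reserved_mb(peak_demand_mb, first_region_mb=1):
    """Total MB reserved by a toy pool that grows in doubling regions.

    Illustrative assumption only: this is NOT TensorFlow's allocator code.
    """
    reserved = 0
    region = first_region_mb
    # Keep adding regions, each twice as large as the previous one,
    # until the reserved total covers the peak demand.
    while reserved < peak_demand_mb:
        reserved += region
        region *= 2
    return reserved


if __name__ == "__main__":
    # Hypothetical peak demands in MB, chosen only to show the plateaus.
    for demand in (900, 1100, 2000, 2048):
        print(demand, "->", simulate_reserved_mb(demand))
```

In this model, demands of 1100 MB and 2000 MB both end up reserving 2047 MB, which would look like a plateau in nvidia-smi. It only ever grows with demand, though, so it does not explain the drops I ask about next.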

But I am still confused about why the memory usage is lower at batch size 512 than at smaller batch sizes such as 384 and 448. The same pattern appears for batch sizes from 1024 to 1408 and from 2048 to 2688.

Here is my source code:

PyTorch: https://github.com/liupeng3425/tesorflow-vgg/blob/master/vgg-16-pytorch.py

TensorFlow: https://github.com/liupeng3425/tesorflow-vgg/blob/master/vgg-16.py

Edit: I have two Titan XP GPUs in my computer. OS: Linux Mint 18.2 64-bit.

I measure GPU memory usage with the nvidia-smi command.

My code runs on GPU1, which is selected in my code:

    import os

    # Order devices by PCI bus ID, then expose only device 1 to this process.
    os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
    os.environ["CUDA_VISIBLE_DEVICES"] = "1"

I am sure that only one application is using GPU1.

The GPU memory usage of each process can be read from the process list in the nvidia-smi output below.

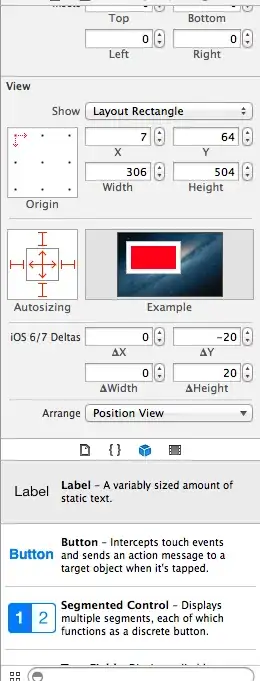

For example, in the posted screenshot, the process name is /usr/bin/python3 and its GPU memory usage is 1563 MiB.
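The same per-process number can also be read programmatically rather than from a screenshot. This is a minimal sketch, assuming nvidia-smi is on the PATH and reports memory as a plain integer in MiB. The helper names `parse_compute_apps` and `query_compute_apps` are my own, and the PID in the sample row is made up.

```python
import subprocess


def parse_compute_apps(csv_text):
    """Parse 'pid, process_name, used_memory' CSV rows into dicts."""
    apps = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # Split the pid off the front and the memory off the back,
        # so a comma inside the process name does not break parsing.
        pid, rest = line.split(",", 1)
        name, used = rest.rsplit(",", 1)
        apps.append({
            "pid": int(pid.strip()),
            "name": name.strip(),
            "used_mib": int(used.strip()),
        })
    return apps


def query_compute_apps():
    """Ask nvidia-smi for the compute processes and their memory use."""
    out = subprocess.check_output(
        [
            "nvidia-smi",
            "--query-compute-apps=pid,process_name,used_memory",
            "--format=csv,noheader,nounits",
        ],
        text=True,
    )
    return parse_compute_apps(out)


if __name__ == "__main__":
    # Sample row shaped like the screenshot; the PID 12345 is hypothetical.
    print(parse_compute_apps("12345, /usr/bin/python3, 1563\n"))
```

On a machine with the NVIDIA driver installed, calling `query_compute_apps()` once per batch size should give the same numbers as the process list. If the driver cannot report memory for a process, the integer conversion fails and would need extra handling.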