Have a look here; it seems to be exactly the answer.

Here, instead, is my version of the above code, fine-tuned for text extraction (with masking too).

Below is the original code from the previous article, "ported" to Python 3 and OpenCV 3, with MSER and bounding boxes added. The main difference from my version is how the grouping distance is defined: mine is text-oriented, while the one below is a free geometric distance.

```python
import sys

import cv2
import numpy as np


def find_if_close(cnt1, cnt2, threshold=25):  # <-- threshold
    """Return True if any point of cnt1 is closer than `threshold` px to any point of cnt2."""
    for p1 in cnt1:
        for p2 in cnt2:
            if np.linalg.norm(p1 - p2) < threshold:
                return True
    return False


img = cv2.imread(sys.argv[1])
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
cv2.imshow('input', img)

ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)

use_mser = False
if use_mser:
    mser = cv2.MSER_create()
    # detectRegions returns (point sets, bounding boxes); see the UPDATE at the end about passing `thresh`
    regions = mser.detectRegions(thresh)
    hulls = [cv2.convexHull(p.reshape(-1, 1, 2)) for p in regions[0]]
    contours = hulls
else:
    thresh = cv2.bitwise_not(thresh)  # findContours wants white objects on a black background
    # OpenCV 3 returns (image, contours, hierarchy), OpenCV 4 returns (contours, hierarchy)
    contours, hier = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]

cv2.drawContours(img, contours, -1, (0, 0, 255), 1)
cv2.imshow('base contours', img)

LENGTH = len(contours)
status = np.zeros((LENGTH, 1))  # group label assigned to each contour
print("Elements:", LENGTH)

# Pairwise pass: close contours share the smaller label,
# distant contours that still share a label get a new one
for i, cnt1 in enumerate(contours):
    x = i
    if i != LENGTH - 1:
        for j, cnt2 in enumerate(contours[i + 1:]):
            x = x + 1
            if find_if_close(cnt1, cnt2):
                val = min(status[i], status[x])
                status[x] = status[i] = val
            else:
                if status[x] == status[i]:
                    status[x] = i + 1

# Merge every label group into a single convex hull
unified = []
maximum = int(status.max()) + 1
for i in range(maximum):
    pos = np.where(status == i)[0]
    if pos.size != 0:
        # np.vstack needs a list, not a generator, in recent NumPy versions
        cont = np.vstack([contours[k] for k in pos])
        hull = cv2.convexHull(cont)
        unified.append(hull)

cv2.drawContours(img, contours, -1, (0, 0, 255), 1)
cv2.drawContours(img, unified, -1, (0, 255, 0), 2)
# cv2.drawContours(thresh, unified, -1, 255, -1)

for c in unified:
    (x, y, w, h) = cv2.boundingRect(c)
    cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)

cv2.imshow('result', img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

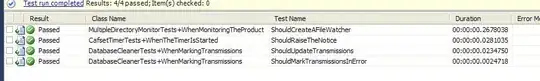

Sample output (the yellow blob does not survive the binary threshold conversion, so it is ignored). Red: original contours; green: unified ones; blue: bounding boxes.

MSER is probably unnecessary here: a simple findContours may work fine.
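The `status` labelling loop above is the original article's approach. As an alternative (not the article's code), the same "group contours that are close to each other" step can be written with a union-find, which also merges chains where A is close to B and B is close to C even if A and C are far apart. This is a minimal sketch on plain `(x, y)` point lists; `group_indices` and `points_close` are hypothetical helper names, and `points_close` mirrors `find_if_close` with the same 25px threshold.

```python
import math


def group_indices(items, is_close):
    """Union-find grouping: items end up together if linked by a chain of close pairs."""
    parent = list(range(len(items)))

    def find(i):
        # Walk up to the root, halving the path as we go
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if find(i) != find(j) and is_close(items[i], items[j]):
                parent[find(i)] = find(j)  # merge the two groups

    groups = {}
    for i in range(len(items)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def points_close(c1, c2, threshold=25):
    """Same test as find_if_close, on plain (x, y) point lists."""
    return any(math.dist(p, q) < threshold for p in c1 for q in c2)
```

With OpenCV contours you could call `group_indices(contours, find_if_close)` and then `np.vstack` each group's contours before `cv2.convexHull`, as in the script above.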

------------------------

From here on is my old answer, written before I found the code above. I'm leaving it because it describes a couple of different approaches that may be easier or more appropriate in some scenarios.

A quick and dirty trick is to add a small Gaussian blur and a high threshold before the MSER (or some dilate/erode if you prefer fancy things). In practice you just make the text bolder so that it fills small gaps. Obviously, you can later discard this version and crop from the original image.
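To show why "blur plus a high threshold" closes small gaps, here is a minimal, dependency-free sketch, assuming a grayscale image stored as a list of rows with dark text (0) on a white background (255). It swaps the Gaussian blur for a simple 3x3 box blur to keep the arithmetic obvious; `bolden` and its default threshold of 240 are hypothetical illustration values. With OpenCV you would use `cv2.GaussianBlur` followed by `cv2.threshold`, or `cv2.erode`/`cv2.dilate` depending on whether your text is dark or light.

```python
def bolden(img, threshold=240):
    """3x3 box blur followed by a high binary threshold (dark text on white)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Collect the 3x3 neighbourhood, clipped at the image border
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            mean = sum(vals) / len(vals)
            # Anything that is not almost pure white becomes text (0)
            row.append(0 if mean < threshold else 255)
        out.append(row)
    return out
```

The threshold has to be "high" for this to work: a pixel with a single black neighbour in a 3x3 box averages about 227, so any threshold above that grows every stroke by one pixel and bridges one-pixel gaps, while a threshold such as 200 only fills pixels that sit between two stroke pixels.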

Otherwise, if your text is laid out in lines, you can try to detect each line's average center (for example, build a histogram of the Y coordinates and find its peaks). Then, for each line, look for fragments with a close average X. This is quite fragile if the text is noisy or complex.

If you do not need to separate individual letters, getting the bounding box of the whole word may be easier: just split the fragments into groups based on a maximum horizontal distance between them (using the leftmost/rightmost points of each contour). Then use the leftmost and rightmost boxes within each group to find the whole bounding box. For multiline text, first group by the centroids' Y coordinate.
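A minimal sketch of this word grouping for a single text line, assuming each fragment is already reduced to an `(x, y, w, h)` box such as `cv2.boundingRect` returns. `group_words` and `max_gap` are hypothetical names, and the gap value is an illustration, not a tuned number. As a small variation on the description above, it takes the union of all boxes in a group rather than only the leftmost and rightmost ones, so a tall letter in the middle of a word is still covered.

```python
def group_words(boxes, max_gap=10):
    """Group (x, y, w, h) boxes on one text line into words by horizontal gap."""
    words = []  # each word is kept as [x0, y0, x1, y1]
    for x, y, w, h in sorted(boxes):  # left to right by x
        if words and x - words[-1][2] <= max_gap:
            # Close enough to the current word's right edge: extend the word
            x0, y0, x1, y1 = words[-1]
            words[-1] = [x0, min(y0, y), max(x1, x + w), max(y1, y + h)]
        else:
            words.append([x, y, x + w, y + h])
    # Convert back to (x, y, w, h)
    return [(x0, y0, x1 - x0, y1 - y0) for x0, y0, x1, y1 in words]
```

Because fragments are compared against the word's right edge so far, broken letters inside a word are simply absorbed, which is why only the first and last letters can cause trouble.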

Implementation notes:

OpenCV can build histograms (`cv2.calcHist`), but you can probably get away with something like this (it worked for me on a similar task):

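```python
import numpy as np


def histogram(vals, th=4, bins=400):
    """Count fragment centroids per 2px band of the image height."""
    hist = np.zeros(bins)
    for y_center in vals:
        bucket = int(round(y_center / 2.))  # <-- change this "2." (pixels per bucket)
        hist[max(bucket - 1, 0)] += 1  # clamp so y < 1 does not wrap to the last bucket
    print("hist: ", hist)
    hist = np.where(hist > th, hist, 0)  # zero out buckets with th or fewer hits (noise)
    return hist
```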

Here my histogram is just an array with 400 buckets (my image was 800px high, so each bucket catches two pixels; that is where the "2." comes from). `vals` are the Y coordinates of the centroids of each fragment (you may want to ignore very small elements when you build this list). The `th` threshold is there just to remove some noise. You should get something like this:

```
0,0,0,5,22,0,0,0,0,43,7,0,0,0
```

This list describes, from top to bottom, how many fragments fall at each location.

Then I ran another pass to merge each peak into a single value (just scan the array, sum while the values are non-zero, and reset the count at the first zero), getting something like this (`{y: count}`):

```
{9:27, 20:50}
```
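A minimal sketch of that merging pass, assuming the bucket layout of the `histogram` function above (bucket index `i` holds centroids that rounded to `i + 1`, so it covers y of roughly `2 * (i + 1)` pixels). `merge_peaks` and `bucket_px` are hypothetical names; as an assumption, it reports each peak at the count-weighted mean Y of its buckets, truncated to an integer.

```python
def merge_peaks(hist, bucket_px=2):
    """Collapse runs of non-zero buckets into {y_pixel: fragment_count}."""
    peaks = {}
    total = 0
    weighted = 0.0
    for idx, count in enumerate(list(hist) + [0]):  # trailing 0 flushes the last run
        if count:
            total += count
            # Bucket idx holds centroids that rounded to idx + 1 (see histogram())
            weighted += count * (idx + 1) * bucket_px
        elif total:
            # First zero after a run: store the run and reset the counters
            peaks[int(weighted / total)] = int(total)
            total, weighted = 0, 0.0
    return peaks
```

Applied to the sample list above, `merge_peaks([0, 0, 0, 5, 22, 0, 0, 0, 0, 43, 7, 0, 0, 0])` returns `{9: 27, 20: 50}`.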

Now I know there are two text rows, at y=9 and y=20. At this point (or earlier) you assign each fragment to a line (again with an 8px threshold, in my case). Then you can process each line on its own to find "words". BTW, I have exactly your problem with broken letters; that's why I came here looking for MSER :). Note that if you find the whole bounding box of the word, this problem only affects the first and last letters: the other broken letters just fall inside the word box anyway.
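A minimal sketch of the line-assignment step, assuming you already have the centroid Y of every fragment and the line positions from the merged peaks. `assign_to_lines` is a hypothetical name, and `max_dist=8` mirrors the 8px threshold mentioned above. Fragments farther than that from every line are dropped as noise; `line_ys` must not be empty.

```python
def assign_to_lines(centroids_y, line_ys, max_dist=8):
    """Map each fragment (by centroid Y) to the nearest text line within max_dist px."""
    lines = {y: [] for y in line_ys}
    for idx, cy in enumerate(centroids_y):
        nearest = min(line_ys, key=lambda ly: abs(ly - cy))
        if abs(nearest - cy) <= max_dist:
            lines[nearest].append(idx)  # store the fragment's index
    return lines
```

Each list of indices can then be turned into boxes and processed on its own, for example with the horizontal-gap word grouping described earlier.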

Here is a reference for erode/dilate, but Gaussian blur plus threshold worked for me.

UPDATE: I've noticed that there is something wrong in this line:

```python
regions = mser.detectRegions(thresh)
```

I pass in the already-thresholded image (!?). This does not affect the aggregation part, but keep in mind that the MSER part is not being used as intended.