I am new to Python and OpenCV. I am currently working on OCR using Python and OpenCV, without Tesseract. So far I have been able to detect the text (characters and digits), but I cannot detect the spaces between words. For example, if the image says "Hello John", every character is detected but the space is lost, so my output is "HelloJohn". My code for extracting the contours looks like this (all the required modules are imported; this is the main part that extracts the contours):

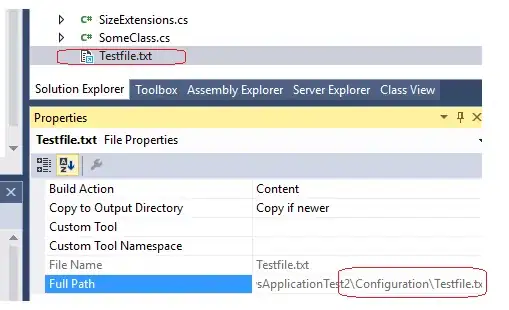

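    # Convert to grayscale and blur to reduce noise
    imgGray = cv2.cvtColor(imgTrainingNumbers, cv2.COLOR_BGR2GRAY)
    imgBlurred = cv2.GaussianBlur(imgGray, (5, 5), 0)

    # Inverted adaptive threshold: text becomes white on a black background
    imgThresh = cv2.adaptiveThreshold(imgBlurred,
                                      255,
                                      cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                      cv2.THRESH_BINARY_INV,
                                      11,
                                      2)
    cv2.imshow("imgThresh", imgThresh)

    # Work on a copy and keep only the outermost contours
    # (OpenCV 3.x returns three values here; OpenCV 4.x returns only contours and hierarchy)
    imgThreshCopy = imgThresh.copy()
    imgContours, npaContours, npaHierarchy = cv2.findContours(imgThreshCopy,
                                                              cv2.RETR_EXTERNAL,
                                                              cv2.CHAIN_APPROX_SIMPLE)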

After this, I classify the extracted contours as digits and characters. How can I detect the spaces between the words? Thank you in advance; any reply would be really helpful.
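One idea I am considering, in case it helps frame the question: compare the horizontal gap between neighbouring bounding boxes with the typical character width, and treat a wide gap as a space. Below is a minimal sketch of that idea, not something I have validated on my images. It assumes a single line of text, that each box is an `(x, y, w, h)` tuple such as `cv2.boundingRect` returns for a contour, and that the character for each box has already been classified. The function name `insert_spaces` and the `gap_ratio` value are placeholders I made up and would need tuning.

```python
from statistics import median


def insert_spaces(boxes, chars, gap_ratio=0.5):
    """Join the recognised characters of one text line, adding spaces at wide gaps.

    boxes: (x, y, w, h) tuples, e.g. from cv2.boundingRect(contour)
    chars: the character recognised for each box, in the same order as boxes
    gap_ratio: hypothetical tuning value; a gap wider than
               gap_ratio * median character width is treated as a space
    """
    if not boxes:
        return ""

    # Contours are not returned in reading order, so sort left to right by x
    items = sorted(zip(boxes, chars), key=lambda item: item[0][0])

    # Use the median width so one unusually wide or narrow glyph does not skew the threshold
    median_width = median(w for (_, _, w, _), _ in items)
    threshold = gap_ratio * median_width

    first_box, first_char = items[0]
    text = first_char
    prev_right = first_box[0] + first_box[2]  # right edge of the previous character

    for (x, _, w, _), ch in items[1:]:
        # Gap between this box's left edge and the previous box's right edge
        if x - prev_right > threshold:
            text += " "
        text += ch
        prev_right = max(prev_right, x + w)

    return text


# Made-up boxes for four characters, deliberately out of order
boxes = [(40, 0, 10, 20), (0, 0, 10, 20), (52, 0, 10, 20), (12, 0, 10, 20)]
chars = ["J", "H", "o", "i"]
print(insert_spaces(boxes, chars))  # prints "Hi Jo"
```

I am not sure whether a fixed ratio of the median width is robust enough for different fonts, or how to extend this to several lines of text, so suggestions for a better approach are welcome.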