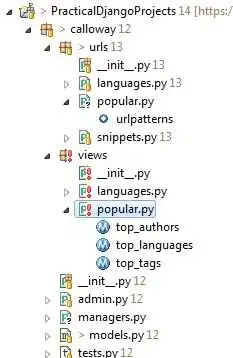

I'm using Spark to run a grid search job with the spark-sklearn package. Here's my config:

NUM_SLAVES = 14

DRIVER_SPARK_MEMORY=53 # "spark.driver.memory"

EXECUTOR_SPARK_MEMORY=5 # "spark.executor.memory"

EXECUTOR_CORES=1 #"spark.executor.cores"

DRIVER_CORES=1 # "spark.driver.cores"

EXECUTOR_INSTANCES=126 # "spark.executor.instances"

SPARK_DYNAMICALLOCATION_ENABLED=false # "spark.dynamicAllocation.enabled"

SPARK_YARN_EXECUTOR_MEMORYOVERHEAD=1024 # "spark.yarn.executor.memoryOverhead"

SPARK_YARN_DRIVER_MEMORYOVERHEAD=6144 # "spark.yarn.driver.memoryOverhead"

SPARK_DEFAULT_PARALLELISM=252 # "spark.default.parallelism"

These values are reflected in the configuration settings on AWS EMR.
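For reference, here is a minimal sketch of how the settings above map onto Spark property names, using the names given in the comments. The `g` suffix on the memory values is an assumption (the numbers above are bare, and I mean GB), and `to_conf_flags` is a hypothetical helper for illustration, not part of Spark.

```python
# Property names are taken from the comments in the config above.
# Assumption: the memory values are gigabytes, hence the "g" suffix.
spark_properties = {
    "spark.driver.memory": "53g",
    "spark.executor.memory": "5g",
    "spark.executor.cores": "1",
    "spark.driver.cores": "1",
    "spark.executor.instances": "126",
    "spark.dynamicAllocation.enabled": "false",
    "spark.yarn.executor.memoryOverhead": "1024",  # interpreted as MiB
    "spark.yarn.driver.memoryOverhead": "6144",    # interpreted as MiB
    "spark.default.parallelism": "252",
}


def to_conf_flags(properties):
    """Render a property mapping as spark-submit `--conf key=value` arguments.

    Hypothetical helper, used only to show the key/value pairs in one place.
    """
    flags = []
    for key, value in properties.items():
        flags.extend(["--conf", f"{key}={value}"])
    return flags


if __name__ == "__main__":
    print(" ".join(to_conf_flags(spark_properties)))
```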

Each slave has 60 GB of memory (c4.8xlarge), and the driver runs on the same machine type. Each machine has 36 vCPUs, which I read on AWS means 18 cores. Based on this, I chose the config above to minimize unused memory.
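The arithmetic behind that choice can be sketched roughly as follows. It assumes executors are spread evenly across the slaves and that YARN does not round container sizes up. The variable names mirror the config above, with units made explicit.

```python
# Values copied from the config and the hardware description above.
NUM_SLAVES = 14
NODE_MEMORY_GB = 60
EXECUTOR_INSTANCES = 126
EXECUTOR_MEMORY_GB = 5
EXECUTOR_OVERHEAD_MB = 1024
EXECUTOR_CORES = 1
DRIVER_MEMORY_GB = 53
DRIVER_OVERHEAD_MB = 6144
DRIVER_CORES = 1

# Assumption: executors are distributed evenly over the slave nodes.
executors_per_node = EXECUTOR_INSTANCES // NUM_SLAVES

# Each executor container needs heap plus overhead.
executor_container_gb = EXECUTOR_MEMORY_GB + EXECUTOR_OVERHEAD_MB / 1024

# Memory the executors ask for on each slave, and what is left over.
memory_per_node_gb = executors_per_node * executor_container_gb
unused_per_node_gb = NODE_MEMORY_GB - memory_per_node_gb

# The driver container, on its own machine of the same type.
driver_container_gb = DRIVER_MEMORY_GB + DRIVER_OVERHEAD_MB / 1024

# Total cores the job asks for: one per executor plus the driver's.
requested_cores = EXECUTOR_INSTANCES * EXECUTOR_CORES + DRIVER_CORES

if __name__ == "__main__":
    print("executors per node:", executors_per_node)
    print("executor container (GB):", executor_container_gb)
    print("executor memory per node (GB):", memory_per_node_gb)
    print("unused memory per node (GB):", unused_per_node_gb)
    print("driver container (GB):", driver_container_gb)
    print("requested cores:", requested_cores)
```

So under these assumptions the job asks for 9 executors (54 GB) per slave, a 59 GB driver container, and 127 cores in total.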

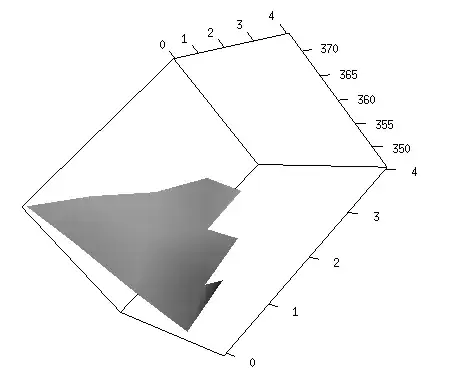

The above picture shows the cluster metrics.

I don't know why only 43 of the 495 available cores are used, although the memory is used properly. I'm using the DominantResourceCalculator, as suggested in this question.

Can someone suggest what I am missing here?