I'm training the Keras object detection model linked at the bottom of this question, although I believe my problem has to do neither with Keras nor with the specific model I'm trying to train (SSD), but rather with the way the data is passed to the model during training.

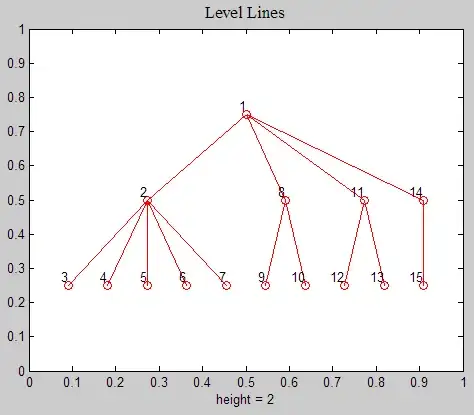

Here is my problem (see image below): My training loss is decreasing overall, but it shows sharp regular spikes:

The unit on the x-axis is not training epochs, but tens of training steps. The spikes occur precisely once every 1390 training steps, which is exactly the number of training steps for one full pass over my training dataset.

The fact that the spikes always occur after each full pass over the training dataset makes me suspect that the problem is not with the model itself, but with the data it is being fed during the training.

I'm using the batch generator provided in the repository to generate batches during training. I checked the source code of the generator and it does shuffle the training dataset before each pass using sklearn.utils.shuffle.

I'm confused for two reasons:

- The training dataset is being shuffled before each pass.

- As you can see in this Jupyter notebook, I'm using the generator's ad-hoc data augmentation features, so the dataset should theoretically never be same for any pass: All the augmentations are random.

I made some test predictions to see if the model is actually learning anything, and it is! The predictions get better over time, but of course the model is learning very slowly since those spikes seem to mess up the gradient every 1390 steps.

Any hints as to what this might be are greatly appreciated! I'm using the exact same Jupyter notebook that is linked above for my training, the only variable I changed is the batch size from 32 to 16. Other than that, the linked notebook contains the exact training process I'm following.

Here is a link to the repository that contains the model: