I have written a parallel program based on CUDA on Windows (GeForce GT 720M). I have installed the CUDA 9.0 Toolkit and Visual Studio 2013. Everything seems to be set up correctly, but when I compile and run the code, the output is wrong.

The program is:

```cuda
#include <stdio.h>
#include "cuda_runtime.h"
#include "device_launch_parameters.h"

__global__ void square(float * d_out, float * d_in)
{
    int idx = threadIdx.x;
    float f = d_in[idx];   // read, but not used below
    d_out[idx] = 50;       // every element should come back as 50
}

int main(int argc, char ** argv)
{
    const int ARRAY_SIZE = 64;
    const int ARRAY_BYTES = ARRAY_SIZE * sizeof(float);

    // generate the input array on the host
    float h_in[ARRAY_SIZE];
    for (int i = 0; i < ARRAY_SIZE; i++)
    {
        h_in[i] = float(i);
    }
    float h_out[ARRAY_SIZE];

    // declare GPU memory pointers
    float * d_in;
    float * d_out;

    // allocate GPU memory
    cudaMalloc((void **) &d_in, ARRAY_BYTES);
    cudaMalloc((void **) &d_out, ARRAY_BYTES);

    // transfer the array to the GPU
    cudaMemcpy(d_in, h_in, ARRAY_BYTES, cudaMemcpyHostToDevice);

    // launch the kernel: 1 block of ARRAY_SIZE threads
    square<<<1, ARRAY_SIZE>>>(d_out, d_in);

    // copy the result array back to the host (CPU)
    cudaMemcpy(h_out, d_out, ARRAY_BYTES, cudaMemcpyDeviceToHost);

    // print out the resulting array, 4 values per line
    for (int i = 0; i < ARRAY_SIZE; i++)
    {
        printf("%f", h_out[i]);
        printf(((i % 4) != 3) ? "\t" : "\n");
    }

    // free GPU memory allocation
    cudaFree(d_in);
    cudaFree(d_out);

    getchar();
    return 0;
}
```
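In the program as written, none of the CUDA calls are checked. A failed launch therefore goes unnoticed, and the loop simply prints whatever ends up in `h_out`. Below is a minimal sketch of the same program with every return code checked. `CHECK` is a hypothetical helper macro and not part of the CUDA runtime. `cudaGetLastError`, `cudaDeviceSynchronize` and `cudaGetErrorString` are standard runtime API calls. If the kernel cannot run on this GPU, the sketch should stop with an error message instead of printing values.

```cuda
#include <stdio.h>
#include <stdlib.h>
#include "cuda_runtime.h"

// Hypothetical helper: print file, line and the CUDA error string, then exit
#define CHECK(call)                                                   \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            fprintf(stderr, "%s:%d: %s\n", __FILE__, __LINE__,        \
                    cudaGetErrorString(err_));                        \
            exit(EXIT_FAILURE);                                       \
        }                                                             \
    } while (0)

__global__ void square(float *d_out, const float *d_in)
{
    int idx = threadIdx.x;
    d_out[idx] = 50.0f;   // same constant as the kernel in the question
}

int main(void)
{
    const int ARRAY_SIZE = 64;
    const int ARRAY_BYTES = ARRAY_SIZE * sizeof(float);
    float h_in[ARRAY_SIZE], h_out[ARRAY_SIZE];
    for (int i = 0; i < ARRAY_SIZE; i++) h_in[i] = float(i);

    float *d_in, *d_out;
    CHECK(cudaMalloc((void **)&d_in, ARRAY_BYTES));
    CHECK(cudaMalloc((void **)&d_out, ARRAY_BYTES));
    CHECK(cudaMemcpy(d_in, h_in, ARRAY_BYTES, cudaMemcpyHostToDevice));

    square<<<1, ARRAY_SIZE>>>(d_out, d_in);
    CHECK(cudaGetLastError());        // launch errors, e.g. no kernel image for this GPU
    CHECK(cudaDeviceSynchronize());   // errors raised while the kernel runs

    CHECK(cudaMemcpy(h_out, d_out, ARRAY_BYTES, cudaMemcpyDeviceToHost));
    printf("h_out[0] = %f, h_out[%d] = %f\n",
           h_out[0], ARRAY_SIZE - 1, h_out[ARRAY_SIZE - 1]);

    CHECK(cudaFree(d_in));
    CHECK(cudaFree(d_out));
    return 0;
}
```

Checking `cudaGetLastError()` right after the launch is useful because a kernel launch does not return an error code itself.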

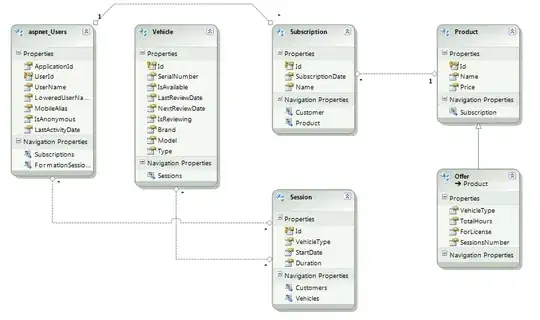
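CUDA 9.0 dropped support for compute capability 2.x (Fermi) GPUs. It may therefore be worth confirming which compute capability the GT 720M reports before looking for bugs in the code itself. Below is a minimal device-query sketch that uses the runtime's `cudaGetDeviceProperties`. The only assumption baked in is the `major < 3` check, which reflects the CUDA 9.0 minimum of compute capability 3.0.

```cuda
#include <stdio.h>
#include "cuda_runtime.h"

int main(void)
{
    int count = 0;
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        printf("cudaGetDeviceCount failed: %s\n", cudaGetErrorString(err));
        return 1;
    }
    if (count == 0) {
        printf("No CUDA devices found\n");
        return 1;
    }

    for (int dev = 0; dev < count; dev++) {
        cudaDeviceProp prop;
        err = cudaGetDeviceProperties(&prop, dev);
        if (err != cudaSuccess) {
            printf("Device %d: query failed: %s\n", dev, cudaGetErrorString(err));
            continue;
        }
        // Report the name and compute capability (major.minor) of each GPU
        printf("Device %d: %s, compute capability %d.%d\n",
               dev, prop.name, prop.major, prop.minor);
        if (prop.major < 3) {
            printf("  CUDA 9.0 and later cannot build kernels for this device\n");
        }
    }
    return 0;
}
```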

I also compiled it from the command line with `nvcc square.cu`, but the output is the same. Visual Studio reports a syntax error on the kernel launch line, but I don't think it is related to the output (the screenshot of that error is from another program):