Here's some C99 source implementing the traditional approach (based on OpenCV doco):

#include "cv.h"

#include "highgui.h"

#include <stdio.h>

#ifndef M_PI

#define M_PI 3.14159265358979323846

#endif

//

// We need this to be high enough to get rid of things that are too small too

// have a definite shape. Otherwise, they will end up as ellipse false positives.

//

#define MIN_AREA 100.00

//

// One way to tell if an object is an ellipse is to look at the relationship

// of its area to its dimensions. If its actual occupied area can be estimated

// using the well-known area formula Area = PI*A*B, then it has a good chance of

// being an ellipse.

//

// This value is the maximum permissible error between actual and estimated area.

//

#define MAX_TOL 100.00

int main( int argc, char** argv )

{

IplImage* src;

// the first command line parameter must be file name of binary (black-n-white) image

if( argc == 2 && (src=cvLoadImage(argv[1], 0))!= 0)

{

IplImage* dst = cvCreateImage( cvGetSize(src), 8, 3 );

CvMemStorage* storage = cvCreateMemStorage(0);

CvSeq* contour = 0;

cvThreshold( src, src, 1, 255, CV_THRESH_BINARY );

//

// Invert the image such that white is foreground, black is background.

// Dilate to get rid of noise.

//

cvXorS(src, cvScalar(255, 0, 0, 0), src, NULL);

cvDilate(src, src, NULL, 2);

cvFindContours( src, storage, &contour, sizeof(CvContour), CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE, cvPoint(0,0));

cvZero( dst );

for( ; contour != 0; contour = contour->h_next )

{

double actual_area = fabs(cvContourArea(contour, CV_WHOLE_SEQ, 0));

if (actual_area < MIN_AREA)

continue;

//

// FIXME:

// Assuming the axes of the ellipse are vertical/perpendicular.

//

CvRect rect = ((CvContour *)contour)->rect;

int A = rect.width / 2;

int B = rect.height / 2;

double estimated_area = M_PI * A * B;

double error = fabs(actual_area - estimated_area);

if (error > MAX_TOL)

continue;

printf

(

"center x: %d y: %d A: %d B: %d\n",

rect.x + A,

rect.y + B,

A,

B

);

CvScalar color = CV_RGB( rand() % 255, rand() % 255, rand() % 255 );

cvDrawContours( dst, contour, color, color, -1, CV_FILLED, 8, cvPoint(0,0));

}

cvSaveImage("coins.png", dst, 0);

}

}

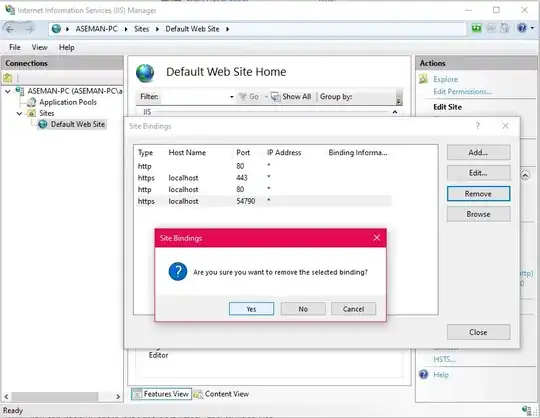

Given the binary image that Carnieri provided, this is the output:

./opencv-contour.out coin-ohtsu.pbm

center x: 291 y: 328 A: 54 B: 42

center x: 286 y: 225 A: 46 B: 32

center x: 471 y: 221 A: 48 B: 33

center x: 140 y: 210 A: 42 B: 28

center x: 419 y: 116 A: 32 B: 19

And this is the output image:

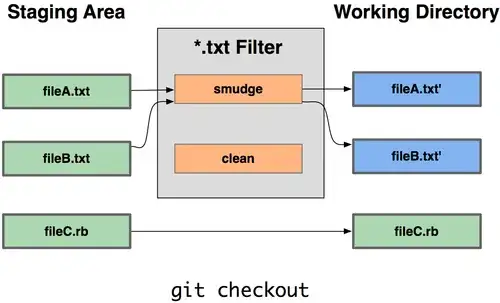

What you could improve on:

- Handle different ellipse orientations (currently, I assume the axes are perpendicular/horizontal). This would not be hard to do using image moments.

- Check for object convexity (have a look at

cvConvexityDefects)

Your best way of distinguishing coins from other objects is probably going to be by shape. I can't think of any other low-level image features (color is obviously out). So, I can think of two approaches:

Traditional object detection

Your first task is to separate the objects (coins and non-coins) from the background. Ohtsu's method, as suggested by Carnieri, will work well here. You seem to worry about the images being bipartite but I don't think this will be a problem. As long as there is a significant amount of desk visible, you're guaranteed to have one peak in your histogram. And as long as there are a couple of visually distinguishable objects on the desk, you are guaranteed your second peak.

Dilate your binary image a couple of times to get rid of noise left by thresholding. The coins are relatively big so they should survive this morphological operation.

Group the white pixels into objects using region growing -- just iteratively connect adjacent foreground pixels. At the end of this operation you will have a list of disjoint objects, and you will know which pixels each object occupies.

From this information, you will know the width and the height of the object (from the previous step). So, now you can estimate the size of the ellipse that would surround the object, and then see how well this particular object matches the ellipse. It may be easier just to use width vs height ratio.

Alternatively, you can then use moments to determine the shape of the object in a more precise way.