I am trying to extract the tiles (letters) placed on a Scrabble board. The goal is to identify and read all the words present on the board.

Ideally, I would like to find the four corners of the Scrabble board and apply a perspective transform for further processing.

The algorithm I am using is as follows:

1. Apply adaptive thresholding to the grayscale image of the Scrabble board.
2. Dilate / close the image, find the largest contour, compute its convex hull, and completely fill the area enclosed by the hull.
3. Find the boundary points (contour) of the resulting image, apply contour approximation to get the corner points, and then apply the perspective transform.

This approach works with images like these. However, as you can see, many square boards sit on a base that is curved at the top and the bottom, and sometimes the base is a large circular board. With these images my approach fails. Example images and outputs:

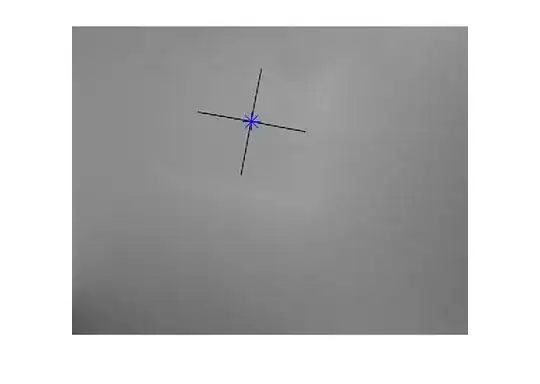

Board with circular base:

Points found using the above approach:

I can post more such problematic images, but this one should give you an idea of the problem I am dealing with. My question is:

How do I find the rectangular board when a circular board is also present in the image?

Some points I would like to note:

I tried using Hough lines to detect the lines in the image, find the longest vertical line(s), and then compute their intersections to locate the corner points. Unfortunately, because of the tiles, all the lines come out distorted or disconnected, so these attempts failed.
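
The intersection step of that attempt can be sketched as follows, assuming each line is given in the `(rho, theta)` form returned by `cv2.HoughLines`, that is, x·cos(theta) + y·sin(theta) = rho. The function names are hypothetical, and the sketch assumes the lines have already been split into near-vertical and near-horizontal groups. It does nothing about the distorted or disconnected lines themselves.

```python
import math


def intersect_hough_lines(line_a, line_b, eps=1e-9):
    """Intersect two (rho, theta) lines; return (x, y), or None if parallel."""
    rho_a, theta_a = line_a
    rho_b, theta_b = line_b
    cos_a, sin_a = math.cos(theta_a), math.sin(theta_a)
    cos_b, sin_b = math.cos(theta_b), math.sin(theta_b)
    # Determinant of the 2x2 system; equals sin(theta_b - theta_a).
    det = cos_a * sin_b - sin_a * cos_b
    if abs(det) < eps:
        return None
    # Cramer's rule.
    x = (rho_a * sin_b - rho_b * sin_a) / det
    y = (rho_b * cos_a - rho_a * cos_b) / det
    return x, y


def corner_candidates(vertical_lines, horizontal_lines):
    """Intersect every vertical line with every horizontal line."""
    points = []
    for v in vertical_lines:
        for h in horizontal_lines:
            p = intersect_hough_lines(v, h)
            if p is not None:
                points.append(p)
    return points
```

With two vertical and two horizontal border lines, `corner_candidates` yields the four corner candidates. Any missing or badly estimated line shifts the corresponding corners directly.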

I have also tried applying contour approximation to all the contours found in the image (I was assuming that the large rectangle would also show up as a contour), but that approach failed as well.

I have implemented the solution in OpenCV-Python. Since the approach is what matters here, and the question was becoming a tad too long, I didn't post the relevant code.

I am happy to share more such problematic images if required. Thank you!

EDIT 1: @Silencer's answer has been mighty helpful to me for identifying the letters in the image, but I also want to accurately find the placement of the words on the board. Hence, I feel that identifying the rows and columns is necessary, and I can do that only once a perspective transform has been applied to the board.
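
To make the rows-and-columns idea concrete, here is a rough sketch of how letter positions could be mapped to grid cells once the board has been warped to a square. It assumes the warped image covers exactly the 15x15 playing grid with no margin, and that letter detections arrive as hypothetical `(letter, x, y)` tuples of tile centres. All function names are illustrative.

```python
BOARD_SIZE = 15  # a standard Scrabble board has 15 x 15 squares


def pixel_to_cell(x, y, side, n=BOARD_SIZE):
    """Map a pixel in a side x side warped image to a (row, col) cell."""
    if not (0 <= x < side and 0 <= y < side):
        return None
    return int(y * n // side), int(x * n // side)


def build_grid(detections, side, n=BOARD_SIZE):
    """Place (letter, x, y) detections into an n x n grid of letters or None."""
    grid = [[None] * n for _ in range(n)]
    for letter, x, y in detections:
        cell = pixel_to_cell(x, y, side, n)
        if cell is not None:
            row, col = cell
            grid[row][col] = letter
    return grid


def words_in_line(cells):
    """Return runs of two or more consecutive letters in one row or column."""
    words, run = [], []
    for ch in list(cells) + [None]:  # trailing None flushes the last run
        if ch is not None:
            run.append(ch)
        else:
            if len(run) >= 2:
                words.append("".join(run))
            run = []
    return words


def read_words(grid):
    """Collect words across all rows first, then down all columns."""
    words = []
    for row in grid:
        words.extend(words_in_line(row))
    for col in zip(*grid):
        words.extend(words_in_line(col))
    return words
```

For example, on a 600-pixel warped board each cell is 40 pixels wide, so a tile centred at `(60, 20)` falls in row 0, column 1.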