I have implemented the VI (Value Iteration), PI (Policy Iteration), and QLearning algorithms in Python. When I compared the results, I noticed something: VI and PI converge to the same utilities and policies, while QLearning, run with the same parameters, converges to different utilities but to the same policies as VI and PI. Is this normal? I have read many papers and books about MDPs and RL, but I couldn't find anything that says whether the utilities from VI and PI should match the utilities from QLearning.

The following information describes my grid world and my results.

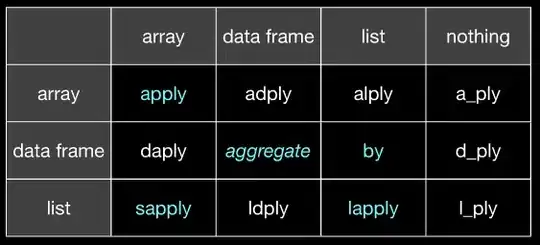

MY GRID WORLD

- States => {s0, s1, ... , s10}

- Actions => {a0, a1, a2, a3}, where a0 = Up, a1 = Right, a2 = Down, a3 = Left (the same four actions in every state)

- There are 4 terminal states, with rewards +1, +1, -10, and +10.

- The initial state is s6.

- An action moves the agent in the intended direction with probability P, and to each side of that direction with probability (1 - P) / 2. (For example, if P = 0.8 and the agent tries to go UP, it goes UP with 80% chance, RIGHT with 10% chance, and LEFT with 10% chance.)
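A minimal Python sketch of the model described above, plus value iteration over it. The real layout is only shown in the image, so the 3x4 grid, the wall position, the terminal cells, and the reward convention (-0.02 received on every move into a non-terminal cell, terminal rewards paid on entry) are hypothetical; bumping into a wall or the border is also assumed to leave the agent in place.

```python
# Hypothetical 3x4 layout (the real one is only in the image): one wall,
# 11 usable cells, and four terminals with rewards +1, +1, -10, +10.
ROWS, COLS = 3, 4
WALLS = {(1, 1)}
TERMINALS = {(0, 0): 1.0, (2, 0): 1.0, (1, 3): -10.0, (0, 3): 10.0}
STEP_REWARD = -0.02  # assumed: received on every move into a non-terminal cell
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # a0 = Up, a1 = Right, a2 = Down, a3 = Left
STATES = [(r, c) for r in range(ROWS) for c in range(COLS) if (r, c) not in WALLS]


def move(state, action):
    """Deterministic move; hitting a wall or the border leaves the agent in place (assumption)."""
    r, c = state[0] + MOVES[action][0], state[1] + MOVES[action][1]
    if 0 <= r < ROWS and 0 <= c < COLS and (r, c) not in WALLS:
        return (r, c)
    return state


def transitions(state, action, p=0.8):
    """{next_state: prob}: intended direction with p, each perpendicular side with (1 - p) / 2."""
    side = (1 - p) / 2
    probs = {}
    for a, prob in ((action, p), ((action + 1) % 4, side), ((action + 3) % 4, side)):
        nxt = move(state, a)
        probs[nxt] = probs.get(nxt, 0.0) + prob
    return probs


def reward(next_state):
    """Terminal reward on entering a terminal cell, otherwise the step reward."""
    return TERMINALS.get(next_state, STEP_REWARD)


def q_value(V, s, a, gamma, p):
    """Expected return of taking a in s, computed from the known transition model."""
    return sum(pr * (reward(s2) + gamma * V[s2]) for s2, pr in transitions(s, a, p).items())


def value_iteration(gamma=0.8, p=0.8, tol=1e-6):
    V = {s: 0.0 for s in STATES}  # terminals keep V = 0 because their reward is paid on entry
    while True:
        new_V = {s: V[s] if s in TERMINALS else max(q_value(V, s, a, gamma, p) for a in range(4))
                 for s in STATES}
        delta = max(abs(new_V[s] - V[s]) for s in STATES)
        V = new_V
        if delta < tol:
            break
    policy = {s: max(range(4), key=lambda a: q_value(V, s, a, gamma, p))
              for s in STATES if s not in TERMINALS}
    return V, policy
```

`value_iteration()` returns `(V, policy)`; under these assumptions the greedy action in cell `(0, 2)`, next to the +10 terminal, is `1` (Right). Because every backup sums over `transitions(...)`, the slip probabilities feed directly into each utility.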

RESULTS

- VI and PI results with Reward = -0.02, Discount Factor = 0.8, Probability = 0.8

- VI converges after 50 iterations; PI converges after 3 iterations.

- QLearning results with Reward = -0.02, Discount Factor = 0.8, Learning Rate = 0.1, Epsilon (for exploration) = 0.1

- The utilities shown in the QLearning results image are, for each state, the maximum Q(s, a) value over all actions a.

![QLearning results after 1 million and 10 million iterations](qLearning_1million_10million_iterations_results.png)
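For comparison, a minimal tabular Q-learning sketch on a hypothetical 3x4 grid, using the QLearning parameters listed above (α = 0.1 held constant, γ = 0.8, ε = 0.1). The layout, the start cell `(0, 1)`, the episode count, and the reward convention are assumptions for illustration. `step` samples the slip model, so the learner only ever sees the sampled next state and reward, never the probabilities themselves. The reported utilities are max over a of Q(s, a), as in the results image.

```python
import random

# Hypothetical 3x4 layout (assumption): one wall, four terminals (+1, +1, -10, +10).
ROWS, COLS = 3, 4
WALLS = {(1, 1)}
TERMINALS = {(0, 0): 1.0, (2, 0): 1.0, (1, 3): -10.0, (0, 3): 10.0}
STEP_REWARD = -0.02  # assumed: received on every move into a non-terminal cell
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # Up, Right, Down, Left


def move(state, action):
    """Deterministic move; hitting a wall or the border leaves the agent in place (assumption)."""
    r, c = state[0] + MOVES[action][0], state[1] + MOVES[action][1]
    if 0 <= r < ROWS and 0 <= c < COLS and (r, c) not in WALLS:
        return (r, c)
    return state


def step(state, action, rng, p=0.8):
    """Sample one transition: intended move with p, each perpendicular side with (1 - p) / 2."""
    u = rng.random()
    if u < p:
        actual = action
    elif u < p + (1 - p) / 2:
        actual = (action + 1) % 4
    else:
        actual = (action + 3) % 4
    nxt = move(state, actual)
    return nxt, TERMINALS.get(nxt, STEP_REWARD)


def q_learning(episodes=5000, alpha=0.1, gamma=0.8, epsilon=0.1, start=(0, 1), seed=0):
    rng = random.Random(seed)
    Q = {(r, c): [0.0] * 4 for r in range(ROWS) for c in range(COLS)
         if (r, c) not in WALLS and (r, c) not in TERMINALS}
    for _ in range(episodes):
        s = start  # hypothetical start cell
        while s not in TERMINALS:
            # epsilon-greedy behaviour policy
            if rng.random() < epsilon:
                a = rng.randrange(4)
            else:
                a = max(range(4), key=lambda i: Q[s][i])
            s2, r = step(s, a, rng)
            target = r if s2 in TERMINALS else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])  # constant learning rate
            s = s2
    utilities = {s: max(q) for s, q in Q.items()}  # max_a Q(s, a) per state
    policy = {s: max(range(4), key=lambda i: q[i]) for s, q in Q.items()}
    return utilities, policy
```

`q_learning()` returns `(utilities, policy)` for the 7 non-terminal cells of this hypothetical grid, so they can be compared cell by cell with the output of a value-iteration run on the same layout.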

I also noticed that after 1 million QLearning iterations, states that are equally far from the +10 terminal have the same utilities. The agent does not seem to care whether its path to the reward passes near the -10 terminal, whereas in VI and PI it does. Is this because, in QLearning, we don't know the transition probabilities of the environment?
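For reference, these are the standard textbook forms of the two updates being compared, written in standard notation with a reward R(s, a, s'), discount γ, and learning rate α, and with V(s') and max Q(s', ·) taken as 0 when s' is terminal. The value-iteration backup sums over the transition model P explicitly; the Q-learning update replaces that sum with a single sampled transition (s, a, r, s'), so P only enters through how often each s' happens to be sampled. The last line is the relation used to turn Q-values into utilities.

$$V_{k+1}(s) = \max_{a} \sum_{s'} P(s' \mid s, a)\,\bigl[R(s, a, s') + \gamma\, V_k(s')\bigr]$$

$$Q(s, a) \leftarrow Q(s, a) + \alpha\,\bigl[r + \gamma \max_{a'} Q(s', a') - Q(s, a)\bigr], \qquad s' \sim P(\cdot \mid s, a)$$

$$V^*(s) = \max_{a} Q^*(s, a)$$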