I am writing code that figures out which frequencies (notes) are being played at any given time in a song. For now I am testing it on only the first second of the song. To do this, I break the first second of the audio file into 8 chunks, then perform an FFT on each chunk and plot it with the following code:

```matlab
% Take one second of an audio file, break it into n chunks, and
% figure out which frequencies make up each of those chunks
clear all;

% Read audio (audioread also returns the file's sample rate)
[audio, fs] = audioread('song.wav');   % fs: sample frequency (Hz)
audio = audio(:, 1);                   % use the first channel if the file is stereo

% Perform FFT and get frequencies
chunks = 8;                    % how many chunks to break the wave into
chunkLen = floor(fs/chunks);   % samples per chunk (44100/8 = 5512.5 is not an integer index)

for i = 1:chunks
    beginningChunk = (i-1)*chunkLen + 1;
    endChunk = i*chunkLen;
    x = audio(beginningChunk:endChunk);

    y = fft(x);
    n = length(x);                 % number of samples in chunk
    amp = abs(y)/n;                % amplitude of the DFT
    %%%amp = amp(1:fs/2/chunks);   % note this is my attempt that I think is wrong
    f = (0:n-1)*(fs/n);            % frequency of each DFT bin
    %%%f = f(1:fs/2/chunks);       % note this is my attempt that I think is wrong

    figure(i);
    plot(f, amp)
    xlabel('Frequency (Hz)')
    ylabel('Amplitude')
end
```

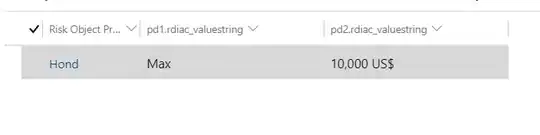

When I do that I get graphs that look like these:

It looks like I am plotting too many points: the amplitudes climb back up at the far right of each graph, so I think I am plotting the double-sided spectrum. I think I need to use only the samples from 1:fs/2, but each chunk has only about fs/chunks samples, so the vector is not long enough to index that far. I tried 1:fs/2/chunks instead, but I am not convinced those are the right values, so I commented those lines out. How can I get the single-sided spectrum when a chunk has fewer than fs/2 samples?
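For reference, a single-sided spectrum does not need fs/2 samples. An n-sample chunk yields n DFT bins spaced fs/n Hz apart, and for a real signal bins 0 through floor(n/2) already span 0 Hz to fs/2. The upper half mirrors the lower half, which is why the peaks reappear on the right. With n = fs/chunks, keeping floor(n/2)+1 bins is close to what the commented-out `1:fs/2/chunks` lines attempt. The sketch below is in Python (standard library only), with a naive O(n²) DFT standing in for MATLAB's `fft`. It assumes real-valued input. The function name `single_sided_spectrum` is hypothetical.

```python
import cmath
import math

def single_sided_spectrum(x, fs):
    """Return (freqs, amps): the one-sided amplitude spectrum of real signal x.

    Uses a naive O(n^2) DFT purely for illustration.
    """
    n = len(x)
    half = n // 2 + 1                      # bins 0 .. floor(n/2): 0 Hz up to fs/2
    freqs = [k * fs / n for k in range(half)]
    amps = []
    for k in range(half):
        s = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        a = abs(s) / n                     # same scaling as abs(fft(x))/n
        # Double every bin except DC and (for even n) the Nyquist bin,
        # because their mirrored negative-frequency partners were discarded.
        if k != 0 and not (n % 2 == 0 and k == n // 2):
            a *= 2
        amps.append(a)
    return freqs, amps
```

In MATLAB terms the same idea is roughly `amp = amp(1:floor(n/2)+1); amp(2:end-1) = 2*amp(2:end-1); f = f(1:floor(n/2)+1);` for even n.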

As a side note, when I plot all the graphs I notice that the frequencies in every chunk are almost exactly the same. This surprises me, because I thought I had made the chunks small enough that each one would capture only the frequencies sounding at that moment, and therefore the note currently being played. If anyone knows a better way to single out which note is being played at each point in time, that information would be greatly appreciated.
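Chunk length also sets a trade-off. The DFT bin spacing is fs/n, and when one second is split into `chunks` pieces, n ≈ fs/chunks, so the spacing is about `chunks` Hz (≈ 8 Hz here). Near A2 (110 Hz) adjacent semitones are only about 6.5 Hz apart, so low notes can land in the same bin. Shorter chunks give finer time detail but coarser frequency detail. Below is a small Python sketch, with all function names hypothetical, for checking the resolution and mapping a peak frequency to the nearest note. It assumes equal temperament with A4 = 440 Hz. It also assumes the largest non-DC bin is the note of interest, which may not hold, since a harmonic can be stronger than the fundamental.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def bin_spacing(fs, n):
    """Frequency resolution (Hz) of an n-point DFT at sample rate fs."""
    return fs / n

def nearest_note(freq_hz):
    """Map a frequency to the nearest equal-tempered note (assumes A4 = 440 Hz)."""
    midi = round(69 + 12 * math.log2(freq_hz / 440.0))
    name = NOTE_NAMES[midi % 12]
    octave = midi // 12 - 1            # MIDI convention: note 60 is C4
    return f"{name}{octave}", midi

def peak_frequency(freqs, amps):
    """Frequency of the largest bin, skipping the DC bin (index 0)."""
    k = max(range(1, len(amps)), key=lambda i: amps[i])
    return freqs[k]
```

For example, `nearest_note(peak_frequency(freqs, amps))` can be applied per chunk to the one-sided spectrum. In MATLAB, the Signal Processing Toolbox function `spectrogram` computes this kind of short-time FFT across a whole signal.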