I was trying to understand the that does the rehashing of hashmap takes place while exceeding the number of buckets occupied or the total number of entries in all buckets. Means, we know that if 12 out of 16 (One entry in each bucket) of buckets are full (Considering default loadfactor and initial capacity), then we know on the next entry the hashmap will be rehashed. But what about that case when suppose only 3 buckets are occupied with 4 entries each (Total 12 entries but only 3 buckets out of 16 in use)?

So I tried to replicate this by making the worst hash function which will put all entries in a single bucket.

Here is my code.

class X {

public Integer value;

public X(Integer value) {

super();

this.value = value;

}

@Override

public int hashCode() {

return 1;

}

@Override

public boolean equals(Object obj) {

X a = (X) obj;

if(this.value.equals(a.value)) {

return true;

}

return false;

}

}

Now I started putting values in hashmap.

HashMap<X, Integer> map = new HashMap<>();

map.put(new X(1), 1);

map.put(new X(2), 2);

map.put(new X(3), 3);

map.put(new X(4), 4);

map.put(new X(5), 5);

map.put(new X(6), 6);

map.put(new X(7), 7);

map.put(new X(8), 8);

map.put(new X(9), 9);

map.put(new X(10), 10);

map.put(new X(11), 11);

map.put(new X(12), 12);

map.put(new X(13), 13);

System.out.println(map.size());

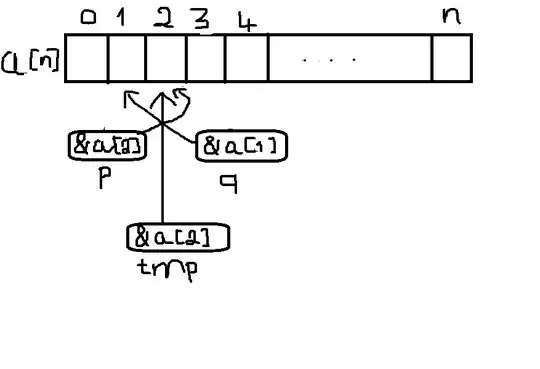

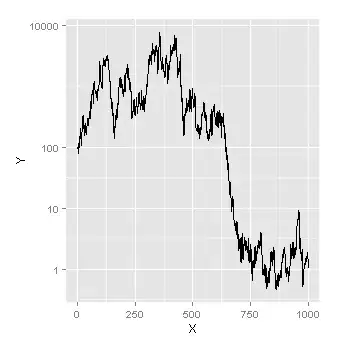

All the nodes were entering the single bucket as expected, but I noticed that on the 9th entry, the hashmap rehashed and doubled its capacity. Now on the 10th entry, It again doubled its capacity.

Can anyone explain how this is happening?

Thanks in advance.