I have a project that invokes Android's default speech recognition system using RecognizerIntent.ACTION_RECOGNIZE_SPEECH.

The UI consists of one TextView, which shows the result of speech recognition, and one button, which starts speech recognition.

I also record the input to the speech recogniser in an AMR file using the method shown here:

record/save audio from voice recognition intent

This is possible because the Intent returned by the speech recogniser activity carries a Uri for the input audio, which can be opened as an InputStream through the ContentResolver.
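To make the copying step concrete, here is a minimal, Android-free sketch of reading an InputStream into an OutputStream. The class name `StreamCopy` and the method `copy` are hypothetical names used only for illustration, and the `-1` return for a missing stream is my own convention; in the app the input would come from `contentResolver.openInputStream(audioUri)` rather than from a byte array.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper, not part of the Android SDK.
final class StreamCopy {

    /**
     * Copies all bytes from in to out.
     * Returns the number of bytes copied, or -1 if in is null
     * (for example, when no audio stream was returned).
     */
    public static long copy(InputStream in, OutputStream out) throws IOException {
        if (in == null) {
            return -1; // nothing to read, so nothing is written
        }
        byte[] buffer = new byte[1024];
        long total = 0;
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
            total += read;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] fakeAudio = {1, 2, 3, 4, 5}; // stand-in for the AMR bytes
        // try-with-resources closes both streams even if the copy fails
        try (InputStream in = new ByteArrayInputStream(fakeAudio);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copy(in, out);
            System.out.println("Copied " + copied + " bytes");
        }
    }
}
```

A helper like this only covers the case where a stream exists; it does not by itself recover audio that the recogniser never hands back.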

However, I have noticed that when the speech recogniser activity fails to recognise any speech (as opposed to recognising it incorrectly), onActivityResult(...) is still called, but no Intent data is returned, which causes a NullPointerException when I try to read the AMR audio. How do I get the input to the speech recogniser when it fails to recognise speech?

Code:

    import android.content.ContentResolver;
    import android.content.Intent;
    import android.net.Uri;
    import android.os.Environment;
    import android.speech.RecognizerIntent;
    import android.support.v7.app.AppCompatActivity;
    import android.os.Bundle;
    import android.util.Log;
    import android.view.View;
    import android.widget.Button;
    import android.widget.TextView;
    import android.widget.Toast;

    import java.io.File;
    import java.io.FileNotFoundException;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.util.ArrayList;
    import java.util.Locale;

    public class MainActivity extends AppCompatActivity {

        Button btnSpeak;
        TextView txtViewResult;

        private static final int VOICE_RECOGNITION = 1;

        String saveFileLoc = Environment.getExternalStorageDirectory().getPath();
        File fileAmrFile = new File(saveFileLoc+"/recordedSpeech.amr");
        OutputStream outputStream = null;
        InputStream filestream = null;
        Uri audioUri = null;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);

            btnSpeak = (Button)findViewById(R.id.button1);
            txtViewResult = (TextView)findViewById(R.id.textView1);

            if(fileAmrFile.exists() ){
                fileAmrFile.delete();
            }

            runprog();
        } // protected void onCreate(Bundle savedInstanceState) { CLOSED

        private void runprog(){
            btnSpeak.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View view) {
                    // Toast.makeText(getApplicationContext(), "Button pressed", Toast.LENGTH_LONG).show();

                    // Fire an intent to start the speech recognition activity.
                    Intent intent = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);

                    // Specify free form input
                    intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM);
                    intent.putExtra(RecognizerIntent.EXTRA_PROMPT,"Please start speaking");
                    intent.putExtra(RecognizerIntent.EXTRA_MAX_RESULTS, 1);
                    intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.ENGLISH);

                    // secret parameters that when added provide audio url in the result
                    intent.putExtra("android.speech.extra.GET_AUDIO_FORMAT", "audio/AMR");
                    intent.putExtra("android.speech.extra.GET_AUDIO", true);

                    startActivityForResult(intent, VOICE_RECOGNITION);
                }
            });
        } // private void runprog(){ CLOSED

        // handle result of speech recognition
        @Override
        public void onActivityResult(int requestCode, int resultCode, Intent data) {
            // if (requestCode == VOICE_RECOGNITION && resultCode == RESULT_OK) {
            if (requestCode == VOICE_RECOGNITION ) {

                ArrayList<String> results;
                results = data.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS);
                txtViewResult.setText(results.get(0));
                Log.v("MYLOG", "Speech 2 text");

                // the required audio will be returned by getExtras:
                Bundle bundle = data.getExtras();
                ArrayList<String> matches = bundle.getStringArrayList(RecognizerIntent.EXTRA_RESULTS);
                // Toast.makeText(getApplicationContext(), "HEREHERE", Toast.LENGTH_LONG).show();
                Log.v("MYLOG", "B4 Uri creation");

                // /*
                // the recording url is in getData:
                audioUri = data.getData();
                ContentResolver contentResolver = getContentResolver();
                try {
                    filestream = contentResolver.openInputStream(audioUri);
                } catch (FileNotFoundException e) {
                    e.printStackTrace();
                }

                // TODO: read audio file from inputstream
                try {
                    outputStream = new FileOutputStream(new File(saveFileLoc + "/recordedSpeech.wav"));
                } catch (FileNotFoundException e) {
                    e.printStackTrace();
                }

                try {
                    int read = 0;
                    byte[] bytes = new byte[1024];

                    while ((read = filestream.read(bytes)) != -1) {
                        outputStream.write(bytes, 0, read);
                    }

                    // System.out.println("Done!");
                    Toast.makeText(getApplicationContext(), "Done", Toast.LENGTH_LONG).show();
                } catch (IOException e) {
                    e.printStackTrace();
                } finally {
                    if (filestream != null) {
                        try {
                            filestream.close();
                        } catch (IOException e) {
                            e.printStackTrace();
                        }
                    }

                    if (outputStream != null) {
                        try {
                            // outputStream.flush();
                            outputStream.close();
                        } catch (IOException e) {
                            e.printStackTrace();
                        }
                    }
                }
                // */
            }
        } // public void onActivityResult(int requestCode, int resultCode, Intent data) { CLOSED

    } // public class MainActivity extends AppCompatActivity { CLOSED

layout.xml:

    <?xml version="1.0" encoding="utf-8"?>
    <LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent"
        android:orientation="vertical" >

        <TextView
            android:id="@+id/textView1"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:text="@string/spoken" />

        <Button
            android:id="@+id/button1"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_alignLeft="@+id/textView1"
            android:layout_below="@+id/textView1"
            android:text="@string/button_text"
            />

    </LinearLayout>

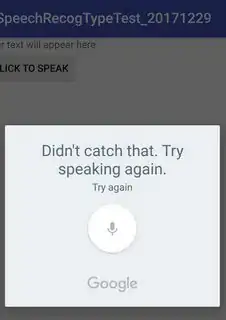

For reference, the screenshot above shows what I mean when I say the speech recogniser fails to recognise speech. Note that this is different from a wrong detection: when the recogniser detects the wrong words, it is still recognising speech.

Phone details:

Model : Samsung Galaxy S4

Model No : SHV-E330L

Android version : 5.0.1