tl;dr: When I threshold an image with a specific threshold in Swift, I get a clean segmentation (and double-checking it in Matlab gives a perfect match), but when I do the same thing in a Core Image kernel, it doesn't segment cleanly. Do I have a bug in my kernel?

I'm trying to threshold with a Core Image kernel. My code seems simple enough:

```swift
import CoreImage

class ThresholdFilter: CIFilter {
    var inputImage: CIImage?
    var threshold: Float = 0.554688 // This is set to a good value via Otsu's method

    // Color kernel: outputs black or white depending on whether the pixel's
    // weighted sum (0.114*R + 0.587*G + 0.299*B, applied to pixel.rgb)
    // is below `threshold`.
    var thresholdKernel = CIColorKernel(source:
        "kernel vec4 thresholdKernel(sampler image, float threshold) {" +
        " vec4 pixel = sample(image, samplerCoord(image));" +
        " const vec3 rgbToIntensity = vec3(0.114, 0.587, 0.299);" +
        " float intensity = dot(pixel.rgb, rgbToIntensity);" +
        " return intensity < threshold ? vec4(0, 0, 0, 1) : vec4(1, 1, 1, 1);" +
        "}")

    override var outputImage: CIImage! {
        guard let inputImage = inputImage,
              let thresholdKernel = thresholdKernel else {
            return nil
        }
        let extent = inputImage.extent
        let arguments: [Any] = [inputImage, threshold]
        return thresholdKernel.apply(extent: extent, arguments: arguments)
    }
}
```
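The threshold above comes from Otsu's method. For reference, here is a minimal sketch of Otsu's method over a 256-bin histogram of 8-bit intensities. The function name `otsuThreshold` and the divide-by-255 scaling of the result are assumptions for illustration; this is not the code that produced 0.554688.

```swift
/// Otsu's method: choose the histogram level that maximizes the
/// between-class variance of the "dark" and "light" pixel classes.
/// Hypothetical helper; returns the chosen level scaled to 0...1 by 1/255.
func otsuThreshold(histogram: [Int]) -> Float {
    precondition(histogram.count == 256, "expects a 256-bin histogram")
    let total = Double(histogram.reduce(0, +))
    guard total > 0 else { return 0 }

    // Weighted sum of all levels, used to get the light-class mean cheaply.
    var sumAll = 0.0
    for (level, count) in histogram.enumerated() {
        sumAll += Double(level * count)
    }

    var weightBackground = 0.0  // pixel count at or below the candidate level
    var sumBackground = 0.0     // sum of levels at or below the candidate level
    var bestVariance = -1.0
    var bestLevel = 0

    for level in 0..<256 {
        weightBackground += Double(histogram[level])
        sumBackground += Double(level * histogram[level])
        if weightBackground == 0 { continue }
        let weightForeground = total - weightBackground
        if weightForeground == 0 { break }

        let meanBackground = sumBackground / weightBackground
        let meanForeground = (sumAll - sumBackground) / weightForeground
        let diff = meanBackground - meanForeground
        // Between-class variance, up to a constant factor of 1 / total^2.
        let betweenVariance = weightBackground * weightForeground * diff * diff
        if betweenVariance > bestVariance {
            bestVariance = betweenVariance
            bestLevel = level
        }
    }
    // Levels <= bestLevel form the dark class.
    return Float(bestLevel) / 255
}
```

For example, a histogram with 100 pixels at level 50 and 100 at level 200 returns 50/255: every split between the two spikes separates them equally, and the strict `>` keeps the first one.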

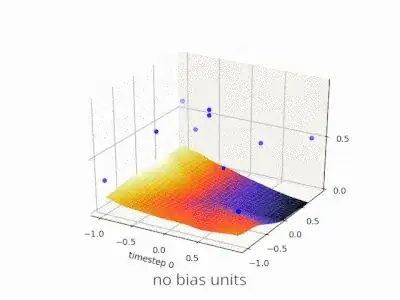

And images like this simple leaf:

get properly thresholded:

But some images, like this one with a muddier background:

become garbage:

I don't think it's simply a matter of choosing a poor threshold, as I can use this exact same threshold in Matlab and get a clean segmentation:

To double-check, I reimplemented the kernel's logic in pure Swift inside `outputImage`, printing the result to the console:

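```swift
// vImage_Buffer comes from the Accelerate framework (import Accelerate).
let img: CGImage = inputImage.cgImage!
let imgProvider: CGDataProvider = img.dataProvider!
let imgBitmapData: CFData = imgProvider.data!
let imgBuffer = vImage_Buffer(data: UnsafeMutableRawPointer(mutating: CFDataGetBytePtr(imgBitmapData)),
                              height: vImagePixelCount(img.height),
                              width: vImagePixelCount(img.width),
                              rowBytes: img.bytesPerRow)

// Half-open ranges: 0...height / 0...width would read one row/column past the end.
for i in 0..<img.height {
    for j in 0..<img.width {
        // Rows may be padded, so step by bytesPerRow; assumes 4 bytes per pixel.
        let test = imgBuffer.data.load(fromByteOffset: i * img.bytesPerRow + j * 4, as: UInt32.self)
        // Which memory byte lands in r, g, b depends on the bitmap's byte order
        // and the CPU's endianness. Dividing by 256 maps 255 to ~0.996.
        let r = Float((test >> 16) & 255) / 256
        let g = Float((test >> 8) & 255) / 256
        let b = Float(test & 255) / 256
        let intensity = 0.114 * r + 0.587 * g + 0.299 * b
        print(intensity > threshold ? "1" : "0", terminator: "")
    }
    print("")
}
```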

And this prints a cleanly segmented image in 0s and 1s. I can't zoom out far enough to get it on my screen all at once, but you can see the hole in the leaf clearly segmented:

I was worried that pixel intensities might differ between Matlab and the kernel (there are several ways to convert RGB to intensity), so I used this console-printing method to check the exact intensities of various pixels, and they all matched the intensities Matlab reports for the same image. Since Swift and the kernel use the same dot product, I'm at a loss as to why this threshold works in Swift and Matlab but not in the kernel.
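The comparison above checks Swift against Matlab, but the kernel's own intensities were never printed. Two things can make "the same dot product" produce different numbers, and this plain-Swift sketch (no Core Image; the function names are hypothetical) isolates both. First, it shows which memory byte each bit shift in the loop picks, which depends on the bitmap's byte order and the CPU's endianness. Second, it shows what the same weighted sum gives on sRGB-encoded versus linear-light values. The second part assumes Core Image's default linear working color space applies to this filter; whether it does depends on how the `CIContext` is configured.

```swift
import Foundation

// Part 1: mirror the loop's extraction. Copy four bytes into a native
// UInt32 and pull out bits 16-23, 8-15 and 0-7.
func shiftedChannels(_ bytes: [UInt8]) -> (hi: UInt8, mid: UInt8, lo: UInt8) {
    precondition(bytes.count == 4, "expects exactly one 4-byte pixel")
    var word: UInt32 = 0
    withUnsafeMutableBytes(of: &word) { $0.copyBytes(from: bytes) }
    return (UInt8((word >> 16) & 255), UInt8((word >> 8) & 255), UInt8(word & 255))
}

// Part 2: standard sRGB decoding curve, encoded 0...1 -> linear light 0...1.
func srgbToLinear(_ c: Double) -> Double {
    c <= 0.04045 ? c / 12.92 : pow((c + 0.055) / 1.055, 2.4)
}

// The kernel's weights, in the order its dot product applies them to R, G, B.
func weightedIntensity(r: Double, g: Double, b: Double) -> Double {
    0.114 * r + 0.587 * g + 0.299 * b
}

// Usage
let (hi, mid, lo) = shiftedChannels([10, 20, 30, 255])  // bytes R, G, B, A in memory
print(hi, mid, lo)  // on a little-endian CPU: 30 20 10

let grey = 0.7
let encoded = weightedIntensity(r: grey, g: grey, b: grey)
let lin = srgbToLinear(grey)
let linearIntensity = weightedIntensity(r: lin, g: lin, b: lin)
print(encoded > 0.554688, linearIntensity > 0.554688)  // true false
```

If the bitmap is RGBA and the CPU is little-endian, the variable the loop calls `r` holds the blue byte, and a mid-light grey can land on opposite sides of 0.554688 depending on whether it is sRGB-encoded or linear.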

Any ideas what's going on?