I vectorized the OP's code using GCC's and Clang's vector extensions. Before showing how, here is the performance on the following hardware:

Skylake (SKL) at 3.1 GHz with 4 cores

Knights Landing (KNL) at 1.5 GHz with 68 cores

ARMv8 Cortex-A57 AArch64 (Nvidia Jetson TX1) with 4 cores at ? GHz

nb_iter = 1000000

| Configuration | GCC | Clang |
|---|---|---|
| SKL_scalar | 6m5.422s | |
| SKL_SSE41 | 3m18.058s | |
| SKL_AVX2 | 1m37.843s | 1m39.943s |
| SKL_scalar_omp | 0m52.237s | |
| SKL_SSE41_omp | 0m29.624s | 0m31.356s |
| SKL_AVX2_omp | 0m14.156s | 0m16.783s |
| ARM_scalar | 15m28.285s | |
| ARM_vector | 9m26.384s | |
| ARM_scalar_omp | 3m54.242s | |
| ARM_vector_omp | 2m21.780s | |
| KNL_scalar | 19m34.121s | |
| KNL_SSE41 | 11m30.280s | |
| KNL_AVX2 | 5m0.005s | 6m39.568s |
| KNL_AVX512 | 2m40.934s | 6m20.061s |
| KNL_scalar_omp | 0m9.108s | |
| KNL_SSE41_omp | 0m6.666s | 0m6.992s |
| KNL_AVX2_omp | 0m2.973s | 0m3.988s |
| KNL_AVX512_omp | 0m1.761s | 0m3.335s |

The theoretical speed-up of KNL over SKL is

(68 cores / 4 cores) * (1.5 GHz / 3.1 GHz) * (8 doubles per vector / 4 doubles per vector) = 16.45
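As a quick check of that arithmetic against the timings, here is a small sketch. The function names are made up for illustration, and the measured ratio uses the GCC timings for `SKL_AVX2_omp` and `KNL_AVX512_omp` listed above.

```cpp
#include <cstdio>

// Peak-throughput ratio estimated from core count, clock and vector width,
// using the figures from the hardware list.
double theoretical_speedup() {
    const double cores = 68.0 / 4.0;  // KNL cores / SKL cores
    const double clock = 1.5 / 3.1;   // KNL GHz / SKL GHz
    const double width = 8.0 / 4.0;   // doubles per AVX-512 vector / per AVX2 vector
    return cores * clock * width;
}

// Ratio of the measured GCC timings:
// SKL_AVX2_omp (14.156 s) over KNL_AVX512_omp (1.761 s).
double measured_speedup() {
    return 14.156 / 1.761;
}

void print_speedups() {
    std::printf("theoretical %.2f, measured %.2f\n",
                theoretical_speedup(), measured_speedup());
}
```

With these numbers the measured ratio comes out at roughly half of the theoretical one.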

I went into detail about the capabilities of GCC's and Clang's vector extensions here. Vectorizing the OP's code requires three additional vector operations.

1. Broadcasting

For a vector v and a scalar s, GCC cannot do v = s but Clang can. I found a nice solution that works for both GCC and Clang here. For example:

```cpp
vsi v = s - (vsi){};
```
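To show the trick in isolation, here is a minimal sketch for a fixed 16-byte vector. The `broadcast` wrapper is a hypothetical helper, not something from the OP's code, and the typedef follows the same GCC/Clang split as the full listing.

```cpp
#include <cstdint>

// Four 32-bit lanes in a 16-byte vector, declared as in the full listing.
#if defined(__clang__)
typedef int32_t vsi __attribute__ ((ext_vector_type(4)));
#else
typedef int32_t vsi __attribute__ ((vector_size(16)));
#endif

// Broadcast a scalar to every lane: (vsi){} is the zero vector, and
// scalar - vector is evaluated lane by lane with the scalar promoted.
static vsi broadcast(int32_t s) {
    return s - (vsi){};
}
```

Calling `broadcast(7)` should give a vector whose four lanes all hold 7.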

2. An any() function, as in OpenCL or R.

The best I came up with is a generic function

```cpp
static bool any(vli const & x) {
    for(int i=0; i<VLI_SIZE; i++) if(x[i]) return true;
    return false;
}
```

Clang generates relatively efficient code for this using the ptest instruction (though not for AVX512); GCC does not.
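The reason any() takes an integer vector is that a vector comparison yields a mask with -1 in every lane where the comparison holds and 0 elsewhere. Here is a small sketch of that for a 16-byte vector; `any_below` is a hypothetical helper I added for illustration, and the explicit cast to `vli` is only there to be safe about the exact integer type the compiler picks for the mask.

```cpp
#include <cstdint>

// Two 64-bit lanes in a 16-byte vector.
#if defined(__clang__)
typedef int64_t vli __attribute__ ((ext_vector_type(2)));
typedef double  vdf __attribute__ ((ext_vector_type(2)));
#else
typedef int64_t vli __attribute__ ((vector_size(16)));
typedef double  vdf __attribute__ ((vector_size(16)));
#endif
static const int VLI_SIZE = 2;

static bool any(vli const & x) {
    for (int i = 0; i < VLI_SIZE; i++) if (x[i]) return true;
    return false;
}

// Hypothetical helper: true if at least one lane of v is below limit.
static bool any_below(vdf v, double limit) {
    // -1 in lanes where the comparison holds, 0 elsewhere.
    vli mask = (vli)(v < limit);
    return any(mask);
}
```

In the main loop the same kind of mask both decides whether to keep iterating and is subtracted from the counters to increment only the active lanes.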

3. Compression

The calculations are done in 64-bit doubles, but the result is written out as 32-bit integers. The iteration counts are therefore accumulated in two vectors of 64-bit integers, which are then compressed into one vector of 32-bit integers. I came up with a generic solution that Clang handles well:

```cpp
static vsi compress(vli const & lo, vli const & hi) {
    vsi lo2 = (vsi)lo, hi2 = (vsi)hi, z;
    for(int i=0; i<VLI_SIZE; i++) z[i+0*VLI_SIZE] = lo2[2*i];
    for(int i=0; i<VLI_SIZE; i++) z[i+1*VLI_SIZE] = hi2[2*i];
    return z;
}
```

The following solution works better for GCC but is no better for Clang. Since this function is not critical, I just use the generic version.

```cpp
static vsi compress(vli const & low, vli const & high) {
#if defined(__clang__)
    return __builtin_shufflevector((vsi)low, (vsi)high, MASK);
#else
    return __builtin_shuffle((vsi)low, (vsi)high, (vsi){MASK});
#endif
}
```
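The answer does not spell out MASK. As an illustration only, here is what I would expect it to look like for 16-byte vectors on a little-endian target, where the even 32-bit lanes hold the low halves of the 64-bit values. The name `compress16` and the index list `0, 2, 4, 6` are my assumptions, not taken from the original code.

```cpp
#include <cstdint>

// 16-byte vectors: four 32-bit lanes or two 64-bit lanes.
#if defined(__clang__)
typedef int32_t vsi __attribute__ ((ext_vector_type(4)));
typedef int64_t vli __attribute__ ((ext_vector_type(2)));
#else
typedef int32_t vsi __attribute__ ((vector_size(16)));
typedef int64_t vli __attribute__ ((vector_size(16)));
#endif

// Assumed mask for the 16-byte, little-endian case: indices 0..3 address
// the 32-bit lanes of low, 4..7 those of high, and the even indices are
// the low halves of the 64-bit values.
static vsi compress16(vli const & low, vli const & high) {
#if defined(__clang__)
    return __builtin_shufflevector((vsi)low, (vsi)high, 0, 2, 4, 6);
#else
    return __builtin_shuffle((vsi)low, (vsi)high, (vsi){0, 2, 4, 6});
#endif
}
```

For wider vectors the index list would grow in the same pattern (all even indices of `low`, then all even indices of `high`), which is presumably why the original hides it behind a macro.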

These definitions don't rely on anything x86-specific, and the code below also compiles for ARM processors with GCC and Clang.

Now that these are defined, here is the code:

```cpp
#include <string.h>
#include <inttypes.h>
#include <Rcpp.h>

using namespace Rcpp;

#ifdef _OPENMP
#include <omp.h>
#endif
// [[Rcpp::plugins(openmp)]]
// [[Rcpp::plugins(cpp14)]]

// Vector width in bytes, chosen from the instruction set the compiler targets.
#if defined ( __AVX512F__ ) || defined ( __AVX512__ )
static const int SIMD_SIZE = 64;
#elif defined ( __AVX2__ )
static const int SIMD_SIZE = 32;
#else
static const int SIMD_SIZE = 16;
#endif

// Number of lanes per vector for each element type.
static const int VSI_SIZE = SIMD_SIZE/sizeof(int32_t);
static const int VLI_SIZE = SIMD_SIZE/sizeof(int64_t);
static const int VDF_SIZE = SIMD_SIZE/sizeof(double);

#if defined(__clang__)
typedef int32_t vsi __attribute__ ((ext_vector_type(VSI_SIZE)));
typedef int64_t vli __attribute__ ((ext_vector_type(VLI_SIZE)));
typedef double  vdf __attribute__ ((ext_vector_type(VDF_SIZE)));
#else
typedef int32_t vsi __attribute__ ((vector_size (SIMD_SIZE)));
typedef int64_t vli __attribute__ ((vector_size (SIMD_SIZE)));
typedef double  vdf __attribute__ ((vector_size (SIMD_SIZE)));
#endif

static bool any(vli const & x) {
    for(int i=0; i<VLI_SIZE; i++) if(x[i]) return true;
    return false;
}

static vsi compress(vli const & lo, vli const & hi) {
    vsi lo2 = (vsi)lo, hi2 = (vsi)hi, z;
    for(int i=0; i<VLI_SIZE; i++) z[i+0*VLI_SIZE] = lo2[2*i];
    for(int i=0; i<VLI_SIZE; i++) z[i+1*VLI_SIZE] = hi2[2*i];
    return z;
}

// [[Rcpp::export]]
IntegerVector frac(double x_min, double x_max, double y_min, double y_max, int res_x, int res_y, int nb_iter) {
    IntegerVector out(res_x*res_y);

    // Broadcast the scalar parameters to vectors.
    vdf x_minv = x_min - (vdf){}, y_minv = y_min - (vdf){};
    vdf x_stepv = (x_max - x_min)/res_x - (vdf){}, y_stepv = (y_max - y_min)/res_y - (vdf){};

    // Lane offsets 0, 1, ..., VDF_SIZE-1, loaded from an aligned array.
    double a[VDF_SIZE] __attribute__ ((aligned(SIMD_SIZE)));
    for(int i=0; i<VDF_SIZE; i++) a[i] = 1.0*i;
    vdf vi0 = *(vdf*)a;

    // Each (r, c) pair fills VSI_SIZE consecutive pixels of row r.
    // Columns past the last full block of VSI_SIZE are not computed.
    #pragma omp parallel for schedule(dynamic) collapse(2)
    for (int r = 0; r < res_y; r++) {
        for (int c = 0; c < res_x/(VSI_SIZE); c++) {
            // Per-lane iteration counts for the two halves of the block.
            vli nv[2] = {0 - (vli){}, 0 - (vli){}};
            for(int j=0; j<2; j++) {
                // Column indices handled by this half.
                vdf c2 = 1.0*VDF_SIZE*(2*c+j) + vi0;
                vdf zx = 0.0 - (vdf){}, zy = 0.0 - (vdf){}, new_zx;
                vdf cx = x_minv + c2*x_stepv, cy = y_minv + r*y_stepv;
                vli t = -1 - (vli){};
                // t is -1 in lanes where |z|^2 < 4 and 0 elsewhere, so
                // nv[j] -= t adds 1 to each lane that is still iterating.
                for (int n = 0; any(t = zx*zx + zy*zy < 4.0) && n < nb_iter; n++, nv[j] -= t) {
                    new_zx = zx*zx - zy*zy + cx;
                    zy = 2.0*zx*zy + cy;
                    zx = new_zx;
                }
            }
            // Narrow the two 64-bit count vectors to one 32-bit vector and store it.
            vsi sp = compress(nv[0], nv[1]);
            memcpy(&out[r*res_x + VSI_SIZE*c], (int*)&sp, SIMD_SIZE);
        }
    }
    return out;
}
```
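To make the masked counter easier to follow, here is a scalar sketch of what each lane of the vector loop computes. `escape_count` is a name I made up for illustration. It should give the same counts as the corresponding lane, up to floating-point differences such as the contraction allowed by -ffp-contract=fast.

```cpp
// Scalar reference for a single point c = cx + i*cy: the number of
// iterations for which |z|^2 < 4 held, capped at nb_iter.
static int escape_count(double cx, double cy, int nb_iter) {
    double zx = 0.0, zy = 0.0;
    int n = 0;
    while (n < nb_iter && zx*zx + zy*zy < 4.0) {
        double new_zx = zx*zx - zy*zy + cx;
        zy = 2.0*zx*zy + cy;
        zx = new_zx;
        n++;
    }
    return n;
}
```

The vector version runs this for all lanes at once and keeps going until every lane has left the radius or nb_iter is reached. Lanes that have already escaped contribute 0 to the mask, so their counters stop growing while the others continue.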

The R code is almost the same as the OP's code

```r
library(Rcpp)
sourceCpp("frac.cpp", verbose=TRUE, rebuild=TRUE)

xlims=c(-0.74877,-0.74872);
ylims=c(0.065053,0.065103);
x_res=y_res=1080L; nb_iter=100000L;

t = system.time(m <- frac(xlims[[1]], xlims[[2]], ylims[[1]], ylims[[2]], x_res, y_res, nb_iter))
print(t)

m2 = matrix(m, ncol = x_res)

rainbow = c(
  rgb(0.47, 0.11, 0.53),
  rgb(0.27, 0.18, 0.73),
  rgb(0.25, 0.39, 0.81),
  rgb(0.30, 0.57, 0.75),
  rgb(0.39, 0.67, 0.60),
  rgb(0.51, 0.73, 0.44),
  rgb(0.67, 0.74, 0.32),
  rgb(0.81, 0.71, 0.26),
  rgb(0.89, 0.60, 0.22),
  rgb(0.89, 0.39, 0.18),
  rgb(0.86, 0.13, 0.13)
)

cols = c(colorRampPalette(rainbow)(100),
         rev(colorRampPalette(rainbow)(100)),"black") # palette

par(mar = c(0, 0, 0, 0))
image(m2^(1/7), col=cols, asp=diff(ylims)/diff(xlims), axes=F, useRaster=T)
```

To compile with GCC or Clang, change the file ~/.R/Makevars to

```make
CXXFLAGS= -Wall -std=c++14 -O3 -march=native -ffp-contract=fast -fopenmp
#uncomment the following two lines for clang
#CXX=clang-5.0
#LDFLAGS= -lomp
```

If you are having trouble getting OpenMP to work for Clang see this.

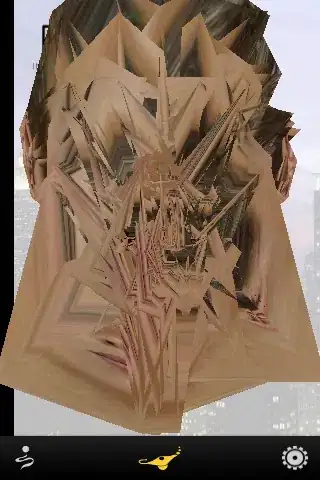

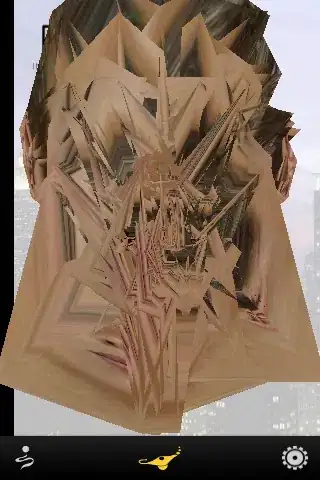

The code produces more or less the same image.