I just started exploring Apache Spark (following A Gentle Introduction to Apache Spark) on Windows 10, using pyspark. I got to the chapter on Structured Streaming, and I'm having trouble with cmd: whenever I start a stream, the cmd window becomes unusable because Spark keeps "typing" output over the prompt, so anything I type quickly disappears.

My code (taken directly from the book):

    from pyspark.sql.functions import window, column, desc, col

    staticDataFrame = spark.read.format("csv")\
        .option("header", "true")\
        .option("inferSchema", "true")\
        .load("./data/retail-data/by-day/*.csv")

    staticSchema = staticDataFrame.schema

    streamingDataFrame = spark.readStream\
        .schema(staticSchema)\
        .option("maxFilesPerTrigger", 1)\
        .format("csv")\
        .option("header", "true")\
        .load("./data/retail-data/by-day/*.csv")

    purchaseByCustomerPerHour = streamingDataFrame\
        .selectExpr(
            "CustomerId",
            "(UnitPrice * Quantity) as total_cost",
            "InvoiceDate")\
        .groupBy(
            col("CustomerId"), window(col("InvoiceDate"), "1 day"))\
        .sum("total_cost")

    purchaseByCustomerPerHour.writeStream\
        .format("memory")\
        .option('checkpointLocation','F:/Spark/sparktmp')\
        .queryName("customer_purchases")\
        .outputMode("complete")\
        .start()

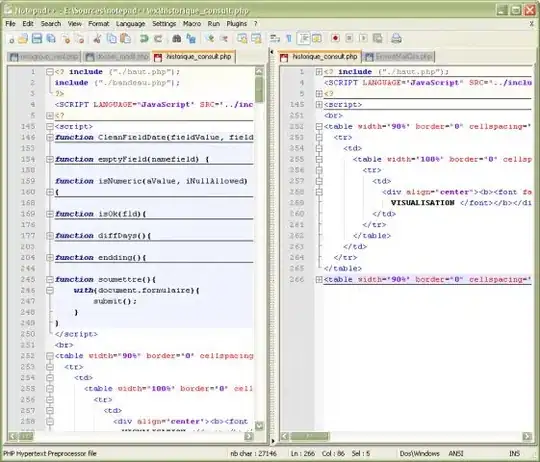

The issue I'm talking about:

The caret should be on the line where [Stage 6:======>] is, so if I want to query the stream (as the book suggests), I can't. I also can't just open a second pyspark shell, since that would be a different Spark session. I'm also not sure whether the streaming job should start over once it has exhausted all the input files (which it does), but I guess that's a topic for a different question.
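
For reference, this is roughly the kind of query I'd like to be able to type while the stream is running. It is only a sketch: `customer_purchases` is the `queryName` from my code above, the SQL is my recollection of what the book suggests, and the helper name `show_top_purchases` is made up for illustration.

```python
# Sketch: query the in-memory table that the stream writes to.
# "customer_purchases" is the queryName used in the writeStream call;
# show_top_purchases is a hypothetical helper, not part of Spark.
def show_top_purchases(spark, n=5):
    """Print the n rows with the highest summed total_cost."""
    query = """
        SELECT *
        FROM customer_purchases
        ORDER BY `sum(total_cost)` DESC
    """
    spark.sql(query).show(n)

# In the pyspark shell, where `spark` already exists:
# show_top_purchases(spark)
```

With the progress output constantly overwriting the prompt, I can't reliably type even a short call like this.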

Let me know if I should provide more information. Thank you in advance!