We know that a convolution layer in a CNN uses filters, and that different filters look for different information in the input image.

But say that, for SSD, we have a prototxt file that specifies a convolution layer as follows:

    layer {
      name: "conv2_1"
      type: "Convolution"
      bottom: "pool1"
      top: "conv2_1"
      param {
        lr_mult: 1.0
        decay_mult: 1.0
      }
      param {
        lr_mult: 2.0
        decay_mult: 0.0
      }
      convolution_param {
        num_output: 128
        pad: 1
        kernel_size: 3
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
          value: 0.0
        }
      }
    }

The convolution layers in different networks (GoogleNet, AlexNet, VGG, etc.) all look more or less like this. Looking only at this definition, how can I tell which information the filters in this convolution layer try to extract from the input image?

EDIT: Let me clarify my question. Here are two convolution layers from the SSD prototxt file:

    layer {
      name: "conv1_1"
      type: "Convolution"
      bottom: "data"
      top: "conv1_1"
      param {
        lr_mult: 1.0
        decay_mult: 1.0
      }
      param {
        lr_mult: 2.0
        decay_mult: 0.0
      }
      convolution_param {
        num_output: 64
        pad: 1
        kernel_size: 3
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
          value: 0.0
        }
      }
    }

    layer {
      name: "conv2_1"
      type: "Convolution"
      bottom: "pool1"
      top: "conv2_1"
      param {
        lr_mult: 1.0
        decay_mult: 1.0
      }
      param {
        lr_mult: 2.0
        decay_mult: 0.0
      }
      convolution_param {
        num_output: 128
        pad: 1
        kernel_size: 3
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
          value: 0.0
        }
      }
    }
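As far as I can tell, the only things these definitions pin down are shapes and sizes. Here is a rough sketch of what I mean in plain Python. The helper names are my own (not Caffe API), and I am assuming a stride of 1 (Caffe's default when `stride` is not given), a 3-channel 300x300 input for `data`, and 64 channels coming out of `pool1`:

```python
def conv_weight_shape(num_output, in_channels, kernel_size):
    """Shape of the weight blob: (num_output, in_channels, kH, kW)."""
    return (num_output, in_channels, kernel_size, kernel_size)


def param_count(num_output, in_channels, kernel_size):
    """Number of learnable values: all weights plus one bias per filter."""
    weights = num_output * in_channels * kernel_size * kernel_size
    biases = num_output
    return weights + biases


def conv_output_size(in_size, kernel_size, pad, stride=1):
    """Spatial size of the output along one dimension."""
    return (in_size + 2 * pad - kernel_size) // stride + 1


# in_channels values are assumptions, they are not written in the prototxt
conv1_1 = dict(num_output=64, in_channels=3, kernel_size=3)
conv2_1 = dict(num_output=128, in_channels=64, kernel_size=3)

for name, cfg in (("conv1_1", conv1_1), ("conv2_1", conv2_1)):
    print(name, conv_weight_shape(**cfg), param_count(**cfg))

# With kernel_size 3, pad 1 and stride 1 the spatial size is unchanged
print(conv_output_size(300, kernel_size=3, pad=1))
```

So the prototxt gives me the number of filters and their size, but nothing I can read as "what each filter looks for".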

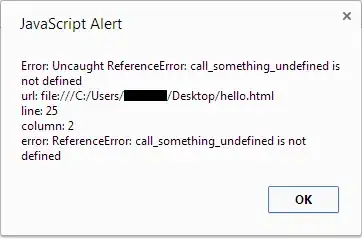

Then I printed their outputs:

Data

The conv1_1 and conv2_1 output images are here and here.

So my question is: how do these two conv layers produce different outputs when, apart from the names, the input blob, and num_output, their definitions in the prototxt file are the same?