I can't understand why my dataframe is only on one node.

I have a Spark standalone cluster of 14 machines, each with 4 physical CPUs.

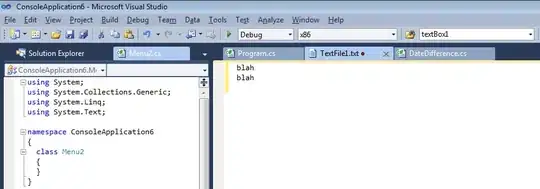

I connect through a notebook and create my SparkContext:

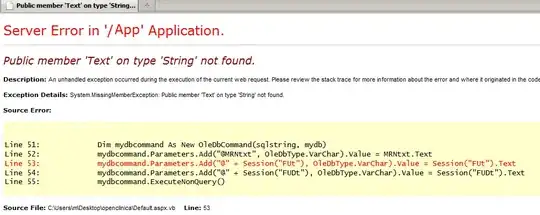

I expect a parallelism of 8 partitions, but when I create a DataFrame I get only one partition:

What am I missing?

Thanks to the answer from user8371915, I repartitioned my DataFrame (I was reading a compressed file (.csv.gz), which I understand is not splittable).

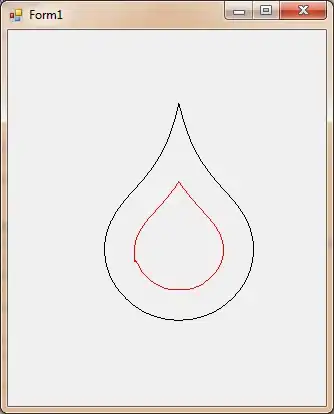

But when I run a `count` on it, I see that it is executed on only one executor:

Here it is executor no. 1, even though the file is 700 MB and is stored in 6 blocks on HDFS.

As far as I understand, the computation should be spread over 10 cores on 5 nodes... but everything is computed on a single node :-(
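To make the block arithmetic explicit, here is a minimal sketch under a simplified model (an assumption, not Spark's exact planning logic): a splittable file gets roughly one read task per HDFS block, while a gzip file can only be decompressed from the start, so it gets a single read task. The 128 MB block size and the `input_splits` helper are hypothetical illustrations, chosen because 700 MB at 128 MB per block gives the 6 blocks observed above.

```python
import math

# Assumed HDFS block size (the Hadoop 2+ default); not taken from the cluster config.
HDFS_BLOCK_MB = 128


def input_splits(file_size_mb: float, block_mb: int = HDFS_BLOCK_MB,
                 splittable: bool = True) -> int:
    """Hypothetical helper: number of read tasks for one file under a simplified model.

    A splittable file is read as one split per block; a non-splittable file
    (e.g. .csv.gz) must be read sequentially, so it is always a single split.
    """
    if not splittable:
        return 1
    return math.ceil(file_size_mb / block_mb)


if __name__ == "__main__":
    print("plain csv:", input_splits(700))                   # one split per block
    print("csv.gz:   ", input_splits(700, splittable=False))  # always a single split
```

If this model holds, `repartition(n)` only changes the number of tasks in the stages after the shuffle it introduces; the stage that reads and decompresses the .csv.gz file would still run as one task on one executor.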
