If I understand your question correctly:

- You have an image view containing an image that may have been scaled down (or even scaled up) using `UIViewContentModeScaleAspectFit`.
- You have a bezier path whose points are in the geometry (coordinate system) of that image view.

And now you want to create a copy of the image, at its original resolution, masked by the bezier path.

We can think of the image as having its own geometry, with the origin at the top left corner of the image and one unit along each axis being one point. So what we need to do is:

1. Create a graphics renderer big enough to draw the image into without scaling. The geometry of this renderer is the image's geometry.
2. Transform the bezier path from the view geometry to the renderer geometry.
3. Apply the transformed path to the renderer's clip region.
4. Draw the image (untransformed) into the renderer.
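Steps 3 and 4 together keep only the image pixels that fall inside the path. To picture that at the pixel level, here is a plain-C sketch (C also compiles as Objective-C). It is a swapped-in CPU technique, not what Core Graphics does internally: it clears every pixel of a premultiplied RGBA8 buffer whose center lies outside a polygon, using the nonzero winding rule that `CGContextClip` applies. Core Graphics also anti-aliases the clip edge, which this sketch does not. It assumes the polygon is already in pixel coordinates of the full-resolution image (image points multiplied by `image.scale`), and the names `Pt`, `winding_number`, and `mask_rgba` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical point type; coordinates are pixels of the full-resolution image. */
typedef struct { double x, y; } Pt;

/* Twice the signed area of triangle (a, b, p): its sign tells which side of
 * edge a->b the point p is on (0 means p is on the line). */
static double side(Pt a, Pt b, Pt p) {
    return (b.x - a.x) * (p.y - a.y) - (p.x - a.x) * (b.y - a.y);
}

/* Winding number of the implicitly closed polygon poly (n vertices) around p. */
int winding_number(const Pt *poly, size_t n, Pt p) {
    int wn = 0;
    for (size_t i = 0; i < n; i++) {
        Pt a = poly[i], b = poly[(i + 1) % n];
        if (a.y <= p.y) {
            /* Edge crosses p's row in one direction with p on its positive side. */
            if (b.y > p.y && side(a, b, p) > 0) wn++;
        } else {
            /* Edge crosses p's row in the other direction with p on its negative side. */
            if (b.y <= p.y && side(a, b, p) < 0) wn--;
        }
    }
    return wn;
}

/* Make transparent every premultiplied RGBA8 pixel whose center is outside the
 * polygon under the nonzero winding rule; pixels inside are left untouched. */
void mask_rgba(uint8_t *pixels, size_t width, size_t height, const Pt *poly, size_t n) {
    assert(n >= 3);
    for (size_t y = 0; y < height; y++) {
        for (size_t x = 0; x < width; x++) {
            Pt center = { (double)x + 0.5, (double)y + 0.5 };
            if (winding_number(poly, n, center) == 0) {
                memset(pixels + 4 * (y * width + x), 0, 4);
            }
        }
    }
}
```

Because the loop visits every pixel of the full-resolution buffer, the mask edge is as fine as the source image allows, not as coarse as the on-screen view.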

Step 2 is the hard one, because we have to come up with the correct `CGAffineTransform`. In an aspect-fit scenario, the transform needs to not only scale the path, but possibly also translate it along either the x axis or the y axis (but not both). But let's be more general and support the other `UIViewContentMode` settings too. Here's a category that lets you ask a `UIImageView` for the transform that converts points in the view's geometry to points in the image's geometry:

```objc
#import <UIKit/UIKit.h>

@interface UIImageView (ImageGeometry)
- (CGAffineTransform)imageGeometryTransform;
@end

@implementation UIImageView (ImageGeometry)

/**
 * Return a transform that converts points in my geometry to points in the
 * image's geometry. The origin of the image's geometry is at its upper
 * left corner, and one unit along each axis is one point in the image.
 */
- (CGAffineTransform)imageGeometryTransform {
    CGRect viewBounds = self.bounds;
    CGSize viewSize = viewBounds.size;
    CGSize imageSize = self.image.size;

    // Image points per view point on each axis, as if the image were stretched to fill the view.
    CGFloat xScale = imageSize.width / viewSize.width;
    CGFloat yScale = imageSize.height / viewSize.height;

    // Where the image sits in the view on each axis: 0 = left/top, 0.5 = centered, 1 = right/bottom.
    CGFloat tx, ty;
    switch (self.contentMode) {
        case UIViewContentModeScaleToFill: tx = 0; ty = 0; break;
        case UIViewContentModeScaleAspectFit:
            // The larger scale wins so the whole image fits; center along the other axis.
            if (xScale > yScale) { tx = 0; ty = 0.5; yScale = xScale; }
            else if (xScale < yScale) { tx = 0.5; ty = 0; xScale = yScale; }
            else { tx = 0; ty = 0; }
            break;
        case UIViewContentModeScaleAspectFill:
            // The smaller scale wins so the image covers the view; center the overflow.
            if (xScale < yScale) { tx = 0; ty = 0.5; yScale = xScale; }
            else if (xScale > yScale) { tx = 0.5; ty = 0; xScale = yScale; }
            else { tx = 0; ty = 0; }
            break;
        case UIViewContentModeCenter: tx = 0.5; ty = 0.5; xScale = yScale = 1; break;
        case UIViewContentModeTop: tx = 0.5; ty = 0; xScale = yScale = 1; break;
        case UIViewContentModeBottom: tx = 0.5; ty = 1; xScale = yScale = 1; break;
        case UIViewContentModeLeft: tx = 0; ty = 0.5; xScale = yScale = 1; break;
        case UIViewContentModeRight: tx = 1; ty = 0.5; xScale = yScale = 1; break;
        case UIViewContentModeTopLeft: tx = 0; ty = 0; xScale = yScale = 1; break;
        case UIViewContentModeTopRight: tx = 1; ty = 0; xScale = yScale = 1; break;
        case UIViewContentModeBottomLeft: tx = 0; ty = 1; xScale = yScale = 1; break;
        case UIViewContentModeBottomRight: tx = 1; ty = 1; xScale = yScale = 1; break;
        default: return CGAffineTransformIdentity; // Mode not supported by UIImageView.
    }

    // Turn each alignment fraction into an offset in image points: a share of the
    // leftover (or overflowing) space, minus the scaled bounds origin.
    tx = tx * (imageSize.width - xScale * viewSize.width) - xScale * viewBounds.origin.x;
    ty = ty * (imageSize.height - yScale * viewSize.height) - yScale * viewBounds.origin.y;

    // CGAffineTransformScale applies the scale before the existing translation,
    // so a view point p maps to (xScale * p.x + tx, yScale * p.y + ty).
    CGAffineTransform transform = CGAffineTransformMakeTranslation(tx, ty);
    transform = CGAffineTransformScale(transform, xScale, yScale);
    return transform;
}

@end
```

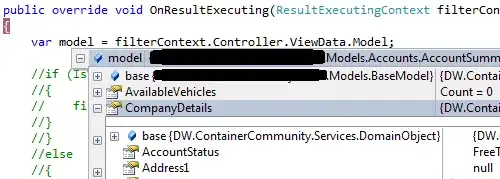
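The arithmetic at the end of `imageGeometryTransform` is easy to check outside of UIKit. Below is a plain-C sketch (C also compiles as Objective-C) of the aspect-fit scale choice and of how the translation and scale combine. The `Pt` and `Affine` types and all four functions are hypothetical stand-ins that reproduce the documented arithmetic of `CGAffineTransformMakeTranslation`, `CGAffineTransformScale`, and `CGPointApplyAffineTransform`; they are not Apple's code. The sketch subtracts the scaled bounds origin, so a view whose bounds origin is not (0, 0) still maps its visible top-left corner correctly.

```c
#include <assert.h>

/* Hypothetical plain-C stand-ins for CGPoint/CGSize and CGAffineTransform. */
typedef struct { double x, y; } Pt;
typedef struct { double a, b, c, d, tx, ty; } Affine;

/* Aspect fit: the larger ratio wins, so the whole image fits in the view. */
double aspect_fit_scale(Pt view_size, Pt image_size) {
    assert(view_size.x > 0 && view_size.y > 0);
    double xs = image_size.x / view_size.x, ys = image_size.y / view_size.y;
    return xs > ys ? xs : ys;
}

/* Same arithmetic as CGAffineTransformScale(t, sx, sy): the scale acts on a
 * point before t does, so t's translation is left unscaled. */
Affine affine_scale(Affine t, double sx, double sy) {
    Affine r = { t.a * sx, t.b * sx, t.c * sy, t.d * sy, t.tx, t.ty };
    return r;
}

/* Same arithmetic as CGPointApplyAffineTransform. */
Pt affine_apply(Affine t, Pt p) {
    Pt q = { t.a * p.x + t.c * p.y + t.tx, t.b * p.x + t.d * p.y + t.ty };
    return q;
}

/* (ax, ay): alignment fractions (0 = left/top, 0.5 = centered, 1 = right/bottom).
 * (sx, sy): image points per view point, as chosen by the content mode. */
Affine image_geometry_transform(Pt bounds_origin, Pt view_size, Pt image_size,
                                double ax, double ay, double sx, double sy) {
    assert(sx > 0 && sy > 0);
    double tx = ax * (image_size.x - sx * view_size.x) - sx * bounds_origin.x;
    double ty = ay * (image_size.y - sy * view_size.y) - sy * bounds_origin.y;
    Affine translation = { 1, 0, 0, 1, tx, ty }; /* like CGAffineTransformMakeTranslation */
    return affine_scale(translation, sx, sy);
}
```

For a 400×400 view showing a 4000×3000 image aspect-fit, the scale is 10 image points per view point and the image is drawn 400×300 with 50-point bands above and below, so view point (0, 50) maps to image point (0, 0) and the horizontal offset comes out as 0. Because the scale is applied before the translation, the offsets are used as-is, in image points.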

Armed with this, we can write the code that masks the image. In my test app, I have a subclass of UIImageView named PathEditingView that handles the bezier path editing. So my view controller creates the masked image like this:

```objc
- (UIImage *)maskedImage {
    UIImage *image = self.pathEditingView.image;

    // Render at the image's own size and scale, so nothing is resampled.
    UIGraphicsImageRendererFormat *format = [[UIGraphicsImageRendererFormat alloc] init];
    format.scale = image.scale;
    format.prefersExtendedRange = image.imageRendererFormat.prefersExtendedRange;
    format.opaque = NO; // Pixels outside the path must stay transparent.

    UIGraphicsImageRenderer *renderer = [[UIGraphicsImageRenderer alloc] initWithSize:image.size format:format];
    return [renderer imageWithActions:^(UIGraphicsImageRendererContext * _Nonnull rendererContext) {
        // Convert the path from the view's geometry to the image's geometry.
        UIBezierPath *path = [self.pathEditingView.path copy];
        [path applyTransform:self.pathEditingView.imageGeometryTransform];

        // Clip to the path, then draw the image untransformed.
        CGContextRef gc = rendererContext.CGContext;
        CGContextAddPath(gc, path.CGPath);
        CGContextClip(gc);
        [image drawAtPoint:CGPointZero];
    }];
}
```

And it looks like this:

Of course it's hard to tell that the output image is full-resolution. Let's fix that by cropping the output image to the bounding box of the bezier path:

```objc
- (UIImage *)maskedAndCroppedImage {
    UIImage *image = self.pathEditingView.image;

    // Convert the path to the image's geometry and find the rectangle it occupies there.
    UIBezierPath *path = [self.pathEditingView.path copy];
    [path applyTransform:self.pathEditingView.imageGeometryTransform];
    CGRect pathBounds = CGPathGetPathBoundingBox(path.CGPath);

    UIGraphicsImageRendererFormat *format = [[UIGraphicsImageRendererFormat alloc] init];
    format.scale = image.scale;
    format.prefersExtendedRange = image.imageRendererFormat.prefersExtendedRange;
    format.opaque = NO;

    // The renderer is only as big as the path's bounding box.
    UIGraphicsImageRenderer *renderer = [[UIGraphicsImageRenderer alloc] initWithSize:pathBounds.size format:format];
    return [renderer imageWithActions:^(UIGraphicsImageRendererContext * _Nonnull rendererContext) {
        CGContextRef gc = rendererContext.CGContext;
        // Shift the image's geometry so the bounding box's origin lands at the renderer's origin.
        CGContextTranslateCTM(gc, -pathBounds.origin.x, -pathBounds.origin.y);
        CGContextAddPath(gc, path.CGPath);
        CGContextClip(gc);
        [image drawAtPoint:CGPointZero];
    }];
}
```
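The cropping step reduces to a bounding box and a shift. This plain-C sketch (C also compiles as Objective-C) assumes the path has straight edges only, i.e. a polygon already in the image's geometry, in which case the vertex bounding box is what `CGPathGetPathBoundingBox` reports; curved segments would also need their extrema. The names `Pt`, `Rect`, `polygon_bounds`, and `translate_to_origin` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for CGPoint and CGRect (origin plus size). */
typedef struct { double x, y; } Pt;
typedef struct { double x, y, w, h; } Rect;

/* Smallest rectangle containing every vertex of a straight-edged path. */
Rect polygon_bounds(const Pt *poly, size_t n) {
    assert(n > 0);
    double min_x = poly[0].x, max_x = poly[0].x;
    double min_y = poly[0].y, max_y = poly[0].y;
    for (size_t i = 1; i < n; i++) {
        if (poly[i].x < min_x) min_x = poly[i].x;
        if (poly[i].x > max_x) max_x = poly[i].x;
        if (poly[i].y < min_y) min_y = poly[i].y;
        if (poly[i].y > max_y) max_y = poly[i].y;
    }
    Rect r = { min_x, min_y, max_x - min_x, max_y - min_y };
    return r;
}

/* Move every vertex by -bounds.origin: the same shift that
 * CGContextTranslateCTM(gc, -origin.x, -origin.y) applies to everything drawn afterwards. */
void translate_to_origin(Pt *poly, size_t n, Rect bounds) {
    for (size_t i = 0; i < n; i++) {
        poly[i].x -= bounds.x;
        poly[i].y -= bounds.y;
    }
}
```

For a triangle at (1000, 500), (3000, 800), (2000, 2500) in image points, the box is 2000×2000 at (1000, 500) and the shifted vertices are (0, 0), (2000, 300), (1000, 2000). Since the translation moves the image by the same amount as the path, every image point stays under the path point that covered it.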

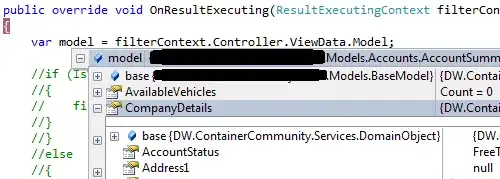

Masking and cropping together look like this:

You can see in this demo that the output image has much more detail than was visible in the input view, because it was generated at the full resolution of the input image.
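To put a number on "much more detail", here is a back-of-the-envelope sketch in plain C (C also compiles as Objective-C). It assumes you know the transform's scale (image points per view point), the image's `scale`, and the display's scale factor; `detail_gain` is a hypothetical helper.

```c
#include <assert.h>

/*
 * Hypothetical helper: output pixels per on-screen pixel, along one axis,
 * when a region is rendered at the image's full resolution.
 *   fit_scale    image points per view point (the transform's scale)
 *   image_scale  the UIImage's scale property
 *   screen_scale the display's scale factor (for example 2 on a 2x screen)
 */
double detail_gain(double fit_scale, double image_scale, double screen_scale) {
    assert(fit_scale > 0 && image_scale > 0 && screen_scale > 0);
    /* One view point covers fit_scale * image_scale image pixels,
     * but only screen_scale screen pixels. */
    return fit_scale * image_scale / screen_scale;
}
```

For a 4000×3000 image with `scale` 1 shown aspect-fit in a 400×400 view on a 2x screen, the fit scale is 10, so `detail_gain(10, 1, 2)` is 5: the output has 5 times as many pixels along each axis (25 times as many in total) as the on-screen region it came from. A result below 1 would mean the view was upscaling the image, and full-resolution output would add no detail.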

Hi, I have a path (shape) and a high-resolution image. The high-res image is displayed AspectFit inside the view on which I draw the path, and I want to mask the image with the path at the full resolution of the image, not at the resolution at which we see the path. The problem: it works perfectly when I don't scale things up for high-resolution masking, but when I do, everything is messed up. The mask gets stretched and the origins don't make sense.
