I also ran into this issue recently and asked a similar question with more details and a more recent approach. It still seems to be an unsolved problem. Several recent research works try to address it with deep learning. Unfortunately, none of them met our expectations. I'm sharing them anyway in case they are helpful to anyone.

1. Scene Text Image Super-Resolution in the Wild

In our case, this may end up being our fallback choice; comparatively, it performs well enough. It's a recent research work (TSRN) that focuses mainly on such cases. The main idea is to introduce super-resolution (SR) techniques as a pre-processing step. This implementation looks by far the most promising. Here is an illustration of their results, turning a blurry image into a clean one.

2. Neural Enhance

From the demonstration in their repo, it appears that it may also have some potential to improve blurry text. However, the author doesn't seem to have maintained the repo for about 4 years.

3. Blind Motion Deblurring with GAN

The attractive part is the Blind Motion Deblurring mechanism in it, named DeblurGAN. It looks very promising.

4. Real-World Super-Resolution via Kernel Estimation and Noise Injection

An interesting aspect of their work is that, unlike other works in the literature, they first design a novel degradation framework for real-world images by estimating various blur kernels as well as real noise distributions. Based on that, they acquire LR images sharing a common domain with real-world images. Then they propose a real-world super-resolution model aiming at better perception. From their article:

However, in my experiments I couldn't get the expected results. I've raised an issue on GitHub and haven't received any response so far.
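To make the degradation idea from item 4 more concrete, here is a minimal sketch of how a synthetic low-resolution image could be produced from a clean one: blur with a kernel, downsample, then inject noise. This is not the authors' implementation. The Gaussian kernel and Gaussian noise are stand-ins I chose for the estimated kernels and real noise patches, and all function names (`gaussian_kernel`, `blur`, `degrade`) are hypothetical.

```python
import numpy as np


def gaussian_kernel(size=7, sigma=1.5):
    """Return a normalized 2-D Gaussian kernel (stand-in for an estimated blur kernel)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def blur(image, kernel):
    """Filter a 2-D grayscale image with a kernel, using edge padding.

    This is a correlation, which equals a convolution for symmetric kernels.
    """
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def degrade(hr, kernel, scale=2, noise_sigma=5.0, rng=None):
    """Blur, downsample by `scale`, then add Gaussian noise (assumed noise model)."""
    rng = np.random.default_rng() if rng is None else rng
    lr = blur(hr.astype(np.float64), kernel)[::scale, ::scale]
    lr = lr + rng.normal(0.0, noise_sigma, size=lr.shape)
    return np.clip(lr, 0, 255)


# Toy "text-like" image: a dark bar on a white background.
hr = np.full((64, 64), 255.0)
hr[24:40, 8:56] = 0.0
lr = degrade(hr, gaussian_kernel(), scale=2, rng=np.random.default_rng(0))
print(hr.shape, "->", lr.shape)
```

Pairs of `hr` and `lr` images generated this way are the kind of training data such a super-resolution model would be fitted on; the real method estimates kernels and noise from actual photos instead of assuming them.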

Convolutional Neural Networks for Direct Text Deblurring

The paper shared by @Ali looks very interesting and the results are extremely good. It's nice that they have shared the pre-trained weights of their model along with Python scripts for easier use. However, they experimented with the Caffe library. I would prefer to convert the model to PyTorch for better control. Below are the provided Python scripts with Caffe imports. Please note, I haven't been able to port it completely so far because of my lack of Caffe knowledge; please correct me if you are familiar with it.

    from __future__ import print_function

    import argparse
    import os
    import sys
    import time

    import caffe
    import cv2
    import numpy as np


    # Some helper functions

    def getCutout(image, x1, y1, x2, y2, border):
        # Cut a tile (plus `border` pixels of context on each side) out of the image.
        # x indexes rows and y indexes columns; cv2 expects (width, height) and (cx, cy).
        assert(x1 >= 0 and y1 >= 0)
        assert(x2 > x1 and y2 > y1)
        assert(border >= 0)
        return cv2.getRectSubPix(image, (y2-y1 + 2*border, x2-x1 + 2*border), (((y2-1)+y1) / 2.0, ((x2-1)+x1) / 2.0))


    def fillRndData(data, net):
        # Build random channels, put the image in channel 0, and load the input blob.
        inputLayer = 'data'
        randomChannels = net.blobs[inputLayer].data.shape[1]
        rndData = np.random.randn(data.shape[0], randomChannels, data.shape[2], data.shape[3]).astype(np.float32) * 0.2
        rndData[:,0:1,:,:] = data
        net.blobs[inputLayer].data[...] = rndData[:,0:1,:,:]


    def mkdirp(directory):
        if not os.path.isdir(directory):
            os.makedirs(directory)


    # The main function starts here

    def main(argv):
        pycaffe_dir = os.path.dirname(__file__)

        parser = argparse.ArgumentParser()
        # Required and optional arguments.
        parser.add_argument(
            "--model_def",
            help="Model definition file.",
            required=True
        )
        parser.add_argument(
            "--pretrained_model",
            help="Trained model weights file.",
            required=True
        )
        parser.add_argument(
            "--out_scale",
            help="Scale of the output image.",
            default=1.0,
            type=float
        )
        parser.add_argument(
            "--output_path",
            help="Output path.",
            default=''
        )
        parser.add_argument(
            "--tile_resolution",
            help="Resolution of processing tile.",
            required=True,
            type=int
        )
        parser.add_argument(
            "--suffix",
            help="Suffix of the output file.",
            default="-deblur",
        )
        parser.add_argument(
            "--gpu",
            action='store_true',
            help="Switch for gpu computation."
        )
        parser.add_argument(
            "--grey_mean",
            action='store_true',
            help="Use grey mean RGB=127. Default is the VGG mean."
        )
        parser.add_argument(
            "--use_mean",
            action='store_true',
            help="Use mean."
        )
        parser.add_argument(
            "--adversarial",
            action='store_true',
            help="Use the adversarial network input path (fillRndData)."
        )
        args = parser.parse_args()

        # The default output path is '', which os.makedirs() rejects.
        if args.output_path:
            mkdirp(args.output_path)

        if hasattr(caffe, 'set_mode_gpu'):
            if args.gpu:
                print('GPU mode', file=sys.stderr)
                caffe.set_mode_gpu()
            net = caffe.Net(args.model_def, args.pretrained_model, caffe.TEST)
        else:
            if args.gpu:
                print('GPU mode', file=sys.stderr)
            net = caffe.Net(args.model_def, args.pretrained_model, gpu=args.gpu)

        inputs = [line.strip() for line in sys.stdin]

        print("Classifying %d inputs." % len(inputs), file=sys.stderr)

        # list(...) keeps this working on Python 3, where keys() is not indexable.
        inputBlob = list(net.blobs.keys())[0]    # name of the input blob
        outputBlob = list(net.blobs.keys())[-1]  # name of the output blob

        print(inputBlob, outputBlob)
        channelCount = net.blobs[inputBlob].data.shape[1]
        net.blobs[inputBlob].reshape(1, channelCount, args.tile_resolution, args.tile_resolution)
        net.reshape()

        # cv2.imread flag: 0 loads greyscale, 1 loads colour (BGR).
        if channelCount == 1 or channelCount > 3:
            color = 0
        else:
            color = 1

        outResolution = net.blobs[outputBlob].data.shape[2]
        inResolution = int(outResolution / args.out_scale)
        # Integer division: the border is later used as a pixel count.
        boundary = (net.blobs[inputBlob].data.shape[2] - inResolution) // 2

        for fileName in inputs:
            img = cv2.imread(fileName, flags=color).astype(np.float32)
            original = np.copy(img)
            img = img.reshape(img.shape[0], img.shape[1], -1)

            # Normalize the input: subtract the mean, then scale by 0.004.
            if args.use_mean:
                if args.grey_mean or channelCount == 1:
                    img -= 127
                else:
                    img[:,:,0] -= 103.939
                    img[:,:,1] -= 116.779
                    img[:,:,2] -= 123.68
            img *= 0.004

            outShape = [int(img.shape[0] * args.out_scale),
                        int(img.shape[1] * args.out_scale),
                        net.blobs[outputBlob].channels]
            imgOut = np.zeros(outShape)

            imageStartTime = time.time()
            # Process the image tile by tile.
            for x, xOut in zip(range(0, img.shape[0], inResolution), range(0, imgOut.shape[0], outResolution)):
                for y, yOut in zip(range(0, img.shape[1], inResolution), range(0, imgOut.shape[1], outResolution)):
                    start = time.time()

                    region = getCutout(img, x, y, x+inResolution, y+inResolution, boundary)
                    region = region.reshape(region.shape[0], region.shape[1], -1)
                    # HWC -> NCHW
                    data = region.transpose([2, 0, 1]).reshape(1, -1, region.shape[0], region.shape[1])

                    if args.adversarial:
                        fillRndData(data, net)
                        out = net.forward()
                    else:
                        out = net.forward_all(data=data)

                    # NCHW -> HWC
                    out = out[outputBlob].reshape(out[outputBlob].shape[1], out[outputBlob].shape[2], out[outputBlob].shape[3]).transpose(1, 2, 0)

                    # Undo the input normalization.
                    if imgOut.shape[2] == 3 or imgOut.shape[2] == 1:
                        out /= 0.004
                        if args.use_mean:
                            if args.grey_mean:
                                out += 127
                            else:
                                out[:,:,0] += 103.939
                                out[:,:,1] += 116.779
                                out[:,:,2] += 123.68

                    if out.shape[0] != outResolution:
                        print("Warning: size of net output is %d px and it is expected to be %d px" % (out.shape[0], outResolution))
                    if out.shape[0] < outResolution:
                        print("Error: size of net output is %d px and it is expected to be %d px" % (out.shape[0], outResolution))
                        sys.exit(1)

                    # Clip the tile at the image boundary and paste it into the output.
                    xRange = min((outResolution, imgOut.shape[0] - xOut))
                    yRange = min((outResolution, imgOut.shape[1] - yOut))
                    imgOut[xOut:xOut+xRange, yOut:yOut+yRange, :] = out[0:xRange, 0:yRange, :]

                    print(".", end="", file=sys.stderr)
                    sys.stdout.flush()

            print(imgOut.min(), imgOut.max())
            print("IMAGE DONE %s" % (time.time() - imageStartTime))

            basename = os.path.basename(fileName)
            name = os.path.join(args.output_path, basename + args.suffix)
            print(name, imgOut.shape)
            cv2.imwrite(name, imgOut)

    if __name__ == '__main__':
        main(sys.argv)

To run the program:

    cat fileListToProcess.txt | python processWholeImage.py \
        --model_def ./BMVC_nets/S14_19_200.deploy \
        --pretrained_model ./BMVC_nets/S14_19_FQ_178000.model \
        --output_path ./out/ --tile_resolution 300 --suffix _out.png --gpu --use_mean

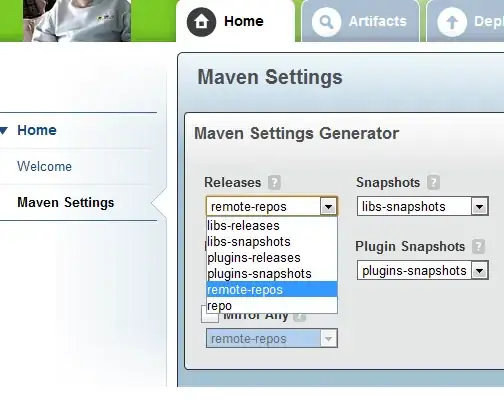
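The tile-by-tile processing in the script can be hard to follow, so here is a small stand-alone sketch of the same idea using only NumPy. Each tile is cut out with some extra border for context, passed through a model that returns only the central region, and pasted into the output. This is my own simplified illustration, not the authors' code: `process_in_tiles` and `identity_model` are hypothetical names, and the identity model merely stands in for the network so the stitching can be checked.

```python
import numpy as np


def process_in_tiles(img, model, tile=32, border=4):
    """Run `model` on padded tiles of a 2-D image and stitch the results.

    `model` is a hypothetical callable that takes a patch of size
    (tile + 2*border) and returns its (tile x tile) central region,
    like a network built from unpadded convolutions.
    """
    h, w = img.shape
    # Extra padding so the last row/column of tiles is full-sized.
    pad_h = (-h) % tile
    pad_w = (-w) % tile
    padded = np.pad(img, ((border, border + pad_h), (border, border + pad_w)), mode="edge")
    out = np.zeros((h + pad_h, w + pad_w), dtype=np.float32)
    for y in range(0, h, tile):
        for x in range(0, w, tile):
            patch = padded[y:y + tile + 2 * border, x:x + tile + 2 * border]
            out[y:y + tile, x:x + tile] = model(patch)
    # Drop the padding that was added to fill the last tiles.
    return out[:h, :w]


def identity_model(patch, border=4):
    """Stand-in for the network: return the patch with its border cropped off."""
    return patch[border:patch.shape[0] - border, border:patch.shape[1] - border]


img = np.random.default_rng(0).random((70, 50)).astype(np.float32)
result = process_in_tiles(img, identity_model, tile=32, border=4)
print(np.array_equal(result, img))  # True: the tiles are stitched back without seams
```

The border matters because a real network sees less context near the edge of each tile; giving it extra pixels on every side and keeping only the centre avoids visible seams between tiles.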

The weight files and the above scripts can be downloaded from here (BMVC_net). However, you may want to convert the model from Caffe to PyTorch. To do that, here is a basic starting point:

Next,

    import torch
    import caffemodel2pytorch

    # BMVC_net: you need to download it from the authors' website, link above
    model = caffemodel2pytorch.Net(
        prototxt = './BMVC_net/S14_19_200.deploy',
        weights = './BMVC_net/S14_19_FQ_178000.model',
        caffe_proto = 'https://raw.githubusercontent.com/BVLC/caffe/master/src/caffe/proto/caffe.proto'
    )
    model.cuda()
    model.eval()
    torch.set_grad_enabled(False)

Run on a demo tensor:

    # make sure to use the right image normalization and channel ordering
    image = torch.Tensor(8, 3, 98, 98).cuda()

    # outputs dict of PyTorch Variables
    # in this example the dict contains the only key "prob"
    #output_dict = model(data = image)

    # you can remove unneeded layers:
    #del model.prob
    #del model.fc8

    # a single input variable is interpreted as an input blob named "data"
    # in this example the dict contains the only key "fc7"
    output_dict = model(image)
    # print(output_dict)
    print(output_dict.keys())

Please note, there are some basic things to consider: the networks expect text at 120-150 DPI, reasonable orientation, and reasonable black and white levels. The networks expect the mean [103.9, 116.8, 123.7] to be subtracted from the inputs. The inputs should then be multiplied by 0.004.
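As a rough sketch of that normalization, the two helpers below mirror what the Caffe script does before and after the forward pass. I'm assuming BGR channel order because the script reads images with `cv2.imread`; the names `preprocess`, `postprocess`, `VGG_MEAN_BGR` and `SCALE` are my own and do not come from the authors' code.

```python
import numpy as np

# Per-channel mean in BGR order (assumption: images come from cv2.imread).
VGG_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)
SCALE = 0.004


def preprocess(img_bgr):
    """HxWx3 uint8 BGR image -> 1x3xHxW float32 array (mean-subtracted, scaled)."""
    x = img_bgr.astype(np.float32) - VGG_MEAN_BGR
    x *= SCALE
    # HWC -> CHW, then add a batch dimension.
    return x.transpose(2, 0, 1)[np.newaxis]


def postprocess(out):
    """1x3xHxW network output -> HxWx3 uint8 BGR image (inverse of preprocess)."""
    y = out[0].transpose(1, 2, 0) / SCALE + VGG_MEAN_BGR
    return np.clip(np.rint(y), 0, 255).astype(np.uint8)


# Round-trip check on a random image: postprocess undoes preprocess.
demo = np.random.default_rng(0).integers(0, 256, size=(5, 7, 3), dtype=np.uint8)
batch = preprocess(demo)
print(batch.shape, np.array_equal(postprocess(batch), demo))
```

For the PyTorch port, the array returned by `preprocess` could presumably be wrapped with `torch.from_numpy` before being passed to the converted model, though I haven't verified that the converted network reproduces the Caffe outputs.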

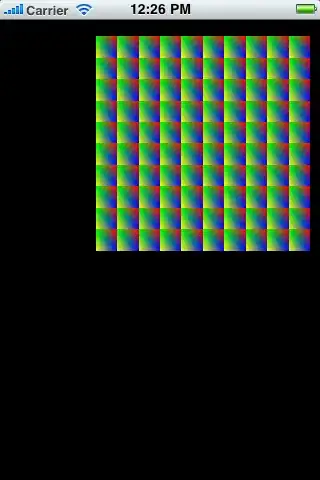

This is a part of the business card and it is one of the frames taken by the camera and without proper focus. I'm looking for a method that could give me an image of better quality, so that image could be recognized by OCR, but also should be quite fast. The image is not blurred too much (I think so) but isn't good for OCR. I tried:

I'm looking for a method that could give me an image of better quality, so that image could be recognized by OCR, but also should be quite fast. The image is not blurred too much (I think so) but isn't good for OCR. I tried: