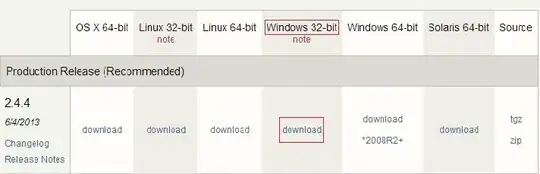

I have the following plot:

The model is built with the following numbers of samples per class:

|          | class1 | class2 |
|----------|-------:|-------:|
| train    |     20 |     20 |
| validate |     21 |     13 |

As I understand it, the plot shows no overfitting. However, because the sample is very small, I'm not confident that the model generalizes well.
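
One way to quantify that worry is to put a confidence interval around the validation accuracy. With 34 validation images, each misclassified image moves accuracy by 1/34, about 3 percentage points. The sketch below uses `binom.test()` from base R's `stats` package, which gives an exact (Clopper-Pearson) interval; the helper name `validation_accuracy_ci` and the count of 30 correct images are hypothetical, not results from this model.

```r
# Hypothetical helper: exact (Clopper-Pearson) confidence interval for
# validation accuracy, given the number of correctly classified images.
validation_accuracy_ci <- function(n_correct, n_total, conf_level = 0.95) {
  test <- binom.test(n_correct, n_total, conf.level = conf_level)
  c(accuracy = n_correct / n_total,
    lower = test$conf.int[1],
    upper = test$conf.int[2])
}

# Hypothetical example: 30 of the 34 validation images classified correctly
validation_accuracy_ci(30, 34)
```

At this sample size the interval spans well over 20 percentage points, so a small gap (or no gap) between the training and validation curves is weak evidence either way.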

Is there any other way to measure overfitting besides the plot above?
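
A common alternative with this few images is stratified k-fold cross-validation: pool the train and validate images, split them into k folds that keep the class ratio, train one model per fold with that fold held out, and look at the spread of the k held-out accuracies. The sketch below covers only the fold bookkeeping in base R; the helper `assign_stratified_folds` and the choice k = 5 are assumptions, and the per-fold keras training (pointing the generators at each fold's images) is left out.

```r
# Hypothetical helper: assign each image to one of k folds, stratified by class,
# so every image is used for validation exactly once across the k runs.
assign_stratified_folds <- function(labels, k = 5, seed = 1) {
  set.seed(seed)
  folds <- integer(length(labels))
  for (cls in unique(labels)) {
    idx <- which(labels == cls)
    # Deal fold ids 1..k round-robin within this class, then shuffle them
    folds[idx] <- sample(rep_len(seq_len(k), length(idx)))
  }
  folds
}

# Pooled counts from the table: class1 = 20 + 21, class2 = 20 + 13
labels <- rep(c("class1", "class2"), times = c(41, 33))
folds <- assign_stratified_folds(labels, k = 5)
table(folds, labels)

# For fold i: train on images with folds != i, evaluate on folds == i.
# The mean and sd of the k held-out accuracies describe how stable the
# generalization estimate is.
```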

This is my complete code:

    library(keras)
    library(tidyverse)

    train_dir <- "data/train/"
    validation_dir <- "data/validate/"

    # Making model ------------------------------------------------------------
    conv_base <- application_vgg16(
      weights = "imagenet",
      include_top = FALSE,
      input_shape = c(150, 150, 3)
    )

    # VGG16 based model -------------------------------------------------------
    # Works better with regularizer
    model <- keras_model_sequential() %>%
      conv_base %>%  # add the pretrained base as a layer (no parentheses)
      layer_flatten() %>%
      layer_dense(units = 256, activation = "relu",
                  kernel_regularizer = regularizer_l1(l = 0.01)) %>%
      layer_dense(units = 1, activation = "sigmoid")

    summary(model)

    # Number of trainable weight tensors before and after freezing the base
    length(model$trainable_weights)
    freeze_weights(conv_base)
    length(model$trainable_weights)

    # Train model -------------------------------------------------------------
    desired_batch_size <- 20

    # Augment the training images only
    train_datagen <- image_data_generator(
      rescale = 1 / 255,
      rotation_range = 40,
      width_shift_range = 0.2,
      height_shift_range = 0.2,
      shear_range = 0.2,
      zoom_range = 0.2,
      horizontal_flip = TRUE,
      fill_mode = "nearest"
    )

    # Note that the validation data shouldn't be augmented!
    test_datagen <- image_data_generator(rescale = 1 / 255)

    train_generator <- flow_images_from_directory(
      train_dir,                        # Target directory
      train_datagen,                    # Data generator
      target_size = c(150, 150),        # Resizes all images to 150 × 150
      shuffle = TRUE,
      seed = 1,
      batch_size = desired_batch_size,  # was 20
      class_mode = "binary"             # binary_crossentropy loss needs binary labels
    )

    validation_generator <- flow_images_from_directory(
      validation_dir,
      test_datagen,
      target_size = c(150, 150),
      shuffle = TRUE,
      seed = 1,
      batch_size = desired_batch_size,
      class_mode = "binary"
    )

    # Fine tuning -------------------------------------------------------------
    # Makes block3_conv1 and every later VGG16 layer trainable again
    # (must happen before compile() to take effect)
    unfreeze_weights(conv_base, from = "block3_conv1")

    # Compile model -----------------------------------------------------------
    model %>% compile(
      loss = "binary_crossentropy",
      optimizer = optimizer_rmsprop(lr = 2e-5),
      metrics = c("accuracy")
    )

    # Evaluate by epochs ------------------------------------------------------
    # Records loss and accuracy for every epoch so they can be plotted (slow)
    history <- model %>% fit_generator(
      train_generator,
      steps_per_epoch = 100,
      epochs = 15,                      # was 50
      validation_data = validation_generator,
      validation_steps = 50
    )

    plot(history)
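
Because `flow_images_from_directory()` loops over its images indefinitely, `steps_per_epoch` and `validation_steps` count batches, not passes over the data. The arithmetic below uses the counts from the table and the values in the code above; it is a plain-R sketch and does not call keras.

```r
# Sample counts from the table and settings from fit_generator() above
n_train <- 20 + 20
n_validate <- 21 + 13
batch_size <- 20
steps_per_epoch <- 100
validation_steps <- 50

# Images drawn per epoch divided by the number of distinct images
train_passes_per_epoch <- steps_per_epoch * batch_size / n_train           # 50
validation_passes_per_epoch <- validation_steps * batch_size / n_validate  # about 29.4

# Steps needed to see every validation image once (the last batch is partial)
validation_steps_one_pass <- ceiling(n_validate / batch_size)              # 2
```

So each epoch shows the model 50 differently augmented versions of every training image, while the un-augmented validation images are scored roughly 29 times each; that repetition makes the validation curve look smooth without adding any information beyond the same 34 images.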