I'm trying to take two photos with the camera and align them using the iOS Vision framework:

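    import CoreImage
    import Vision

    let visionHandler = VNSequenceRequestHandler()

    func align(firstImage: CIImage, secondImage: CIImage) {
        // firstImage is the targeted image; secondImage is the one the handler runs on.
        let request = VNTranslationalImageRegistrationRequest(
            targetedCIImage: firstImage,
            options: [:]
        ) { request, error in
            if let error = error {
                fatalError("Image registration failed: \(error)")
            }

            guard let observation = request.results?.first
                as? VNImageTranslationAlignmentObservation else {
                fatalError("Vision returned no alignment observation")
            }

            // Shift the second image by the translation Vision estimated.
            // (Parameters are constants, so the result goes in a new value.)
            let alignedSecondImage = secondImage.transformed(
                by: observation.alignmentTransform)

            // Add the two frames together so any misalignment shows up as ghosting.
            let compositedImage = firstImage.applyingFilter(
                "CIAdditionCompositing",
                parameters: ["inputBackgroundImage": alignedSecondImage])

            // Save the compositedImage to the photo library.
        }

        try! visionHandler.perform([request], on: secondImage)
    }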

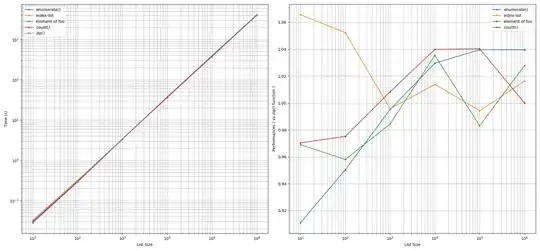

But this produces grossly mis-aligned images:

You can see that I've tried three different kinds of scene: a close-up subject, an indoor scene, and an outdoor scene. I tried more outdoor scenes, and the result was the same in almost every one of them.

I was expecting a slight misalignment at worst, but not such a complete misalignment. What is going wrong?
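To put a number on "grossly misaligned", one option is to log the shift Vision actually estimated. Below is a minimal sketch of a diagnostic helper; `ShiftReport` and `shiftReport(for:imageExtent:)` are hypothetical names, not part of Vision. It assumes the observation's `alignmentTransform` is a pure translation, which is what a `VNImageTranslationAlignmentObservation` represents, so the whole shift lives in `tx` and `ty`.

```swift
import CoreGraphics

/// Hypothetical diagnostic type (not a Vision API): how far a
/// translational alignment transform moves an image.
struct ShiftReport: Equatable {
    let dx: CGFloat                // horizontal shift in pixels
    let dy: CGFloat                // vertical shift in pixels
    let fractionOfWidth: CGFloat   // |dx| relative to the image width
    let fractionOfHeight: CGFloat  // |dy| relative to the image height
}

/// Summarizes a translation-only transform relative to an image's extent.
/// Assumes a == d == 1 and b == c == 0, so only tx / ty carry the shift.
func shiftReport(for transform: CGAffineTransform, imageExtent: CGRect) -> ShiftReport {
    return ShiftReport(
        dx: transform.tx,
        dy: transform.ty,
        fractionOfWidth: abs(transform.tx) / imageExtent.width,
        fractionOfHeight: abs(transform.ty) / imageExtent.height)
}

// Usage inside the completion handler above (sketch):
//   print(shiftReport(for: observation.alignmentTransform,
//                     imageExtent: secondImage.extent))
```

A shift of a few percent of the frame would be plausible for handheld shots; a much larger value would suggest Vision is locking onto the wrong features rather than slightly missing.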

I'm not passing the orientation of the images into the Vision framework, but that shouldn't be a problem for aligning images. It's a problem only for things like face detection, where a rotated face isn't detected as a face. In any case, the output images have the correct orientation, so orientation is not the problem.
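To rule orientation out explicitly, here is a minimal sketch of the same registration with the orientation passed to both the request and the handler. It assumes both frames share a single `CGImagePropertyOrientation` taken from the capture metadata; `alignWithOrientation` and `orientedHandler` are hypothetical names, and the function returns the transform instead of compositing so the two runs can be compared.

```swift
import CoreImage
import ImageIO  // CGImagePropertyOrientation
import Vision

// Hypothetical: a separate handler so this experiment doesn't share state
// with the one used in align(firstImage:secondImage:).
let orientedHandler = VNSequenceRequestHandler()

/// Runs translational registration with the frame orientation supplied.
/// Assumes both frames were captured with the same orientation.
func alignWithOrientation(firstImage: CIImage,
                          secondImage: CIImage,
                          orientation: CGImagePropertyOrientation) throws -> CGAffineTransform? {
    let request = VNTranslationalImageRegistrationRequest(
        targetedCIImage: firstImage,
        orientation: orientation,
        options: [:])

    // perform(_:on:orientation:) is synchronous, so results are ready afterwards.
    try orientedHandler.perform([request], on: secondImage, orientation: orientation)

    let observation = request.results?.first as? VNImageTranslationAlignmentObservation
    return observation?.alignmentTransform
}
```

If the transform comes out essentially the same with and without the orientation, that would confirm orientation isn't the cause.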

My compositing code works correctly; only the Vision framework is a problem. If I remove the calls to the Vision framework and put the phone on a tripod, the composition works perfectly, with no misalignment. So the problem is the Vision framework.

This is on iPhone X.

How do I get the Vision framework to work correctly? Can I tell it to use gyroscope, accelerometer, and compass data to improve the alignment?