I had assumed that the time cost of insertion is log to the number of records. But my test in SQLite 3.22  seems showing it is linear. Note both X/Y are in log scale.

seems showing it is linear. Note both X/Y are in log scale.

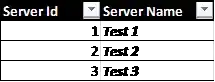

The size(k) column is the number of rows I inserted at each test. Its unit is K. I did 3 tests. Journal and synchronous are off. Locking_mode is exclusive. All operations are included in one transaction.

time1

create table t1 (id primary key, name text);

create index nameIdx on t1(name)

// for i = [1:<size>]

// insert into t1 values(i, "foo"i)

create table t2 (id primary key, value int);

// for i = [1:<size>]

// insert into t2 values(i, i)

time2

create table t1 (id primary key, name text);

// for i = [1:<size>]

// insert into t1 values(i, "foo"i)

create index nameIdx on t1(name)

create table t2 (id primary key, value int);

// for i = [1:<size>]

// insert into t2 values(i, i)

time3

create table t1 (id primary key, name text, value int);

// for i = [1:<size>]

// insert into t1 values(i, "foo"i, 0)

create index nameIdx on t1(name)

// for i = [1:<size>]

// update t1 set value=0 where id=<i>

All the 3 test cases have similar costs. They seem linear. Also I had thought case 3 can be faster because update does not need rebalance tree or add new records. But case3 is a little bit slower...

Are the results expected? Maybe my input data are too small to see the log complexity?