I'm working on a crawler that takes a list of domains and, for every domain, counts the number of URLs found on that site.

I use CrawlSpider for this purpose but there is a problem.

When I start crawling, it seems to send requests to multiple domains, but after some time (about one minute) it ends up crawling only one domain.

Settings:

    CONCURRENT_REQUESTS = 100
    CONCURRENT_REQUESTS_PER_DOMAIN = 3
    REACTOR_THREADPOOL_MAXSIZE = 20

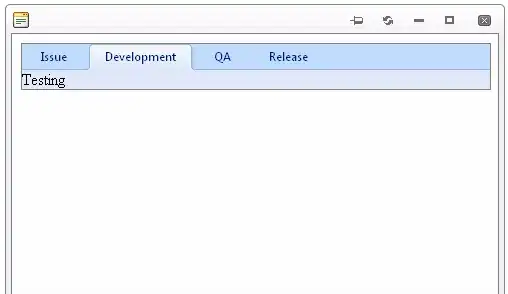

Here you can see how many URLs have been scraped for each domain:

After 7 minutes, as you can see, it focuses only on the first domain and has forgotten about the others.

If Scrapy focuses on just one domain at a time, that naturally slows the whole process down. I would like it to send requests to multiple domains within a short period of time.
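For context, the Scrapy FAQ notes that pending requests are stored in a LIFO queue by default, which gives depth-first order, and it lists settings for switching to breadth-first order. A minimal sketch of those settings follows. Whether they change the behaviour described here is an assumption; I have not verified it with this spider.

```python
# settings.py (sketch, untested with this spider)
# Settings the Scrapy FAQ lists for breadth-first crawl order.

# Positive value: requests at lower depth are processed first.
DEPTH_PRIORITY = 1

# Use FIFO instead of the default LIFO queues for pending requests.
SCHEDULER_DISK_QUEUE = "scrapy.squeues.PickleFifoDiskQueue"
SCHEDULER_MEMORY_QUEUE = "scrapy.squeues.FifoMemoryQueue"
```

These would sit next to the concurrency settings already in use; the concurrency limits themselves stay unchanged.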

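    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule

    # Domain is a model defined elsewhere in my project.


    class MainSpider(CrawlSpider):
        name = 'main_spider'
        allowed_domains = []

        rules = (
            Rule(LinkExtractor(), callback='parse_item', follow=True),
        )

        def start_requests(self):
            # One start request per domain; the domain object travels in meta.
            for d in Domain.objects.all():
                self.allowed_domains.append(d.name)
                yield scrapy.Request(d.main_url, callback=self.parse_item, meta={'domain': d})

        def parse_start_url(self, response):
            # parse_item is a generator, so its result has to be returned.
            return self.parse_item(response)

        def parse_item(self, response):
            # Count this page for its domain.
            d = response.meta['domain']
            d.number_of_urls = d.number_of_urls + 1
            d.save()

            # Follow only links that stay on the same domain.
            extractor = LinkExtractor(allow_domains=d.name)
            links = extractor.extract_links(response)
            for link in links:
                yield scrapy.Request(link.url, callback=self.parse_item, meta={'domain': d})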

It seems to focus only on the first domain until it has scraped all of it.
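To illustrate the kind of behaviour I suspect, here is a toy model of a single-worker scheduler in plain Python. It is not Scrapy code: `crawl_order`, the `.example` domains, and the fixed number of links per page are all hypothetical, and real Scrapy also has concurrency and request priorities. It only shows how a LIFO queue of pending requests can stay on one domain while a FIFO queue interleaves them.

```python
from collections import deque


def crawl_order(seeds, links_per_page, max_depth, lifo):
    """Return the domains in the order a toy scheduler would visit them."""
    # Each pending request is a (domain, depth) pair.
    queue = deque((domain, 0) for domain in seeds)
    visited = []
    while queue:
        # LIFO takes the newest request, FIFO takes the oldest one.
        domain, depth = queue.pop() if lifo else queue.popleft()
        visited.append(domain)
        if depth < max_depth:
            # Pretend every page links to new pages on the same domain.
            queue.extend((domain, depth + 1) for _ in range(links_per_page))
    return visited


seeds = ["a.example", "b.example", "c.example"]
lifo_order = crawl_order(seeds, links_per_page=2, max_depth=2, lifo=True)
fifo_order = crawl_order(seeds, links_per_page=2, max_depth=2, lifo=False)

print(lifo_order[:7])
print(fifo_order[:6])
```

In this toy run the LIFO order spends its first seven visits on a single domain (the one queued last), while the FIFO order visits all three start pages before going deeper.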