The method hashCode() in class Enum is final and defined as super.hashCode(), which means it returns a number based on the address of the instance, which is a random number from a programmer's point of view.

Defining it e.g. as ordinal() ^ getClass().getName().hashCode() would be deterministic across different JVMs. It would even work a bit better, since the least significant bits would "change as much as possible", e.g., for an enum containing up to 16 elements and a HashMap of size 16, there'd be for sure no collisions (sure, using an EnumMap is better, but sometimes not possible, e.g. there's no ConcurrentEnumMap). With the current definition you have no such guarantee, have you?
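A minimal sketch of the idea, not a drop-in replacement: Enum.hashCode() is final, so the proposed hash can only be written as a separate helper. The names StableEnumHash, Hex, stableHash, bucketIndex and distinctBuckets are made up for illustration. The sketch uses getDeclaringClass() rather than getClass(), because constants with their own bodies report an anonymous subclass from getClass(). The bucket computation mirrors what OpenJDK's HashMap does for a power-of-two table, which is an implementation detail and not a documented guarantee.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical helper; Enum.hashCode() is final, so this cannot be an override. */
final class StableEnumHash {

    /** Hypothetical 16-constant enum used only for the demonstration. */
    enum Hex { H0, H1, H2, H3, H4, H5, H6, H7, H8, H9, HA, HB, HC, HD, HE, HF }

    /** The hash proposed above: ordinal XOR hash of the (declaring) class name. */
    static int stableHash(Enum<?> e) {
        return e.ordinal() ^ e.getDeclaringClass().getName().hashCode();
    }

    /** Bucket index the way OpenJDK's HashMap derives it (assumed implementation detail). */
    static int bucketIndex(int hash, int tableSize) {
        int spread = hash ^ (hash >>> 16);   // mix high bits into low bits
        return spread & (tableSize - 1);     // tableSize must be a power of two
    }

    /** Counts how many different buckets the given constants would occupy. */
    static int distinctBuckets(Enum<?>[] constants, int tableSize) {
        Set<Integer> buckets = new HashSet<>();
        for (Enum<?> e : constants) {
            buckets.add(bucketIndex(stableHash(e), tableSize));
        }
        return buckets.size();
    }

    public static void main(String[] args) {
        // 16 constants, 16 buckets: every constant lands in its own bucket.
        System.out.println(distinctBuckets(Hex.values(), 16)); // prints 16
    }
}
```

The ordinals 0..15 differ only in the four lowest bits, and XOR with a per-class constant keeps those bits distinct, which is why no two constants share a bucket here.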

Summary of the answers

Using Object.hashCode() compares to a nicer hashCode like the one above as follows:

- PROS

  - simplicity

- CONS

  - lower speed

  - more collisions (for any size of a HashMap)

  - non-determinism, which propagates to other objects making them unusable for

    - deterministic simulations

    - ETag computation

    - hunting down bugs depending e.g. on a HashSet iteration order
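To illustrate how the non-determinism propagates, here is a small sketch with made-up names (PropagationDemo, Status, identityBasedHash, nameBasedHash). Any composite hash that includes an enum inherits the enum's identity-based hash code, while hashing name() instead relies only on String.hashCode() and List.hashCode(), both of which are specified in the Javadoc.

```java
import java.util.List;

/** Hypothetical demo of a composite hash that does or does not depend on Enum.hashCode(). */
final class PropagationDemo {

    /** Hypothetical enum used only for the demonstration. */
    enum Status { ACTIVE, SUSPENDED }

    /** Includes Status.hashCode(), so the result may differ from one JVM run to the next. */
    static int identityBasedHash(String user, Status status) {
        return List.of(user, status).hashCode();
    }

    /** Uses status.name() instead, so the result is the same in every JVM. */
    static int nameBasedHash(String user, Status status) {
        return List.of(user, status.name()).hashCode();
    }

    public static void main(String[] args) {
        System.out.println(identityBasedHash("alice", Status.ACTIVE)); // varies between runs
        System.out.println(nameBasedHash("alice", Status.ACTIVE));     // stable
    }
}
```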

I'd personally prefer the nicer hashCode, but IMHO no reason weighs much, maybe except for the speed.

UPDATE

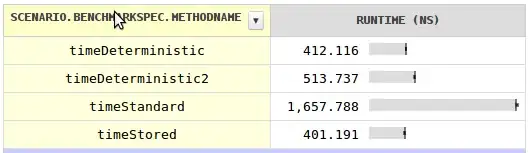

I was curious about the speed and wrote a benchmark with surprising results. For the price of a single field per class you can get a deterministic hash code which is nearly four times faster. Storing the hash code in each instance would be even faster, although negligibly.
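The benchmark code itself is not reproduced here, so the following is only a guess at what the two variants could look like, using a hypothetical enum Planet. Variant 1 keeps one static field per enum class and combines it with the ordinal on every call. Variant 2 stores the finished hash in each constant.

```java
/** Hypothetical enum showing two ways to cache a deterministic hash. */
enum Planet {
    MERCURY, VENUS, EARTH;

    // Variant 1: a single static field per enum class.
    private static final int CLASS_HASH = Planet.class.getName().hashCode();

    // Variant 2: one field per constant. An instance initializer in an enum may not
    // read the non-constant static field above, so the class hash is recomputed here.
    private final int instanceHash = ordinal() ^ Planet.class.getName().hashCode();

    /** Deterministic hash computed from the ordinal and the cached class hash. */
    int hashViaClassField() {
        return ordinal() ^ CLASS_HASH;
    }

    /** Deterministic hash read directly from the per-constant field. */
    int hashViaInstanceField() {
        return instanceHash;
    }
}
```

Both methods return the same value for a given constant. Since Enum.hashCode() is final, a HashMap would still need a wrapper key or a custom map to make use of either of them.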

The explanation why the standard hash code is not much faster is that it can't be the object's address, as objects get moved by the GC.
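A small sketch of the consequence, with a made-up class name IdentityHashDemo: the identity hash code has to stay the same for the lifetime of the object, even if a collection happens in between. Note that System.gc() is only a hint, so this does not prove that the object was actually moved. It only shows the contract that rules out a plain address.

```java
/** Hypothetical demo: the identity hash code must survive garbage collections. */
final class IdentityHashDemo {

    /** Returns true if the identity hash is unchanged after requesting a GC. */
    static boolean stableAcrossGc() {
        Object o = new Object();
        int before = System.identityHashCode(o);
        System.gc();                              // a hint only; the JVM may ignore it
        int after = System.identityHashCode(o);
        return before == after;
    }

    public static void main(String[] args) {
        System.out.println(stableAcrossGc());     // prints true
    }
}
```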

UPDATE 2

There are some strange things going on with the hashCode performance in general. Even once I understand them, there's still the open question of why System.identityHashCode (reading from the object header) is way slower than accessing a normal object field.