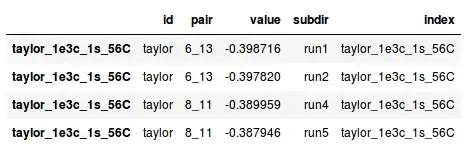

I have a pandas DataFrame containing some values:

| | id | pair | value | subdir |
|---|---|---|---|---|
| taylor_1e3c_1s_56C | taylor | 6_13 | -0.398716 | run1 |
| taylor_1e3c_1s_56C | taylor | 6_13 | -0.397820 | run2 |
| taylor_1e3c_1s_56C | taylor | 6_13 | -0.397310 | run3 |
| taylor_1e3c_1s_56C | taylor | 6_13 | -0.390520 | run4 |
| taylor_1e3c_1s_56C | taylor | 6_13 | -0.377390 | run5 |
| taylor_1e3c_1s_56C | taylor | 8_11 | -0.393604 | run1 |
| taylor_1e3c_1s_56C | taylor | 8_11 | -0.392899 | run2 |
| taylor_1e3c_1s_56C | taylor | 8_11 | -0.392473 | run3 |
| taylor_1e3c_1s_56C | taylor | 8_11 | -0.389959 | run4 |
| taylor_1e3c_1s_56C | taylor | 8_11 | -0.387946 | run5 |
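For anyone who wants to reproduce this, here is a minimal sketch that rebuilds the sample data above. It assumes that the first, unlabelled column is the DataFrame's index, with id, pair, value and subdir as ordinary columns; the variable names are just for illustration.

```python
import pandas as pd

# Assumption: the unlabelled first column is the index, not a regular column.
values = [-0.398716, -0.397820, -0.397310, -0.390520, -0.377390,
          -0.393604, -0.392899, -0.392473, -0.389959, -0.387946]
df = pd.DataFrame(
    {
        "id": ["taylor"] * 10,
        "pair": ["6_13"] * 5 + ["8_11"] * 5,
        "value": values,
        "subdir": [f"run{i}" for i in range(1, 6)] * 2,  # run1..run5 for each pair
    },
    index=["taylor_1e3c_1s_56C"] * 10,
)
print(df)
```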

What I would like to do is group the rows that share the same index, id and pair, compute the mean and the standard deviation of the `value` column for each group, and put the results in a new DataFrame. Since this effectively averages over all the values of `subdir`, that column should be dropped. The output should look something like this:

| | id | pair | value | error |
|---|---|---|---|---|
| taylor_1e3c_1s_56C | taylor | 6_13 | -0.392351 | 0.013213 |
| taylor_1e3c_1s_56C | taylor | 8_11 | -0.391376 | 0.016432 |
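One possible approach, sketched under the assumption that the first column is the (unnamed) index: group on the index together with the id and pair columns, then use named aggregation on `value` so the output columns come out already called `value` and `error`. Because the index has no name, it is passed as `df.index` rather than by label. Named aggregation needs pandas 0.25 or later. `std` computes the sample standard deviation (`ddof=1`) by default, and `subdir` disappears simply because only `value` is selected.

```python
import pandas as pd

# Sample data; assumes the first column shown is the (unnamed) index.
df = pd.DataFrame(
    {
        "id": ["taylor"] * 10,
        "pair": ["6_13"] * 5 + ["8_11"] * 5,
        "value": [-0.398716, -0.397820, -0.397310, -0.390520, -0.377390,
                  -0.393604, -0.392899, -0.392473, -0.389959, -0.387946],
        "subdir": [f"run{i}" for i in range(1, 6)] * 2,
    },
    index=["taylor_1e3c_1s_56C"] * 10,
)

# Group on the index plus the id/pair columns; selecting only "value" drops subdir.
out = (
    df.groupby([df.index, "id", "pair"])["value"]
    .agg(value="mean", error="std")      # std uses ddof=1 (sample standard deviation)
    .reset_index(level=["id", "pair"])   # move id/pair back to columns, keep the original index
)
print(out)
```

With this data the means come out as -0.392351 and -0.391376, matching the `value` column above.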

How should I do it in pandas?

A previous question showed me how to get just the mean, but it's not clear to me how to generalise that to also get an error estimate (the standard deviation).
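Going from the mean alone to several statistics is mostly a matter of passing a list of function names to `agg`, which returns one column per statistic. The sketch below guesses that the earlier answer used something like `groupby(...).mean()` (the question isn't linked, so that is an assumption) and again assumes the first column is the index. It also computes `sem`: strictly speaking, the standard error of the mean is the standard deviation divided by the square root of the number of runs, and pandas provides it as `sem`, so that may be the column you want if you mean the uncertainty of the mean rather than the spread of the runs.

```python
import pandas as pd

# Same sample data as in the question (assumed: first column is the index).
df = pd.DataFrame(
    {
        "id": ["taylor"] * 10,
        "pair": ["6_13"] * 5 + ["8_11"] * 5,
        "value": [-0.398716, -0.397820, -0.397310, -0.390520, -0.377390,
                  -0.393604, -0.392899, -0.392473, -0.389959, -0.387946],
        "subdir": [f"run{i}" for i in range(1, 6)] * 2,
    },
    index=["taylor_1e3c_1s_56C"] * 10,
)

grouped = df.groupby([df.index, "id", "pair"])["value"]

# Mean only: one statistic per group.
means = grouped.mean()

# Several statistics at once: a list gives one output column per function.
# sem is the standard error of the mean, i.e. std / sqrt(n) with n = 5 runs here.
stats = grouped.agg(["mean", "std", "sem"])
stats = stats.rename(columns={"mean": "value", "std": "error"})
print(stats)
```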

Thanks very much, everyone :)