I'm writing a perceptron learning algorithm on simulated data. However the program runs into infinite loop and weight tends to be very large. What should I do to debug my program? If you can point out what's going wrong, it'd be also appreciated.

What I'm doing here is first generate some data points at random and assign label to them according to the linear target function. Then use perceptron learning to learn this linear function. Below is the labelled data if I use 100 samples.

Also, this is Exercise 1.4 on book Learning from Data.

import numpy as np

a = 1

b = 1

def target(x):

if x[1]>a*x[0]+b:

return 1

else:

return -1

def gen_y(X_sim):

return np.array([target(x) for x in X_sim])

def pcp(X,y):

w = np.zeros(2)

Z = np.hstack((X,np.array([y]).T))

while ~all(z[2]*np.dot(w,z[:2])>0 for z in Z): # some training sample is missclassified

i = np.where(y*np.dot(w,x)<0 for x in X)[0][0] # update the weight based on misclassified sample

print(i)

w = w + y[i]*X[i]

return w

if __name__ == '__main__':

X = np.random.multivariate_normal([1,1],np.diag([1,1]),20)

y = gen_y(X)

w = pcp(X,y)

print(w)

The w I got is going to infinity.

[-1.66580705 1.86672845]

[-3.3316141 3.73345691]

[-4.99742115 5.60018536]

[-6.6632282 7.46691382]

[-8.32903525 9.33364227]

[ -9.99484231 11.20037073]

[-11.66064936 13.06709918]

[-13.32645641 14.93382763]

[-14.99226346 16.80055609]

[-16.65807051 18.66728454]

[-18.32387756 20.534013 ]

[-19.98968461 22.40074145]

[-21.65549166 24.26746991]

[-23.32129871 26.13419836]

[-24.98710576 28.00092682]

[-26.65291282 29.86765527]

[-28.31871987 31.73438372]

[-29.98452692 33.60111218]

[-31.65033397 35.46784063]

[-33.31614102 37.33456909]

[-34.98194807 39.20129754]

[-36.64775512 41.068026 ]

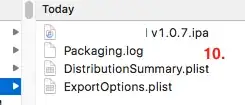

The textbook says:

The question is here:

Aside question: I actually don't get why this update rule will work. Is there a good geometric intuition of how this works? Clearly the book has given none. The update rule is simply w(t+1)=w(t)+y(t)x(t) wherever x,y is misclassified i.e. y!=sign(w^T*x)

Following one of the answer,

import numpy as np

np.random.seed(0)

a = 1

b = 1

def target(x):

if x[1]>a*x[0]+b:

return 1

else:

return -1

def gen_y(X_sim):

return np.array([target(x) for x in X_sim])

def pcp(X,y):

w = np.ones(3)

Z = np.hstack((np.array([np.ones(len(X))]).T,X,np.array([y]).T))

while not all(z[3]*np.dot(w,z[:3])>0 for z in Z): # some training sample is missclassified

print([z[3]*np.dot(w,z[:3])>0 for z in Z])

print(not all(z[3]*np.dot(w,z[:3])>0 for z in Z))

i = np.where(z[3]*np.dot(w,z[:3])<0 for z in Z)[0][0] # update the weight based on misclassified sample

w = w + Z[i,3]*Z[i,:3]

print([z[3]*np.dot(w,z[:3])>0 for z in Z])

print(not all(z[3]*np.dot(w,z[:3])>0 for z in Z))

print(i,w)

return w

if __name__ == '__main__':

X = np.random.multivariate_normal([1,1],np.diag([1,1]),20)

y = gen_y(X)

# import matplotlib.pyplot as plt

# plt.scatter(X[:,0],X[:,1],c=y)

# plt.scatter(X[1,0],X[1,1],c='red')

# plt.show()

w = pcp(X,y)

print(w)

This is still not working and prints

[False, True, False, False, False, True, False, False, False, False, True, False, False, False, False, False, False, False, False, False]

True

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

0 [ 0. -1.76405235 -0.40015721]

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

0 [-1. -4.52810469 -1.80031442]

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

0 [-2. -7.29215704 -3.20047163]

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

0 [ -3. -10.05620938 -4.60062883]

[True, False, True, True, True, False, True, True, True, True, True, True, True, True, True, True, False, True, True, True]

True

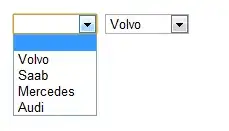

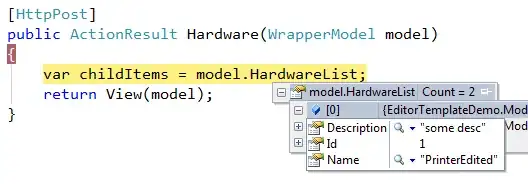

It seems that 1. only the three +1 are false, this is indicated in below graph. 2. index returned by a premise similar to Matlab find is wrong.