-Edit

I created a bug report to track this issue.

I am trying to upload a directory to my server. The folder contains large files, including CT scan images. The upload works, but memory usage keeps growing.

    document.getElementById("folderInput").addEventListener('change', doThing);

    // Take the event as a parameter instead of relying on the deprecated global `event`
    function doThing(event) {
        var filesArray = Array.from(event.target.files);
        readmultifiles(filesArray).then(function(results) {
            console.log("Result read :" + results.length);
        });
    }

    // Read the files one after another and collect every result
    function readmultifiles(files) {
        const results = [];
        return files.reduce(function(p, file) {
            return p.then(function() {
                return readFile(file).then(function(data) {
                    // put this result into the results array
                    results.push(data);
                });
            });
        }, Promise.resolve()).then(function() {
            // make final resolved value be the results array
            console.log("Returning results");
            return results;
        });
    }

    // Wrap FileReader in a promise that resolves with the file's ArrayBuffer
    function readFile(file) {
        const reader = new FileReader();
        return new Promise(function(resolve, reject) {
            reader.onload = function(e) {
                resolve(e.target.result);
            };
            reader.onerror = reader.onabort = reject;
            reader.readAsArrayBuffer(file);
        });
    }

JSFiddle of this code, which is based on the response to this question

In this example, I do nothing with the data but you can see the memory usage growing.
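One thing worth ruling out is the `results` array itself, since it holds every `ArrayBuffer` for as long as the array is reachable. The following is only a minimal sketch of a variant that hands each buffer to a callback and keeps no reference afterwards. The names `forEachFileBuffer` and `handle` are hypothetical, and it assumes `Blob.prototype.arrayBuffer()` is available in the target browser. It does not address whether the engine actually releases the memory.

```javascript
// Hypothetical helper: reads files one at a time and keeps no reference
// to a buffer once `handle` has finished with it.
async function forEachFileBuffer(files, handle) {
  let count = 0;
  for (const file of files) {
    // Blob.prototype.arrayBuffer() is a promise-based alternative to FileReader
    const buffer = await file.arrayBuffer();
    await handle(buffer, file);
    count += 1;
  }
  return count; // number of files processed
}
```

With this shape, the change handler would call `forEachFileBuffer(filesArray, uploadOne)`, where `uploadOne` stands for whatever is done with each buffer.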

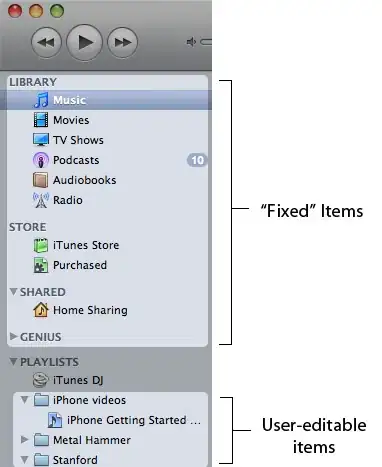

Memory usage before uploading:

Memory usage after uploading:

The uploaded folder is 342 MB, so the growth makes sense, but the memory should be freed afterwards, right?

Do you have any idea how to prevent this, or is there another API I could use instead of FileReader?
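For illustration, one candidate alternative is to read each file in slices with `Blob.prototype.slice()` and `Blob.prototype.arrayBuffer()`, so that only one chunk is in memory at a time. This is a rough sketch under that assumption, not a confirmed fix. `readInChunks` and `onChunk` are hypothetical names, and the chunk size is arbitrary.

```javascript
// Hypothetical helper: reads a Blob/File in fixed-size slices so that only
// one chunk is held at a time.
async function readInChunks(blob, chunkSize, onChunk) {
  if (!(chunkSize > 0)) {
    throw new RangeError("chunkSize must be a positive number");
  }
  let offset = 0;
  while (offset < blob.size) {
    // slice() does not copy data; the bytes are read by arrayBuffer()
    const slice = blob.slice(offset, offset + chunkSize);
    const chunk = await slice.arrayBuffer();
    await onChunk(chunk, offset);
    offset += chunk.byteLength;
  }
  return offset; // total number of bytes read
}
```

Each chunk could then be sent to the server from `onChunk` before the next one is read.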

EDIT-----

I think this is definitely a bug linked to Chrome and V8. The memory is freed when I try the same thing in Firefox. It might be linked to this bug