I'm trying to get the table at http://www.datamystic.com/timezone/time_zones.html into an array so I can process it however I like, preferably in PHP, Python, or JavaScript.

This is the kind of problem that comes up a lot, so rather than looking for help with this specific problem, I'm looking for ideas on how to solve all similar problems.

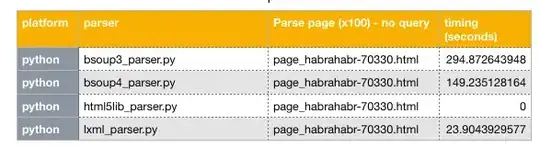

BeautifulSoup is the first thing that comes to mind. Another possibility is copying and pasting the table into TextMate and then running regular expressions over it.

What do you suggest?

This is the script that I ended up writing, but as I said, I'm looking for a more general solution.

    from BeautifulSoup import BeautifulSoup
    import urllib2

    url = 'http://www.datamystic.com/timezone/time_zones.html'
    response = urllib2.urlopen(url)
    html = response.read()

    soup = BeautifulSoup(html)
    # Work on the second table in the page.
    tables = soup.findAll("table")
    table = tables[1]
    rows = table.findAll("tr")

    for row in rows:
        tds = row.findAll('td')
        # Only handle rows with exactly four cells.
        if len(tds) == 4:
            countrycode = tds[1].string
            timezone = tds[2].string
            # Skip rows where either cell has no plain string.
            if countrycode is not None and timezone is not None:
                print "'%s' => '%s'," % (countrycode.strip(), timezone.strip())
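To make "more general" concrete, here is a rough sketch of the direction I have in mind: instead of hard-coding the table index and column count, turn every table in a page into a list of rows and pick what I need afterwards. This is not the script above. It is written for Python 3 and uses only the standard library's `html.parser`. The names `TableExtractor` and `html_tables` are made up for illustration, and it assumes tables are not nested.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect each <table> as a list of rows, each row a list of cell strings.

    Hypothetical helper for illustration; nested tables are not supported.
    """

    def __init__(self):
        super().__init__()
        self.tables = []   # one list of rows per <table> seen so far
        self._row = None   # cells of the row being read, if any
        self._cell = None  # text fragments of the cell being read, if any

    def _end_cell(self):
        # Finish the open cell, collapsing runs of whitespace.
        if self._cell is not None:
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None

    def _end_row(self):
        # Finish the open row (and its open cell, if there is one).
        self._end_cell()
        if self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None

    def handle_starttag(self, tag, attrs):
        # A new start tag also closes whatever was left open, because
        # real-world pages often omit </td> and </tr>.
        if tag == "table":
            self._end_row()
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._end_row()
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._end_cell()
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._end_cell()
        elif tag in ("tr", "table"):
            self._end_row()

    def handle_data(self, data):
        # Keep text only while inside a cell.
        if self._cell is not None:
            self._cell.append(data)


def html_tables(html):
    """Return every table found in the HTML string as nested lists."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.tables
```

With this, the page-specific part shrinks to choosing a table and filtering its rows, e.g. `[row for row in html_tables(page)[1] if len(row) == 4]`, where `page` is the downloaded HTML as a string.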

Comments and suggestions for improving my Python code are welcome, too ;)