I am just getting started with deep reinforcement learning, and I am trying to grasp this concept.

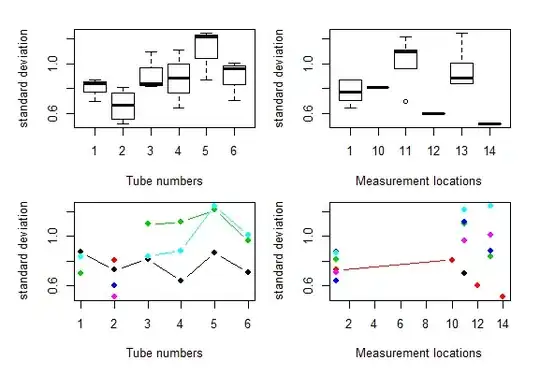

I have this deterministic Bellman equation:
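For context, here is a minimal sketch of a deterministic Bellman backup. It assumes a toy MDP in which taking action a in state s always leads to exactly one next state f(s, a), so V(s) = max_a [R(s, a) + γ V(f(s, a))]. The states, rewards, and discount γ = 0.9 are hypothetical and are not taken from the equation in the image.

```python
# Hypothetical toy MDP: 3 states, 2 actions, deterministic transitions.
# next_state[s][a] is the single state f(s, a) reached by action a in state s.
next_state = [[1, 2], [2, 0], [2, 2]]
# reward[s][a] is the immediate reward R(s, a).
reward = [[0.0, 1.0], [5.0, 0.0], [0.0, 0.0]]
gamma = 0.9


def deterministic_backup(V):
    """One sweep of V(s) = max_a [R(s, a) + gamma * V(f(s, a))]."""
    return [
        max(reward[s][a] + gamma * V[next_state[s][a]] for a in range(len(next_state[s])))
        for s in range(len(V))
    ]


def value_iteration(tol=1e-10):
    """Repeat the backup until the values stop changing."""
    V = [0.0] * len(next_state)
    while True:
        V_new = deterministic_backup(V)
        if max(abs(x - y) for x, y in zip(V, V_new)) < tol:
            return V_new
        V = V_new


V = value_iteration()
print([round(v, 3) for v in V])  # prints [4.5, 5.0, 0.0]
```

Because there is only one possible next state per action, no expectation over next states is needed; the only choice is the max over actions.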

When I introduce stochasticity from the MDP, I get 2.6a.
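As a rough illustration of the stochastic case, here is a minimal sketch assuming the standard form V(s) = max_a Σ_{s'} P(s' | s, a) [R(s, a) + γ V(s')]. In this form P(s' | s, a) belongs to the environment (where an action may land you), and the agent's choice enters only through the max over a. The transition table, rewards, and γ are hypothetical.

```python
# Hypothetical toy MDP with stochastic transitions (3 states, 2 actions).
# P[s][a] maps each reachable next state s' to P(s' | s, a).
P = [
    [{1: 0.8, 0: 0.2}, {2: 1.0}],  # state 0
    [{2: 0.8, 1: 0.2}, {0: 1.0}],  # state 1
    [{2: 1.0}, {2: 1.0}],          # state 2 (absorbing)
]
# reward[s][a] = R(s, a), the expected immediate reward for action a in state s.
reward = [[0.0, 1.0], [5.0, 0.0], [0.0, 0.0]]
gamma = 0.9


def stochastic_backup(V):
    """One sweep of V(s) = max_a sum_{s'} P(s'|s,a) * [R(s,a) + gamma * V(s')]."""
    return [
        max(
            sum(p * (reward[s][a] + gamma * V[s_next]) for s_next, p in P[s][a].items())
            for a in range(len(P[s]))
        )
        for s in range(len(P))
    ]


def value_iteration(tol=1e-10):
    """Repeat the stochastic backup until the values stop changing."""
    V = [0.0] * len(P)
    while True:
        V_new = stochastic_backup(V)
        if max(abs(x - y) for x, y in zip(V, V_new)) < tol:
            return V_new
        V = V_new


V = value_iteration()
print([round(v, 3) for v in V])
```

Compared with the deterministic case, the value of each action is now an expectation over next states, weighted by the environment's transition probabilities.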

Is the assumption in my equation correct? I have seen 2.6a written without a policy symbol (π) on the state-value function, but that does not make sense to me, because I am using the probabilities of the different next states I could end up in, which I think is the same as a policy. And if 2.6a is correct, can I assume the same holds for the rest (2.6b and 2.6c)? If so, I would like to write the action-value function like this:
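To see where a policy symbol comes in, here is a sketch of evaluating the action-value function of a fixed policy π, assuming the standard equations Q^π(s, a) = R(s, a) + γ Σ_{s'} P(s' | s, a) V^π(s') and V^π(s) = Σ_a π(a | s) Q^π(s, a). The two probability tables play different roles: P describes the environment's transitions, and π describes how the agent picks actions. The MDP and the uniform random policy below are hypothetical.

```python
# Hypothetical toy MDP (3 states, 2 actions).
# P[s][a] maps each reachable next state s' to P(s' | s, a): the environment.
P = [
    [{1: 0.8, 0: 0.2}, {2: 1.0}],
    [{2: 0.8, 1: 0.2}, {0: 1.0}],
    [{2: 1.0}, {2: 1.0}],
]
reward = [[0.0, 1.0], [5.0, 0.0], [0.0, 0.0]]  # R(s, a)
gamma = 0.9
# pi[s][a] = pi(a | s): the agent's policy, chosen independently of P.
pi = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]


def evaluate_q(tol=1e-10):
    """Iterative policy evaluation for Q^pi."""
    n_states, n_actions = len(P), len(P[0])
    Q = [[0.0] * n_actions for _ in range(n_states)]
    while True:
        # V^pi(s) = sum_a pi(a|s) * Q^pi(s, a): the policy averages over actions.
        V = [sum(pi[s][a] * Q[s][a] for a in range(n_actions)) for s in range(n_states)]
        # Q^pi(s, a) = R(s, a) + gamma * sum_{s'} P(s'|s,a) * V^pi(s'): P averages over next states.
        Q_new = [
            [reward[s][a] + gamma * sum(p * V[s2] for s2, p in P[s][a].items())
             for a in range(n_actions)]
            for s in range(n_states)
        ]
        delta = max(abs(Q_new[s][a] - Q[s][a]) for s in range(n_states) for a in range(n_actions))
        Q = Q_new
        if delta < tol:
            return Q


Q = evaluate_q()
V = [sum(pi[s][a] * Q[s][a] for a in range(2)) for s in range(3)]
print([[round(q, 3) for q in row] for row in Q])
print([round(v, 3) for v in V])
```

Replacing the π-weighted average over actions with a max over actions gives the Bellman optimality equation, which is one common reason the optimal state-value function is written without π (often as V* instead).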

I am doing it this way because I would like to build up the explanation from a deterministic point of view to a non-deterministic (stochastic) one.

I hope someone out there can help with this one!

Best regards, Søren Koch